Two are Better than One: Joint Entity and Relation Extraction with Table-Sequence Encoders

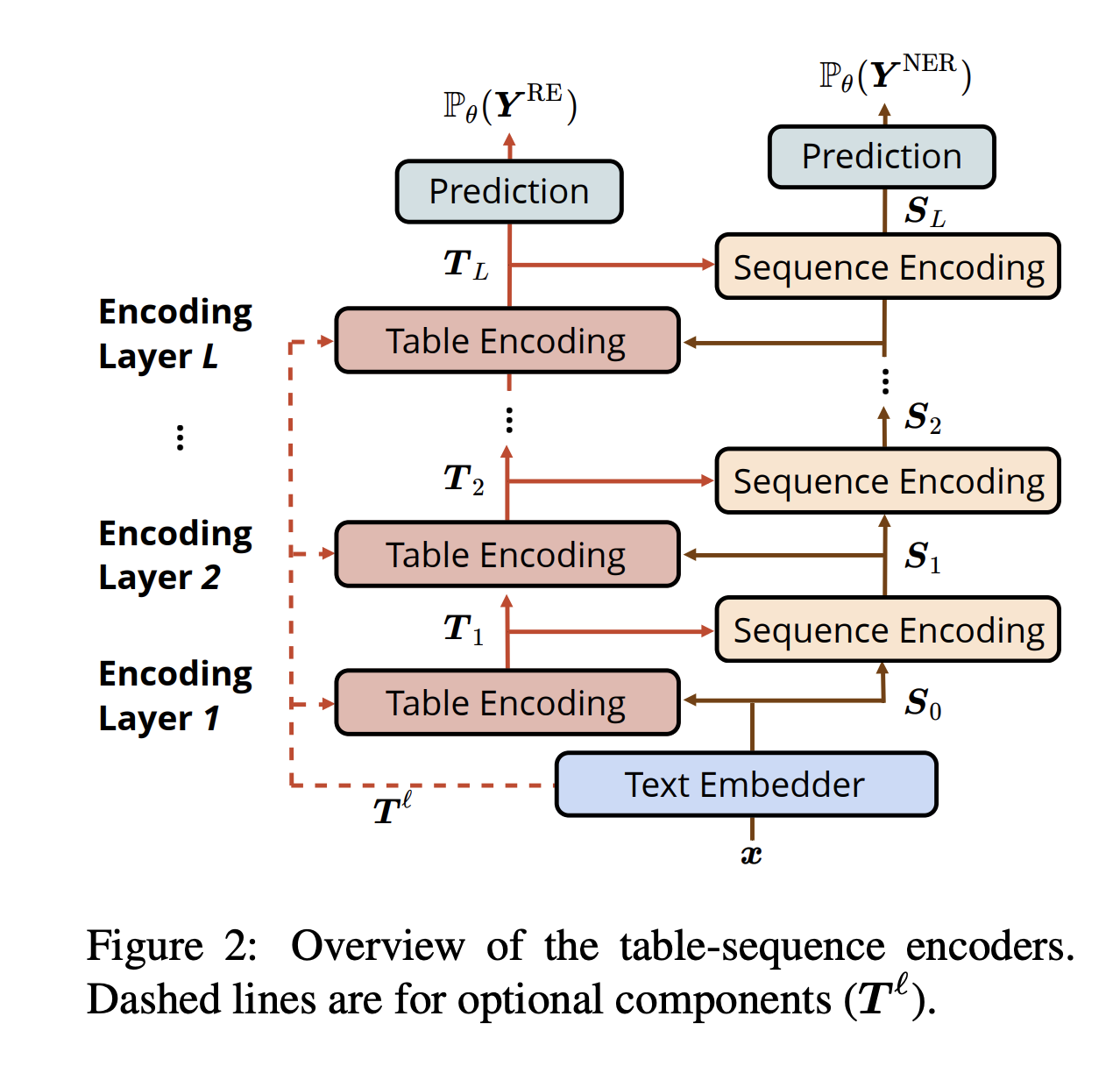

Named entity recognition and relation extraction are two important, fundamental problems. Joint learning algorithms have been proposed to solve both tasks simultaneously, and many of them cast the joint task as a table-filling problem. However, they typically focus on learning a single encoder (usually learning a representation in the form of a table) to capture the information required for both tasks within the same space. We argue that it can be beneficial to design two distinct encoders to capture these two different types of information in the learning process. In this work, we propose the novel *table-sequence encoders*, where two different encoders, a table encoder and a sequence encoder, are designed to help each other in the representation learning process. Our experiments confirm the advantages of having *two* encoders over *one* encoder. On several standard datasets, our model shows significant improvements over existing approaches.
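To make the table-filling formulation mentioned above concrete, the following is a minimal sketch of how gold labels for a sentence might be arranged in a token-by-token table. The labeling scheme (entity types on the diagonal, relation labels on the cells linking head-entity tokens to tail-entity tokens), the function name `fill_table`, and the example sentence with its annotations are all illustrative assumptions, not the exact scheme used in the paper.

```python
from typing import List, Tuple

# Hypothetical annotation formats, chosen for this sketch only.
Entity = Tuple[int, int, str]    # (start token, end token exclusive, entity type)
Relation = Tuple[int, int, str]  # (head entity index, tail entity index, relation label)


def fill_table(
    n_tokens: int,
    entities: List[Entity],
    relations: List[Relation],
    none_label: str = "O",
) -> List[List[str]]:
    """Build an n_tokens x n_tokens label table (an assumed, simplified scheme)."""
    table = [[none_label] * n_tokens for _ in range(n_tokens)]

    # Diagonal cells carry the entity type of each token inside an entity span.
    for start, end, etype in entities:
        for i in range(start, end):
            table[i][i] = etype

    # Off-diagonal cells carry the relation label for every
    # (head token, tail token) pair of a related entity pair.
    for head, tail, label in relations:
        head_start, head_end, _ = entities[head]
        tail_start, tail_end, _ = entities[tail]
        for i in range(head_start, head_end):
            for j in range(tail_start, tail_end):
                table[i][j] = label

    return table


# Illustrative sentence and annotations (made up for this example).
tokens = ["David", "Perkins", "lives", "in", "California"]
entities = [(0, 2, "PER"), (4, 5, "GPE")]
relations = [(0, 1, "PHYS")]

table = fill_table(len(tokens), entities, relations)
for token, row in zip(tokens, table):
    print(f"{token:>10}", row)
```

In this sketch a single table holds both kinds of labels, which mirrors the single-encoder view the abstract describes; the proposed approach instead learns a sequence representation for entities alongside a table representation for relations.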

EMNLP 2020

FewRel

ACE 2005