Translating Visual Art into Music

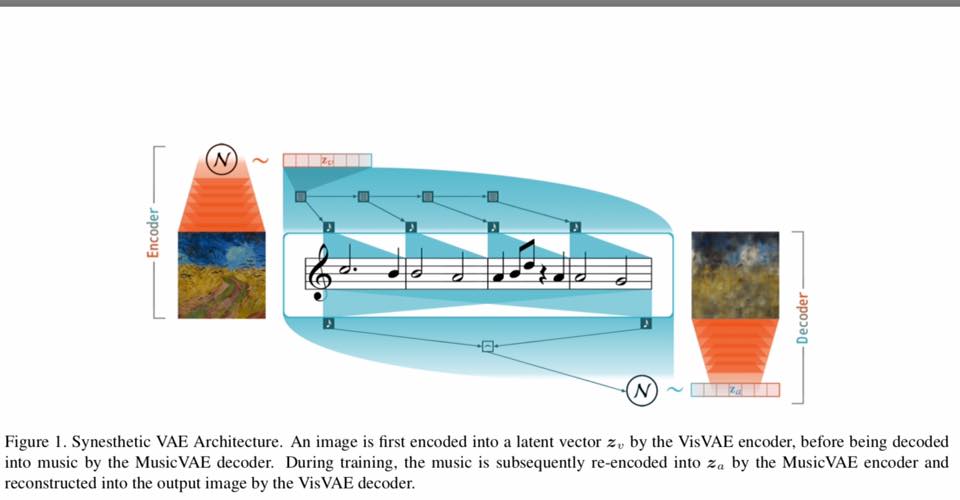

The Synesthetic Variational Autoencoder (SynVAE) introduced in this work learns a consistent mapping between the visual and auditory sensory modalities without requiring paired datasets. A quantitative evaluation on MNIST and the Behance Artistic Media dataset (BAM) shows that SynVAE retains sufficient information content during translation while maintaining cross-modal latent space consistency. In a qualitative evaluation, human evaluators were furthermore able to match musical samples with the images that generated them with accuracies of up to 73%.
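To make the idea of cross-modal latent space consistency more concrete, the following is a minimal sketch of one way such a check could be written. It is not the SynVAE implementation: the function `latent_consistency_error` and the three toy models (`toy_encode_image`, `toy_decode_music`, `toy_encode_music`) are hypothetical stand-ins, and the choice of mean squared error as the consistency measure is an assumption. The sketch encodes an image into a latent vector, decodes that vector into a "music" representation, re-encodes the music, and compares the two latent vectors.

```python
from typing import Callable, List, Sequence

Vector = List[float]


def latent_consistency_error(
    images: Sequence[Vector],
    encode_image: Callable[[Vector], Vector],
    decode_music: Callable[[Vector], Vector],
    encode_music: Callable[[Vector], Vector],
) -> float:
    """Mean squared distance between each image latent and the latent
    recovered from the music generated for that image (assumed metric)."""
    total = 0.0
    count = 0
    for image in images:
        z_image = encode_image(image)    # image -> latent
        music = decode_music(z_image)    # latent -> music
        z_music = encode_music(music)    # music -> latent
        total += sum((a - b) ** 2 for a, b in zip(z_image, z_music))
        count += len(z_image)
    return total / count


# Hypothetical toy models, used only to make the sketch runnable.
def toy_encode_image(image: Vector) -> Vector:
    # Two-dimensional latent: mean brightness and last-minus-first contrast.
    return [sum(image) / len(image), image[-1] - image[0]]


def toy_decode_music(z: Vector) -> Vector:
    # "Music" as two pitch values offset from a base pitch of 60.
    return [60.0 + 12.0 * z[0], 60.0 + 12.0 * z[1]]


def toy_encode_music(music: Vector) -> Vector:
    # Exact inverse of toy_decode_music, so latents are preserved.
    return [(music[0] - 60.0) / 12.0, (music[1] - 60.0) / 12.0]


def lossy_encode_music(music: Vector) -> Vector:
    # An encoder that discards all information, for contrast.
    return [0.0, 0.0]


if __name__ == "__main__":
    toy_images = [[0.0, 1.0], [1.0, 1.0]]
    print(latent_consistency_error(
        toy_images, toy_encode_image, toy_decode_music, toy_encode_music))
    print(latent_consistency_error(
        toy_images, toy_encode_image, toy_decode_music, lossy_encode_music))
```

With the invertible toy encoder the error is essentially zero, while the lossy encoder yields a clearly larger value. A lower error under this assumed metric would indicate that the generated audio still carries the information in the image's latent code.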


CIFAR-10

MNIST

BAM!
