Transformers for molecular property prediction: Lessons learned from the past five years

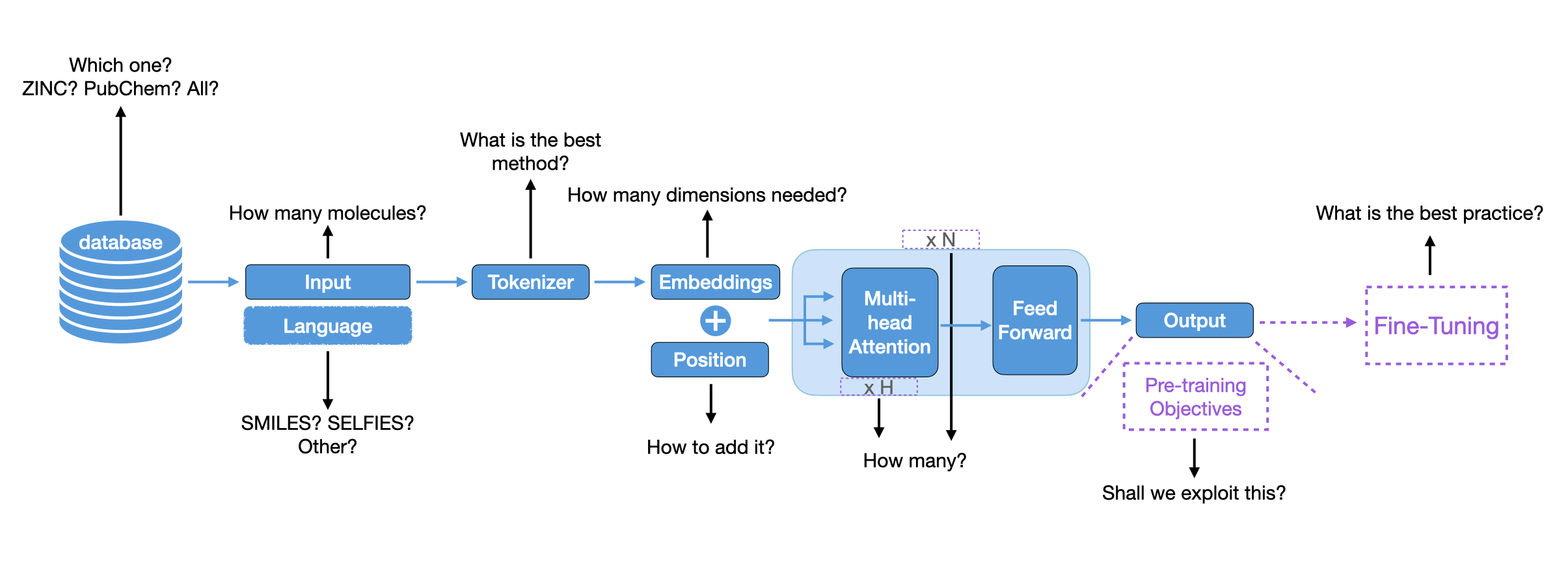

Molecular Property Prediction (MPP) is vital for drug discovery, crop protection, and environmental science. Over the last decades, diverse computational techniques have been developed, from using simple physical and chemical properties and molecular fingerprints in statistical models and classical machine learning to advanced deep learning approaches. In this review, we aim to distill insights from current research on employing transformer models for MPP. We analyze the currently available models and explore key questions that arise when training and fine-tuning a transformer model for MPP. These questions encompass the choice and scale of the pre-training data, optimal architecture selections, and promising pre-training objectives. Our analysis highlights areas not yet covered in current research, inviting further exploration to enhance the field's understanding. Additionally, we address the challenges in comparing different models, emphasizing the need for standardized data splitting and robust statistical analysis.

PDF Abstract

MoleculeNet

MoleculeNet