Training Generative Adversarial Networks with Limited Data

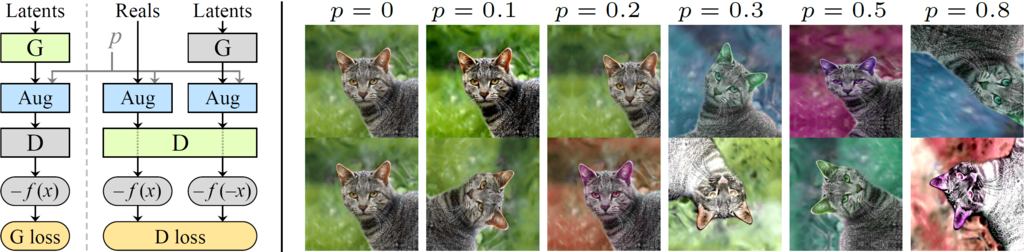

Training generative adversarial networks (GANs) using too little data typically leads to discriminator overfitting, causing training to diverge. We propose an adaptive discriminator augmentation mechanism that significantly stabilizes training in limited data regimes. The approach does not require changes to loss functions or network architectures, and is applicable both when training from scratch and when fine-tuning an existing GAN on another dataset. We demonstrate, on several datasets, that good results are now possible using only a few thousand training images, often matching StyleGAN2 results with an order of magnitude fewer images. We expect this to open up new application domains for GANs. We also find that the widely used CIFAR-10 is, in fact, a limited data benchmark, and improve the record FID from 5.59 to 2.42.
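The abstract does not spell out how the augmentation strength is adapted. The sketch below is one plausible reading, assuming the controller follows the overfitting heuristic described in the full paper: track the mean sign of the discriminator's outputs on real images and nudge the augmentation probability `p` toward a target value of that statistic. The function name, parameter names and default values are illustrative assumptions, not the official implementation.

```python
import numpy as np

def update_augment_p(p, d_real_logits, target=0.6, batch_size=64,
                     interval=4, ada_kimg=500):
    """One hypothetical adjustment step for the augmentation probability p.

    All defaults are assumptions chosen for illustration.
    """
    # Overfitting indicator in [-1, 1]: values near 1 mean the discriminator
    # assigns a positive (real) output to almost every real training image.
    r_t = float(np.mean(np.sign(d_real_logits)))
    # Fixed step size, chosen so that p could move from 0 to 1 over
    # `ada_kimg` thousand images if every update pushed in the same direction.
    step = (batch_size * interval) / (ada_kimg * 1000)
    # Increase p when the indicator exceeds the target, decrease it otherwise.
    p = p + np.sign(r_t - target) * step
    # Keep p a valid probability.
    return float(np.clip(p, 0.0, 1.0)), r_t
```

In a training loop, a function like this would be called every `interval` minibatches with the discriminator outputs collected since the previous call, and the returned `p` would set how often each augmentation is applied to the images shown to the discriminator.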

NeurIPS 2020

Datasets

Results from the Paper

| Task | Dataset | Model | Metric Name | Metric Value | Global Rank |
|---|---|---|---|---|---|
| Image Generation | AFHQ Cat | StyleGAN2-ADA | clean-KID | 0.71 ± 0.02 | #2 |
| Image Generation | AFHQ Cat | StyleGAN2-ADA | clean-FID | 3.28 ± 0.02 | #2 |
| Image Generation | AFHQ Cat | StyleGAN2-ADA | FID | 3.55 | #4 |
| Image Generation | AFHQ Dog | StyleGAN2-ADA | clean-KID | 1.28 ± 0.02 | #2 |
| Image Generation | AFHQ Dog | StyleGAN2-ADA | clean-FID | 7.61 ± 0.02 | #2 |
| Image Generation | AFHQ Dog | StyleGAN2-ADA | FID | 7.41 | #4 |
| Image Generation | AFHQ Wild | StyleGAN2-ADA | clean-KID | 0.44 ± 0.01 | #2 |
| Image Generation | AFHQ Wild | StyleGAN2-ADA | clean-FID | 3.00 ± 0.01 | #2 |
| Image Generation | AFHQ Wild | StyleGAN2-ADA | FID | 3.05 | #4 |
| Conditional Image Generation | ArtBench-10 (32x32) | StyleGAN2 + ADA | FID | 2.625 | #1 |
| 10-shot image generation | Babies | TGAN + ADA | FID | 97.91 | #6 |
| Conditional Image Generation | CIFAR-10 | StyleGAN2-ADA | Inception score | 10.14 | #3 |
| Conditional Image Generation | CIFAR-10 | StyleGAN2-ADA | FID | 2.42 | #3 |
| Image Generation | FFHQ 1024 x 1024 | StyleGAN2 ADA+bCR | FID | 3.62 | #10 |
| Image Generation | FFHQ 256 x 256 | StyleGAN2 + ADA (DINOv2) | FD | 514.78 | #8 |
| Image Generation | FFHQ 256 x 256 | StyleGAN2 + ADA (DINOv2) | Precision | 0.59 | #9 |
| Image Generation | FFHQ 256 x 256 | StyleGAN2 + ADA (DINOv2) | Recall | 0.06 | #10 |
| Image Generation | FFHQ 256 x 256 | StyleGAN2 + ADA | FID | 3.62 | #13 |
| Image Generation | Pokemon 256x256 | StyleGAN2-ADA | FID | 40.38 | #3 |
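Most rows above report FID, the Fréchet distance between two Gaussians fitted to feature embeddings of real and generated images (lower is better). As a rough illustration of what the number measures, the sketch below computes that distance from two precomputed feature arrays. Feature extraction with an Inception network is omitted, and the function name and input layout (one row per image) are assumptions made for this example.

```python
import numpy as np

def frechet_distance(feats_a, feats_b):
    """Frechet distance between Gaussians fitted to two (n, d) feature arrays, d >= 2."""
    mu_a, mu_b = feats_a.mean(axis=0), feats_b.mean(axis=0)
    sigma_a = np.cov(feats_a, rowvar=False)
    sigma_b = np.cov(feats_b, rowvar=False)
    # Tr((sigma_a sigma_b)^(1/2)) equals the sum of the square roots of the
    # eigenvalues of the product, which are real and non-negative in exact
    # arithmetic; clip small negative values caused by rounding.
    eigvals = np.linalg.eigvals(sigma_a @ sigma_b)
    tr_sqrt = np.sqrt(np.clip(eigvals.real, 0.0, None)).sum()
    diff = mu_a - mu_b
    return float(diff @ diff + np.trace(sigma_a) + np.trace(sigma_b) - 2.0 * tr_sqrt)

# Usage with synthetic stand-in features: shifting every vector by (3, 4, 0)
# leaves the covariance unchanged, so the distance is the squared shift length.
rng = np.random.default_rng(0)
x = rng.normal(size=(200, 3))
shifted = x + np.array([3.0, 4.0, 0.0])
print(frechet_distance(x, x), frechet_distance(x, shifted))
```

Reported FID values also depend on the feature extractor, image preprocessing and sample counts, so scores from a sketch like this are not directly comparable to the numbers in the table.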

MetFaces

CIFAR-10

FFHQ

AFHQ

ArtBench-10 (32x32)