Towards Efficient Visual Adaption via Structural Re-parameterization

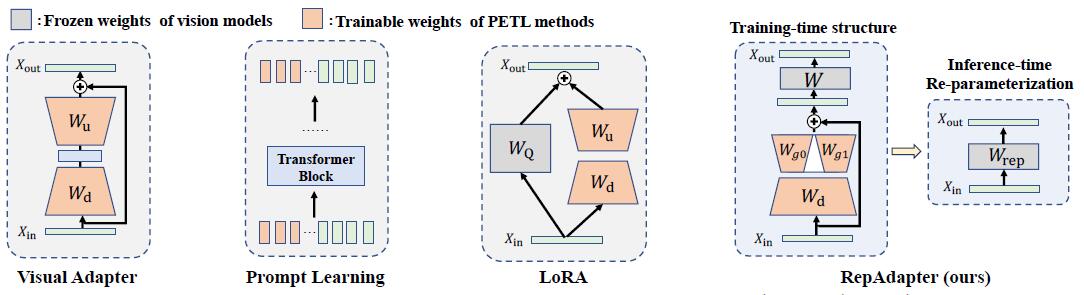

Parameter-efficient transfer learning (PETL) is an emerging research spot aimed at inexpensively adapting large-scale pre-trained models to downstream tasks. Recent advances have achieved great success in saving storage costs for various pre-trained models by updating a small number of parameters instead of full tuning. However, we notice that most existing PETL methods still incur non-negligible latency during inference. In this paper, we propose a parameter-efficient and computational friendly adapter for giant vision models, called RepAdapter. Specifically, we first prove that common adaptation modules can also be seamlessly integrated into most giant vision models via our structural re-parameterization, thereby achieving zero-cost during inference. We then investigate the sparse design and effective placement of adapter structure, helping our RepAdaper obtain other advantages in terms of parameter efficiency and performance. To validate RepAdapter, we conduct extensive experiments on 27 benchmark datasets of three vision tasks, i.e., image and video classifications and semantic segmentation. Experimental results show the superior performance and efficiency of RepAdapter than the state-of-the-art PETL methods. For instance, RepAdapter outperforms full tuning by +7.2% on average and saves up to 25% training time, 20% GPU memory, and 94.6% storage cost of ViT-B/16 on VTAB-1k. The generalization ability of RepAdapter is also well validated by a bunch of vision models. Our source code is released at https://github.com/luogen1996/RepAdapter.

PDF Abstract

ImageNet

ImageNet

HMDB51

HMDB51

ImageNet-A

ImageNet-A