Multi-branch and Multi-scale Attention Learning for Fine-Grained Visual Categorization

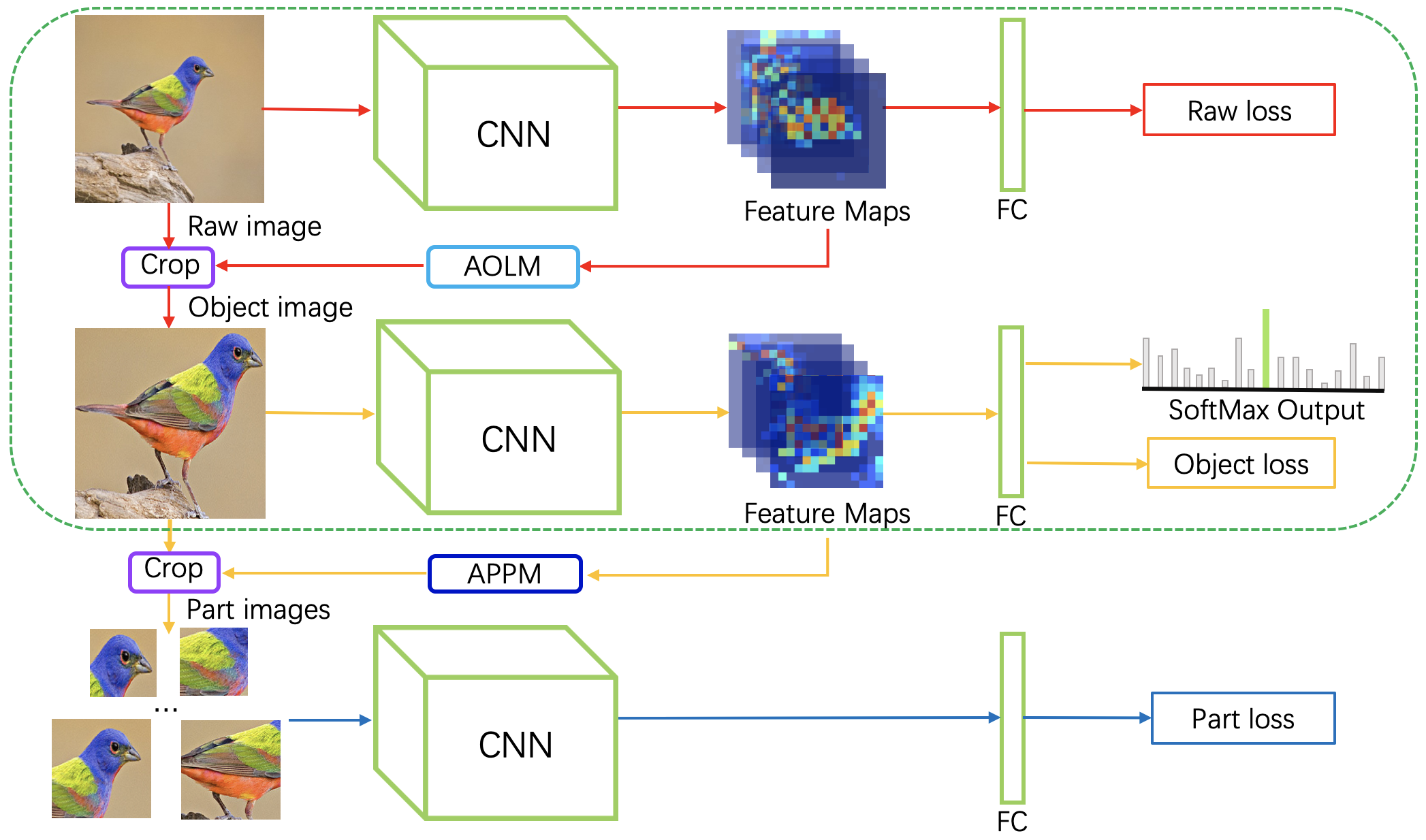

The ImageNet Large Scale Visual Recognition Challenge (ILSVRC) has been one of the most authoritative academic competitions in computer vision (CV) in recent years. However, applying ILSVRC's annual champion directly to fine-grained visual categorization (FGVC) tasks does not achieve good performance. In FGVC tasks, small inter-class variations and large intra-class variations make classification challenging. Our attention object location module (AOLM) predicts the position of the object, and our attention part proposal module (APPM) proposes informative part regions, without the need for bounding-box or part annotations. The resulting object images contain almost the entire structure of the object along with more detail, the part images cover many different scales and carry more fine-grained features, and the raw images contain the complete object. These three kinds of training images are supervised by our multi-branch network. As a result, our multi-branch and multi-scale attention learning network (MMAL-Net) has good classification ability and is robust to images of different scales. Our approach can be trained end-to-end while providing short inference time. Comprehensive experiments demonstrate that our approach achieves state-of-the-art results on the CUB-200-2011, FGVC-Aircraft and Stanford Cars datasets. Our code will be available at https://github.com/ZF1044404254/MMAL-Net
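
To make the idea of locating an object without bounding-box annotations more concrete, here is a minimal sketch of one common approach: sum the convolutional feature maps over channels, keep the positions whose activation exceeds the mean, and take the enclosing box. The abstract does not specify how AOLM works internally, so this is an illustrative assumption rather than the authors' implementation; the function name `locate_object` and its arguments are hypothetical.

```python
import numpy as np


def locate_object(feature_maps, image_hw):
    """Estimate an object box from conv activations (illustrative sketch only).

    feature_maps: array of shape (C, H, W), e.g. the output of a final
                  convolutional layer (hypothetical input).
    image_hw:     (height, width) of the input image in pixels.
    Returns (x0, y0, x1, y1) in image coordinates.
    """
    img_h, img_w = image_hw
    # Aggregate over channels to get a single (H, W) activation map.
    activation = feature_maps.sum(axis=0)
    # Assumption: positions above the mean activation belong to the object.
    mask = activation > activation.mean()

    rows = np.flatnonzero(mask.any(axis=1))
    cols = np.flatnonzero(mask.any(axis=0))
    if rows.size == 0:
        # No position stands out: fall back to the whole image.
        return (0, 0, img_w, img_h)

    # Map feature-map cells back to pixel coordinates.
    stride_y = img_h // activation.shape[0]
    stride_x = img_w // activation.shape[1]
    x0, x1 = int(cols[0]) * stride_x, (int(cols[-1]) + 1) * stride_x
    y0, y1 = int(rows[0]) * stride_y, (int(rows[-1]) + 1) * stride_y
    return (x0, y0, x1, y1)
```

The returned box could then be used to crop the raw image and resize the crop into an object-level training image, which is the role the abstract assigns to the object branch. A fuller version would likely also filter out small spurious regions before taking the box; that step is omitted here for brevity.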

CUB-200-2011

Stanford Cars

FGVC-Aircraft
