The Weighted Tsetlin Machine: Compressed Representations with Weighted Clauses

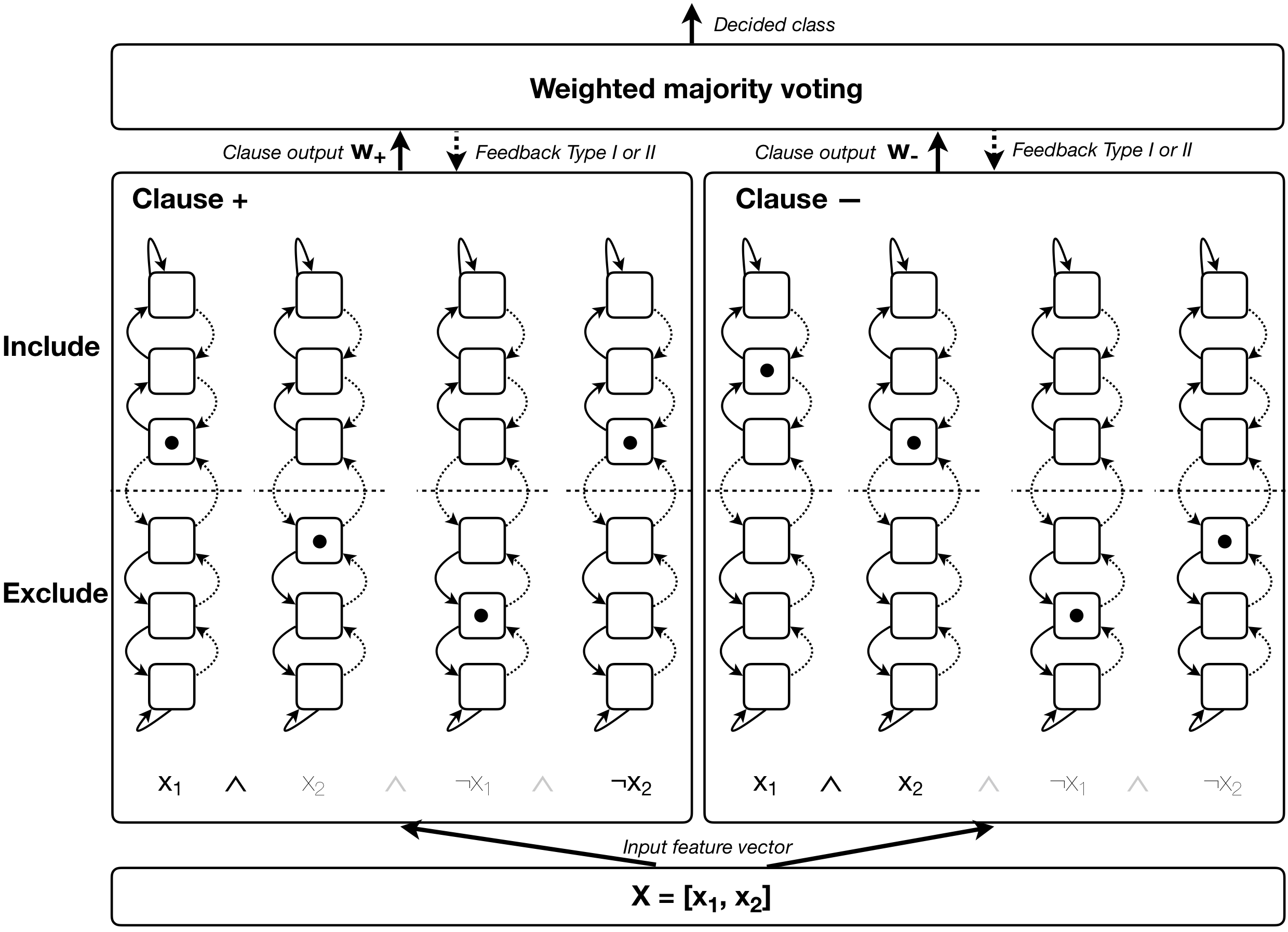

The Tsetlin Machine (TM) is an interpretable mechanism for pattern recognition that constructs conjunctive clauses from data. The clauses capture frequent patterns with high discriminating power, and each additional clause increases expressive power. However, the resulting accuracy gain comes at the cost of linear growth in computation time and memory usage. In this paper, we present the Weighted Tsetlin Machine (WTM), which reduces computation time and memory usage by weighting the clauses. Real-valued weighting allows one clause to replace several, and supports fine-tuning the impact of each clause. Our novel scheme learns the composition of the clauses and their weights simultaneously. Furthermore, we increase training efficiency by replacing $k$ Bernoulli trials of success probability $p$ with a uniform sample whose size is drawn from a binomial distribution with mean $p k$. In our empirical evaluation, the WTM achieved the same accuracy as the TM on MNIST, IMDb, and Connect-4 while requiring only $1/4$, $1/3$, and $1/50$ of the clauses, respectively. With the same number of clauses, the WTM outperformed the TM, obtaining peak test accuracies of $98.63\%$, $90.37\%$, and $87.91\%$, respectively. Finally, our novel sampling scheme reduced sample generation time by a factor of $7$.
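The two ideas in the abstract can be illustrated with a minimal Python sketch. It is not the authors' implementation: the function names (`draw_binomial`, `sample_selected_indices`, `weighted_vote`) are hypothetical, clause polarity and the weight-learning rule are omitted, and only the standard library is assumed. The first part draws the number of successes from a binomial distribution and then picks that many indices uniformly without replacement, which yields the same distribution over selected indices as $k$ independent Bernoulli trials. The second part shows why a single weighted clause can stand in for several identical unweighted ones.

```python
import random


def draw_binomial(k, p, rng):
    """Return the number of successes in k Bernoulli(p) trials."""
    if hasattr(rng, "binomialvariate"):  # available from Python 3.12
        return rng.binomialvariate(k, p)
    # Fallback for older interpreters: explicit trials (slow, same distribution).
    return sum(rng.random() < p for _ in range(k))


def sample_selected_indices(k, p, rng):
    """Select indices in range(k) as if each were kept with probability p.

    Instead of k separate trials, draw the sample size m ~ Binomial(k, p)
    and then choose m distinct indices uniformly at random.
    """
    m = draw_binomial(k, p, rng)
    return sorted(rng.sample(range(k), m))


def weighted_vote(clause_outputs, weights):
    """Sum of clause outputs (0 or 1), each scaled by its real-valued weight."""
    return sum(w * c for w, c in zip(weights, clause_outputs))


if __name__ == "__main__":
    rng = random.Random(42)
    print(sample_selected_indices(20, 0.25, rng))
    # One clause with weight 3 votes like three identical clauses with weight 1.
    print(weighted_vote([1], [3.0]), weighted_vote([1, 1, 1], [1.0, 1.0, 1.0]))
```

With fractional weights such as `2.5`, the same vote sum has no exact counterpart among unweighted clauses, which is the fine-tuning the abstract refers to.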


MNIST


IMDb Movie Reviews
