The Spotlight: A General Method for Discovering Systematic Errors in Deep Learning Models

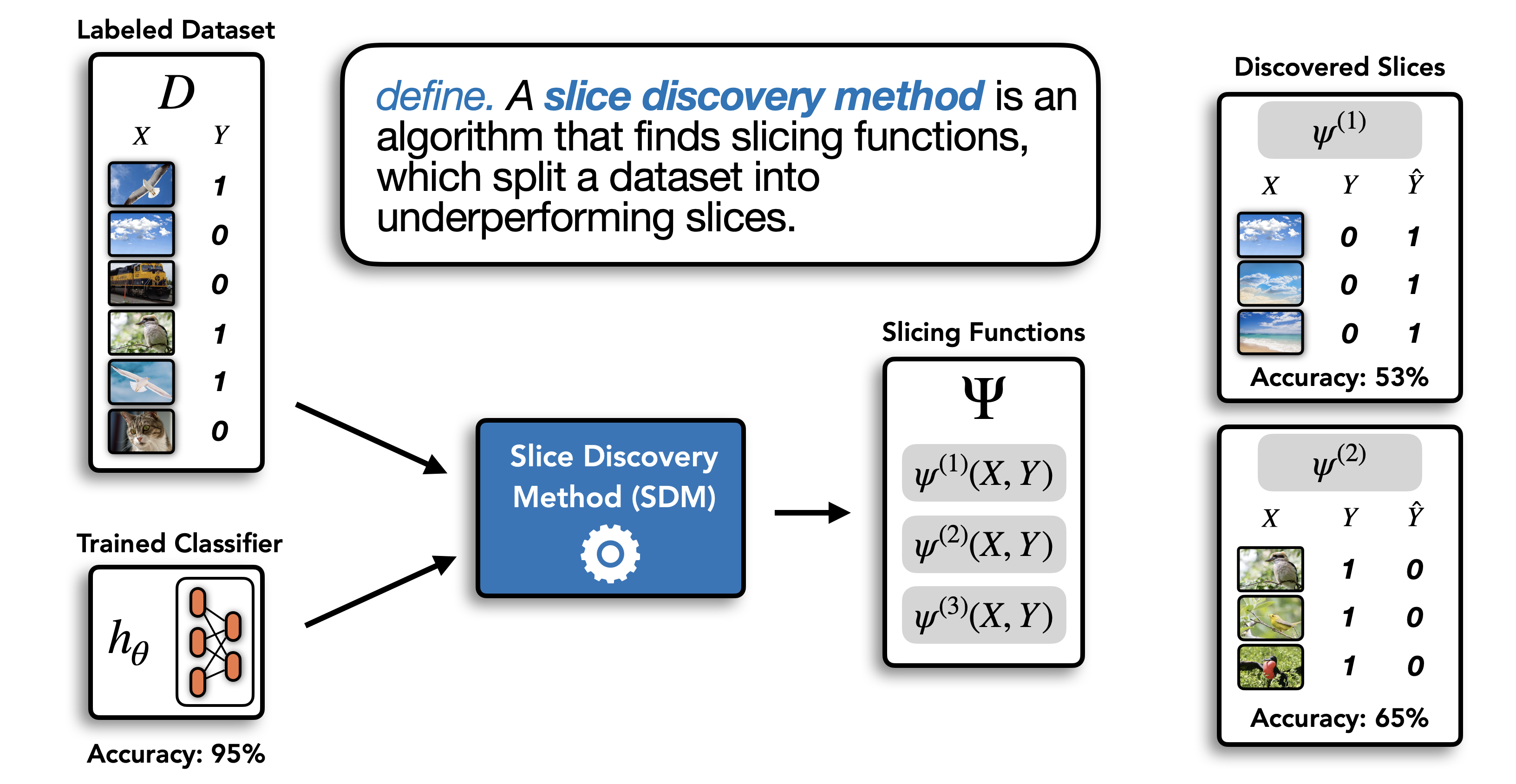

Supervised learning models often make systematic errors on rare subsets of the data. When these subsets correspond to explicit labels in the data (e.g., gender, race), such poor performance can be identified straightforwardly. This paper introduces a method for discovering systematic errors that do not correspond to such explicitly labelled subgroups. The key idea is that similar inputs tend to have similar representations in the final hidden layer of a neural network. We leverage this structure by "shining a spotlight" on this representation space to find contiguous regions where the model performs poorly. We show that the spotlight surfaces semantically meaningful areas of weakness in a wide variety of existing models spanning computer vision, NLP, and recommender systems.
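To make the idea of searching the representation space concrete, here is a minimal sketch. It is a simplified stand-in and not the paper's procedure: it assumes you already have one final-layer embedding and one loss value per example, and it searches by brute force over k-nearest-neighbour balls centred on each example, returning the ball with the highest average loss. The function name `find_spotlight` and the parameter `k` are hypothetical, chosen only for this illustration.

```python
import numpy as np


def find_spotlight(embeddings, losses, k):
    """Find the k-nearest-neighbour ball with the highest mean loss.

    Simplified illustration (an assumption, not the paper's algorithm):
    every example is tried as a candidate center, and its k nearest
    examples in embedding space form the candidate region.

    Returns (center_index, sorted_member_indices, mean_loss).
    """
    embeddings = np.asarray(embeddings, dtype=float)
    losses = np.asarray(losses, dtype=float)
    n = embeddings.shape[0]
    if not 1 <= k <= n:
        raise ValueError("k must be between 1 and the number of examples")

    # Pairwise squared Euclidean distances, shape (n, n). Brute force: O(n^2).
    sq_norms = (embeddings ** 2).sum(axis=1)
    dists = sq_norms[:, None] + sq_norms[None, :] - 2.0 * embeddings @ embeddings.T

    # For each candidate center, the indices of its k closest examples
    # (the center itself is normally among them, at distance ~0).
    neighbours = np.argpartition(dists, k - 1, axis=1)[:, :k]

    # Average loss inside each candidate region; keep the worst one.
    mean_losses = losses[neighbours].mean(axis=1)
    best = int(np.argmax(mean_losses))
    return best, np.sort(neighbours[best]), float(mean_losses[best])


# Synthetic usage: a large low-loss cluster and a small high-loss cluster.
rng = np.random.default_rng(0)
common = rng.normal(loc=0.0, scale=0.5, size=(50, 2))
rare = rng.normal(loc=10.0, scale=0.5, size=(10, 2))
demo_embeddings = np.vstack([common, rare])
demo_losses = np.concatenate([np.full(50, 0.1), np.full(10, 2.0)])

center, members, mean_loss = find_spotlight(demo_embeddings, demo_losses, k=10)
print(center, members, mean_loss)
```

The hard neighbourhood and exhaustive search keep the sketch short; a soft, differentiable weighting over examples would scale better and avoid the sharp boundary, at the cost of an optimization loop. The choice of `k` plays the role of a minimum region size: too small a value lets the search lock onto a handful of outliers rather than a systematic failure.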

SQuAD

MovieLens
