The AdobeIndoorNav Dataset: Towards Deep Reinforcement Learning based Real-world Indoor Robot Visual Navigation

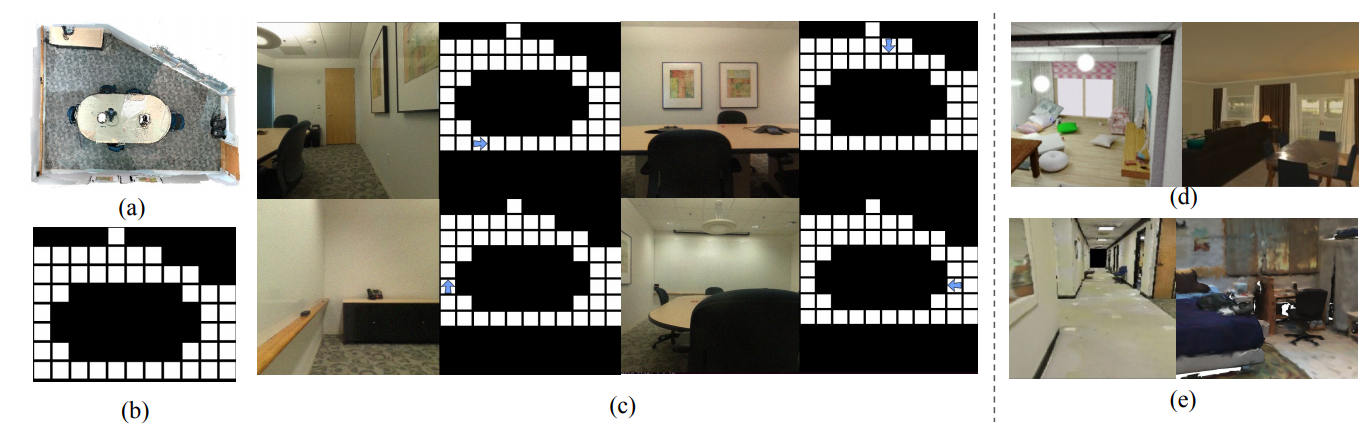

Deep reinforcement learning (DRL) has demonstrated its potential for learning a model-free navigation policy for robot visual navigation. However, this data-demanding approach relies on a large number of navigation trajectories for training. Existing datasets that support training such robot navigation algorithms consist of either 3D synthetic scenes or reconstructed scenes. Synthetic data suffers from a domain gap to real-world scenes, while visual inputs rendered from 3D reconstructed scenes have undesired holes and artifacts. In this paper, we present a new dataset collected in the real world to facilitate research in DRL-based visual navigation. Our dataset includes 3D reconstructions of real-world scenes as well as densely captured real 2D images from those scenes. It provides high-quality visual inputs with real-world scene complexity to the robot at dense grid locations. We further study and benchmark one recent DRL-based navigation algorithm and present our attempts and thoughts on improving its generalizability to unseen test targets in the scenes.

AdobeIndoorNav

ScanNet

Matterport3D

AI2-THOR

SceneNet
