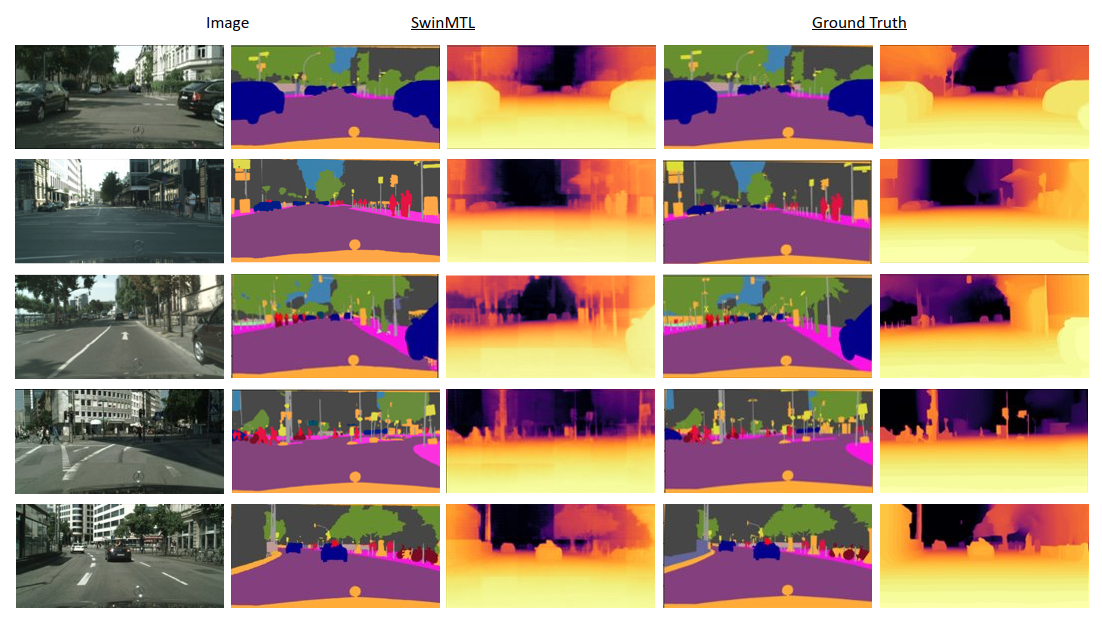

SwinMTL: A Shared Architecture for Simultaneous Depth Estimation and Semantic Segmentation from Monocular Camera Images

This paper presents an innovative multi-task learning framework that performs depth estimation and semantic segmentation simultaneously from a single monocular camera. The proposed approach is based on a shared encoder-decoder architecture that integrates several techniques to improve the accuracy of both the depth estimation and semantic segmentation tasks without compromising computational efficiency. The framework also incorporates an adversarial training component, a Wasserstein GAN with a critic network, to refine the model's predictions. The framework is evaluated on two datasets, the outdoor Cityscapes dataset and the indoor NYU Depth V2 dataset, and outperforms existing state-of-the-art methods in both segmentation and depth estimation. We also conduct ablation studies to analyze the contributions of individual components, including pre-training strategies, the inclusion of critics, logarithmic depth scaling, and advanced image augmentations, to provide a better understanding of the proposed framework. The accompanying source code is available at https://github.com/PardisTaghavi/SwinMTL.
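
Two ingredients named in the abstract, logarithmic depth scaling and a Wasserstein GAN critic, can be illustrated with the minimal pure-Python sketch below. It assumes that "logarithmic depth scaling" means regressing log-depth and exponentiating back to metric depth. It also uses the original WGAN weight-clipping formulation. The abstract does not say which Lipschitz constraint, loss weights, or critic inputs SwinMTL actually uses, so every function name and constant here (`to_log_depth`, `critic_loss`, `eps`, `c`, and so on) is hypothetical.

```python
import math
from typing import Sequence

# --- Logarithmic depth scaling (assumed form: predict log-depth, exponentiate back) ---

def to_log_depth(depth_m: Sequence[float], eps: float = 1e-6) -> list[float]:
    """Map positive metric depths to log space; eps guards against log(0)."""
    return [math.log(d + eps) for d in depth_m]


def from_log_depth(log_depth: Sequence[float], eps: float = 1e-6) -> list[float]:
    """Invert to_log_depth, returning metric depths."""
    return [math.exp(v) - eps for v in log_depth]


# --- Wasserstein GAN objectives with a critic (original WGAN formulation) ---

def _mean(xs: Sequence[float]) -> float:
    return sum(xs) / len(xs)


def critic_loss(scores_real: Sequence[float], scores_fake: Sequence[float]) -> float:
    """The critic maximizes E[D(real)] - E[D(fake)]; return the negative so it can be minimized."""
    return _mean(scores_fake) - _mean(scores_real)


def generator_adv_loss(scores_fake: Sequence[float]) -> float:
    """The predictor is pushed to raise the critic's score on its own outputs."""
    return -_mean(scores_fake)


def clip_weights(weights: Sequence[float], c: float = 0.01) -> list[float]:
    """Clip critic weights to [-c, c] so the critic stays K-Lipschitz; c is illustrative."""
    return [max(-c, min(c, w)) for w in weights]
```

In this reading, the "real" scores come from the critic applied to ground-truth maps and the "fake" scores from the critic applied to the network's predictions. During training, `generator_adv_loss` would typically be added with a small weight to the depth and segmentation losses. That weighting is not specified in the abstract.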

| Task | Dataset | Model | Metric Name | Metric Value | Global Rank |
|---|---|---|---|---|---|
| Monocular Depth Estimation | Cityscapes | SwinMTL | Absolute relative error (AbsRel) | 0.089 | #1 |
| Monocular Depth Estimation | Cityscapes | SwinMTL | RMSE | 5.481 | #1 |
| Monocular Depth Estimation | Cityscapes | SwinMTL | RMSE log | 0.139 | #1 |
| Monocular Depth Estimation | Cityscapes | SwinMTL | Square relative error (SqRel) | 1.051 | #1 |
| Semantic Segmentation | NYU Depth v2 | SwinMTL | Mean IoU | 58.14% | #4 |
| Multi-Task Learning | NYUv2 | SwinMTL | Mean IoU | 58.14% | #1 |
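
The table reports the standard depth-error metrics (lower is better) and mean IoU (higher is better). The sketch below computes them from their conventional definitions over flattened per-pixel lists, with d as the predicted depth and d* as the ground truth. It is not the authors' evaluation code. The helper names `depth_metrics` and `mean_iou`, the valid-pixel rule (ground truth > 0), the `min_depth` clamp, and `ignore_index=255` are all assumptions. The leaderboard protocols may also differ, for example in depth caps, crops, or class sets.

```python
import math
from typing import Sequence


def depth_metrics(pred: Sequence[float], gt: Sequence[float],
                  min_depth: float = 1e-3) -> dict[str, float]:
    """AbsRel, SqRel, RMSE and RMSE log over pixels with valid (positive) ground truth."""
    # Clamp predictions so log() is defined; skip pixels with no ground-truth depth.
    pairs = [(max(p, min_depth), g) for p, g in zip(pred, gt) if g > 0]
    if not pairs:
        raise ValueError("no pixels with valid ground-truth depth")
    n = len(pairs)
    abs_rel = sum(abs(p - g) / g for p, g in pairs) / n          # mean |d - d*| / d*
    sq_rel = sum((p - g) ** 2 / g for p, g in pairs) / n         # mean (d - d*)^2 / d*
    rmse = math.sqrt(sum((p - g) ** 2 for p, g in pairs) / n)    # sqrt(mean (d - d*)^2)
    rmse_log = math.sqrt(                                         # same, in log space
        sum((math.log(p) - math.log(g)) ** 2 for p, g in pairs) / n
    )
    return {"abs_rel": abs_rel, "sq_rel": sq_rel, "rmse": rmse, "rmse_log": rmse_log}


def mean_iou(pred: Sequence[int], gt: Sequence[int], num_classes: int,
             ignore_index: int = 255) -> float:
    """Mean over classes of TP / (TP + FP + FN); classes absent from both maps are skipped."""
    inter = [0] * num_classes
    union = [0] * num_classes
    for p, g in zip(pred, gt):
        if g == ignore_index:
            continue                      # unlabeled pixel: not scored
        union[g] += 1                     # pixel is a TP or an FN for its true class
        if p == g:
            inter[g] += 1
        elif 0 <= p < num_classes:
            union[p] += 1                 # wrong prediction is an FP for class p
    ious = [i / u for i, u in zip(inter, union) if u > 0]
    return sum(ious) / len(ious)
```

Skipping classes with an empty union avoids a 0/0 term for classes that appear in neither map. Some benchmarks instead accumulate counts over the whole dataset before dividing.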

Datasets: Cityscapes, NYUv2