SVSGAN: Singing Voice Separation via Generative Adversarial Network

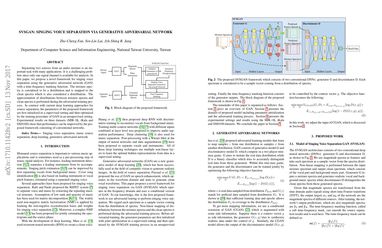

Separating two sources from an audio mixture is an important task with many applications. It is a challenging problem since only one signal channel is available for analysis. In this paper, we propose a novel framework for singing voice separation using a generative adversarial network (GAN) with a time-frequency masking function. The mixture spectra are treated as a distribution and mapped to the clean spectra, which are also treated as a distribution. The approximation of distributions between mixture spectra and clean spectra is performed during the adversarial training process. In contrast with current deep learning approaches for source separation, the parameters of the proposed framework are first initialized in a supervised setting and then optimized by the GAN training procedure in an unsupervised setting. Experimental results on three datasets (MIR-1K, iKala and DSD100) show that the proposed framework, which consists of conventional networks, can improve performance.
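
To make the idea of a time-frequency masking function concrete, here is a minimal NumPy sketch. The abstract does not give the exact form of the mask, so the soft ratio mask below is an assumption, and the function name `soft_mask_separate`, its parameters, and the random arrays standing in for network outputs are all hypothetical.

```python
import numpy as np


def soft_mask_separate(mixture_mag, vocal_est, accomp_est, eps=1e-8):
    """Split a mixture magnitude spectrogram using a soft ratio mask.

    Assumption: the mask is the ratio of the estimated vocal magnitude
    to the sum of both estimates. The abstract does not specify this.
    """
    vocal_est = np.abs(vocal_est)
    accomp_est = np.abs(accomp_est)
    # Mask values lie in [0, 1); eps avoids division by zero.
    mask = vocal_est / (vocal_est + accomp_est + eps)
    vocal = mask * mixture_mag
    accomp = (1.0 - mask) * mixture_mag
    return vocal, accomp


# Hypothetical stand-ins for a mixture spectrogram and raw network outputs,
# shaped (frequency bins, time frames).
rng = np.random.default_rng(0)
mixture = rng.random((513, 100))
raw_vocal = rng.random((513, 100))
raw_accomp = rng.random((513, 100))

vocal, accomp = soft_mask_separate(mixture, raw_vocal, raw_accomp)
```

With a mask of this kind, the two separated magnitudes always sum back to the mixture magnitude, so the raw estimates only decide how the energy in each time-frequency bin is shared between the sources.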
