Structured Chemistry Reasoning with Large Language Models

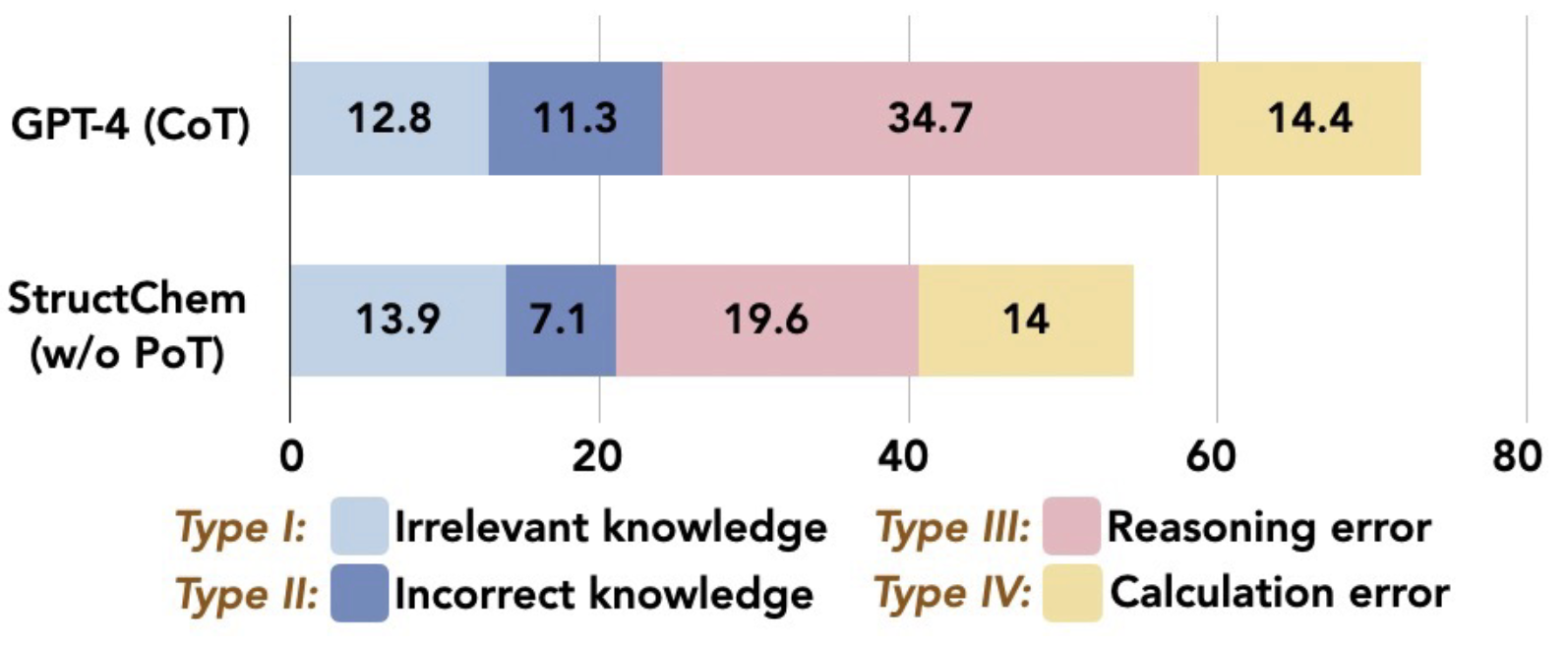

Large Language Models (LLMs) excel in diverse areas, yet struggle with complex scientific reasoning, especially in the field of chemistry. Different from the simple chemistry tasks (e.g., molecule classification) addressed in previous studies, complex chemistry problems require not only vast knowledge and precise calculation, but also compositional reasoning about rich dynamic interactions of different concepts (e.g., temperature changes). Our study shows that even advanced LLMs, like GPT-4, can fail easily in different ways. Interestingly, the errors often stem not from a lack of domain knowledge within the LLMs, but rather from the absence of an effective reasoning structure that guides the LLMs to elicit the right knowledge, incorporate the knowledge in step-by-step reasoning, and iteratively refine results for further improved quality. On this basis, we introduce StructChem, a simple yet effective prompting strategy that offers the desired guidance and substantially boosts the LLMs' chemical reasoning capability. Testing across four chemistry areas -- quantum chemistry, mechanics, physical chemistry, and kinetics -- StructChem substantially enhances GPT-4's performance, with up to 30\% peak improvement. Our analysis also underscores the unique difficulties of precise grounded reasoning in science with LLMs, highlighting a need for more research in this area. Code is available at \url{https://github.com/ozyyshr/StructChem}.

PDF Abstract

SciBench

SciBench