Story Ending Generation with Incremental Encoding and Commonsense Knowledge

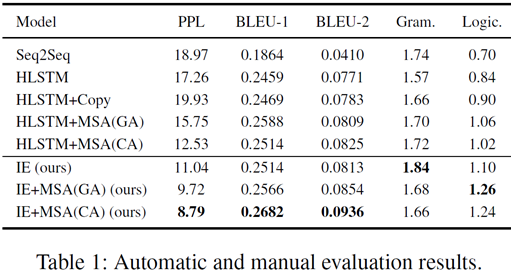

Generating a reasonable ending for a given story context, i.e., story ending generation, is a strong indication of story comprehension. This task requires not only understanding the context clues, which play an important role in planning the plot, but also handling implicit knowledge to produce a reasonable, coherent story. In this paper, we devise a novel model for story ending generation. The model adopts an incremental encoding scheme to represent context clues that span the story context. In addition, commonsense knowledge is applied through multi-source attention to facilitate story comprehension and thus help generate coherent and reasonable endings. By building context clues and using implicit knowledge, the model is able to produce reasonable story endings. Automatic and manual evaluation shows that our model can generate more reasonable story endings than state-of-the-art baselines.
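To make the idea of incremental encoding more concrete, the following is a minimal toy sketch and not the paper's implementation. It assumes that each sentence is encoded word by word, and that each step attends over the hidden states of the previous sentence so that context clues carry forward. The names `toy_embed`, `attend`, and `incremental_encode` are hypothetical, the recurrent update is a plain `tanh` sum instead of a learned LSTM cell, and the commonsense knowledge attention described in the abstract is omitted.

```python
import math
import random


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def toy_embed(word, dim):
    """Hypothetical stand-in for a learned embedding: a pseudo-random vector seeded by the word."""
    rng = random.Random(word)
    return [rng.uniform(-1.0, 1.0) for _ in range(dim)]


def attend(query, states):
    """Dot-product attention: weighted average of `states`, weighted by similarity to `query`."""
    if not states:
        # The first sentence has no preceding sentence to attend to.
        return [0.0] * len(query)
    scores = [sum(q * s for q, s in zip(query, state)) for state in states]
    weights = softmax(scores)
    return [sum(w * state[k] for w, state in zip(weights, states))
            for k in range(len(query))]


def incremental_encode(story, dim=4):
    """Encode sentences in order; each step also sees a context vector
    computed from the previous sentence's hidden states."""
    prev_states = []
    all_states = []
    for sentence in story:
        h = [0.0] * dim
        states = []
        for word in sentence:
            e = toy_embed(word, dim)
            c = attend(h, prev_states)
            # Assumption: a simple tanh update replaces the learned recurrent cell.
            h = [math.tanh(a + b + d) for a, b, d in zip(h, e, c)]
            states.append(h)
        all_states.append(states)
        prev_states = states
    return all_states


story = [
    ["tom", "went", "to", "the", "beach"],
    ["he", "forgot", "his", "sunscreen"],
]
encoded = incremental_encode(story, dim=4)
```

In this sketch, `encoded[1]` depends on `encoded[0]` through the attention context, which is the property the incremental scheme relies on. A decoder would then attend over the states of the last context sentence when generating the ending.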


ROCStories
