SPTS v2: Single-Point Scene Text Spotting

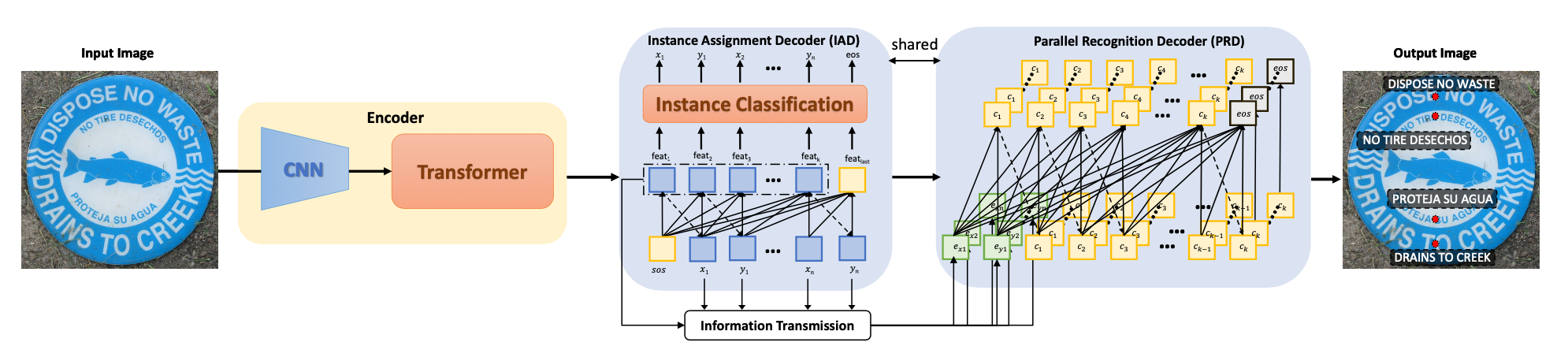

End-to-end scene text spotting has made significant progress owing to the intrinsic synergy between text detection and recognition. Previous methods commonly treat manual annotations such as horizontal rectangles, rotated rectangles, quadrangles, and polygons as a prerequisite, and these are much more expensive to produce than a single point. Our new framework, SPTS v2, allows us to train high-performing text-spotting models using single-point annotations. SPTS v2 retains the advantage of the auto-regressive Transformer: an Instance Assignment Decoder (IAD) sequentially predicts the center points of all text instances within a single sequence, while a Parallel Recognition Decoder (PRD) recognizes the text of those instances in parallel, which significantly reduces the required sequence length. The two decoders share the same parameters and are connected by a simple but effective information transmission process that passes gradients and information between them. Comprehensive experiments on various existing benchmark datasets demonstrate that SPTS v2 can outperform previous state-of-the-art single-point text spotters with fewer parameters while achieving 19$\times$ faster inference speed. Within the context of the SPTS v2 framework, our experiments suggest a potential preference for the single-point representation in scene text spotting over other representations. This opens a significant opportunity for scene text spotting applications beyond existing paradigms. Code is available at: https://github.com/Yuliang-Liu/SPTSv2.
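To make the sequence-length argument concrete, the following is a rough back-of-the-envelope sketch, not the authors' implementation. It assumes each center point costs two tokens (x and y) and that every transcription is padded to a fixed maximum length; the function names and the example numbers are hypothetical and chosen only for illustration.

```python
def single_sequence_steps(num_instances: int, max_text_len: int,
                          coords_per_point: int = 2) -> int:
    """Sequential decoding steps when each instance's point and its
    transcription are all emitted in one auto-regressive sequence."""
    return num_instances * (coords_per_point + max_text_len)


def two_decoder_steps(num_instances: int, max_text_len: int,
                      coords_per_point: int = 2) -> int:
    """Sequential decoding steps when center points are predicted one
    after another (IAD-style) and all transcriptions are then decoded
    in parallel across instances (PRD-style)."""
    point_steps = num_instances * coords_per_point
    # Parallel recognition: one step per character position, shared by
    # every instance, so the cost does not grow with num_instances.
    recognition_steps = max_text_len
    return point_steps + recognition_steps


# Hypothetical image with 10 text instances, transcriptions padded to 25 tokens.
print(single_sequence_steps(10, 25))  # 270
print(two_decoder_steps(10, 25))      # 45
```

Under these assumptions the recognition cost stays constant as the number of instances grows, which is the intuition behind the shorter sequence; the real speedup depends on model and hardware details this sketch does not capture.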

ICDAR 2013

Total-Text
