Speaker-Aware BERT for Multi-Turn Response Selection in Retrieval-Based Chatbots

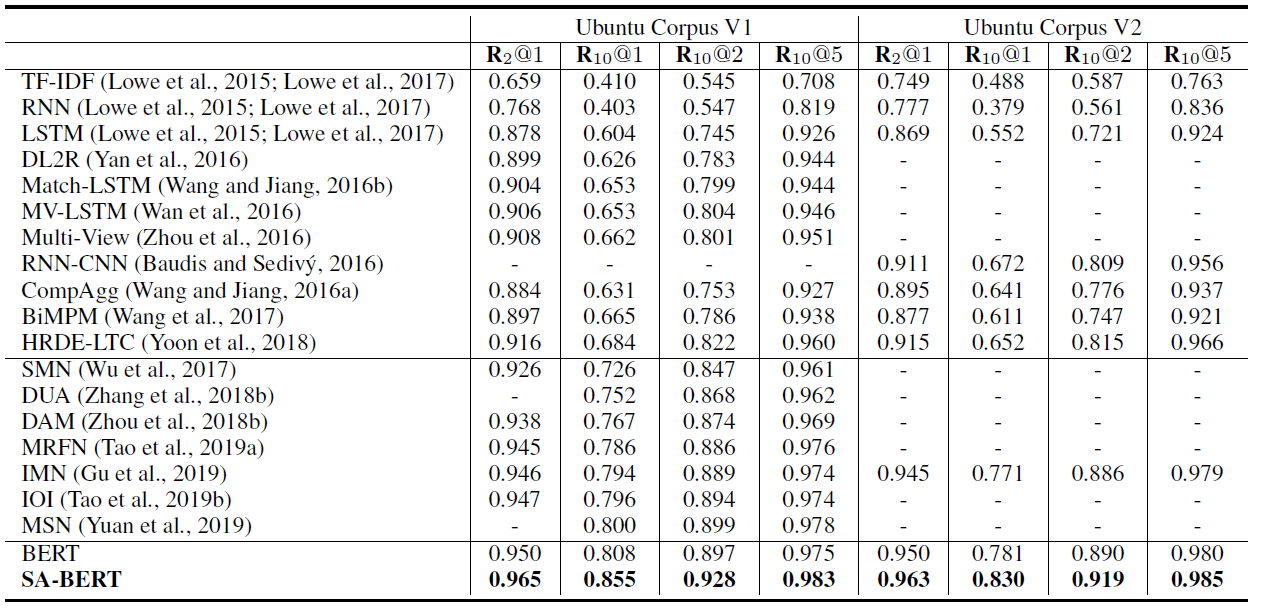

In this paper, we study the problem of employing pre-trained language models for multi-turn response selection in retrieval-based chatbots. A new model, named Speaker-Aware BERT (SA-BERT), is proposed in order to make the model aware of the speaker change information, which is an important and intrinsic property of multi-turn dialogues. Furthermore, a speaker-aware disentanglement strategy is proposed to tackle the entangled dialogues. This strategy selects a small number of most important utterances as the filtered context according to the speakers' information in them. Finally, domain adaptation is performed to incorporate the in-domain knowledge into pre-trained language models. Experiments on five public datasets show that our proposed model outperforms the present models on all metrics by large margins and achieves new state-of-the-art performances for multi-turn response selection.

PDF Abstract

UDC

UDC