Spatio-Temporal Tuples Transformer for Skeleton-Based Action Recognition

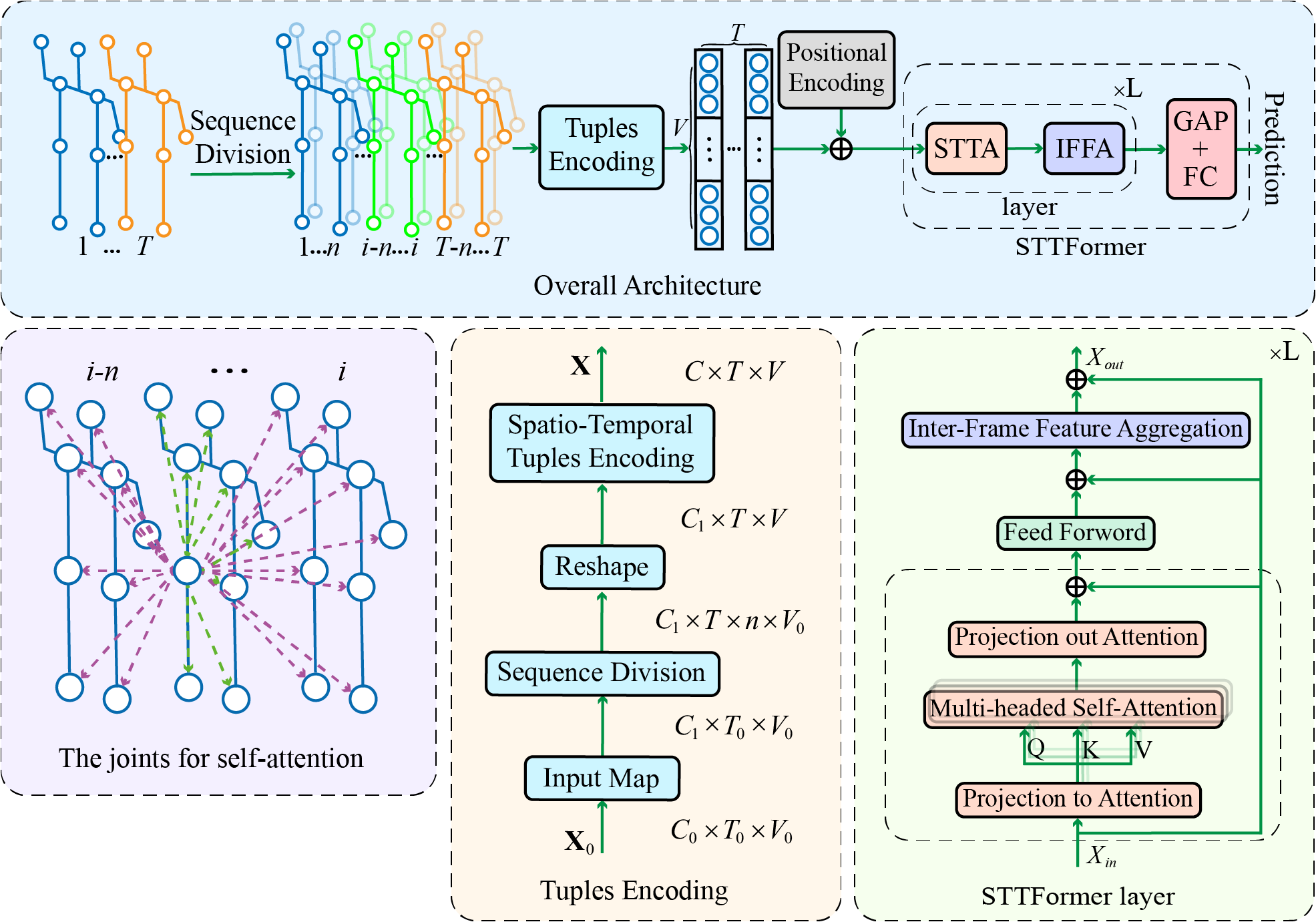

Capturing the dependencies between joints is critical in skeleton-based action recognition task. Transformer shows great potential to model the correlation of important joints. However, the existing Transformer-based methods cannot capture the correlation of different joints between frames, which the correlation is very useful since different body parts (such as the arms and legs in "long jump") between adjacent frames move together. Focus on this problem, A novel spatio-temporal tuples Transformer (STTFormer) method is proposed. The skeleton sequence is divided into several parts, and several consecutive frames contained in each part are encoded. And then a spatio-temporal tuples self-attention module is proposed to capture the relationship of different joints in consecutive frames. In addition, a feature aggregation module is introduced between non-adjacent frames to enhance the ability to distinguish similar actions. Compared with the state-of-the-art methods, our method achieves better performance on two large-scale datasets.

PDF Abstract

NTU RGB+D

NTU RGB+D