Soft Labels for Ordinal Regression

Ordinal regression attempts to solve classification problems in which categories are not independent, but rather follow a natural order. It is crucial to classify each class correctly while learning adequate interclass ordinal relationships. We present a simple and effective method that constrains these relationships among categories by seamlessly incorporating metric penalties into ground truth label representations. This encoding allows deep neural networks to automatically learn intraclass and interclass relationships without any explicit modification of the network architecture. Our method converts data labels into soft probability distributions that pair well with common categorical loss functions such as cross-entropy. We show that this approach is effective by using off-the-shelf classification and segmentation networks in four wildly different scenarios: image quality ranking, age estimation, horizon line regression, and monocular depth estimation. We demonstrate that our general-purpose method is very competitive with respect to specialized approaches, and adapts well to a variety of different network architectures and metrics.
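The abstract does not give the encoding formula, so the following is only a minimal sketch of one way to turn an ordinal label into a soft probability distribution. It assumes a softmax over negative metric penalties, with absolute rank difference as the default penalty. The names `soft_ordinal_labels`, `cross_entropy`, and `penalty` are hypothetical and chosen for illustration.

```python
import numpy as np


def soft_ordinal_labels(true_rank, class_ranks, penalty=lambda a, b: np.abs(a - b)):
    """Encode one ordinal label as a probability distribution over classes.

    Assumption (not stated in the abstract): each class receives mass
    proportional to exp(-penalty(true_rank, class_rank)), so classes that
    are closer to the true rank get more probability.
    """
    class_ranks = np.asarray(class_ranks, dtype=float)
    scores = -penalty(true_rank, class_ranks)
    scores = scores - scores.max()  # shift for numerical stability
    probs = np.exp(scores)
    return probs / probs.sum()


def cross_entropy(soft_target, logits):
    """Cross-entropy between a soft target and unnormalized network logits."""
    logits = np.asarray(logits, dtype=float)
    shifted = logits - logits.max()
    log_probs = shifted - np.log(np.exp(shifted).sum())  # log-softmax
    return float(-(soft_target * log_probs).sum())


ranks = np.arange(5)                    # five ordered classes: 0..4
target = soft_ordinal_labels(2, ranks)  # true class is rank 2

near_miss = np.eye(5)[3] * 5.0  # logits peaked one rank away from the truth
far_miss = np.eye(5)[0] * 5.0   # logits peaked two ranks away from the truth
loss_near = cross_entropy(target, near_miss)
loss_far = cross_entropy(target, far_miss)
```

Under these assumptions, a prediction that misses by one rank incurs a smaller loss than one that misses by two, which is the ordinal behaviour a one-hot target cannot express. Swapping in a different `penalty` (for example, a squared difference) changes how sharply the distribution concentrates around the true rank.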

Cityscapes

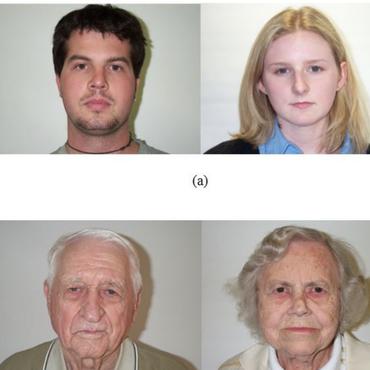

Adience

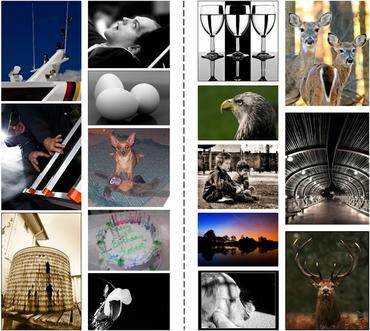

Image Aesthetics dataset