Sim2Real Bilevel Adaptation for Object Surface Classification using Vision-Based Tactile Sensors

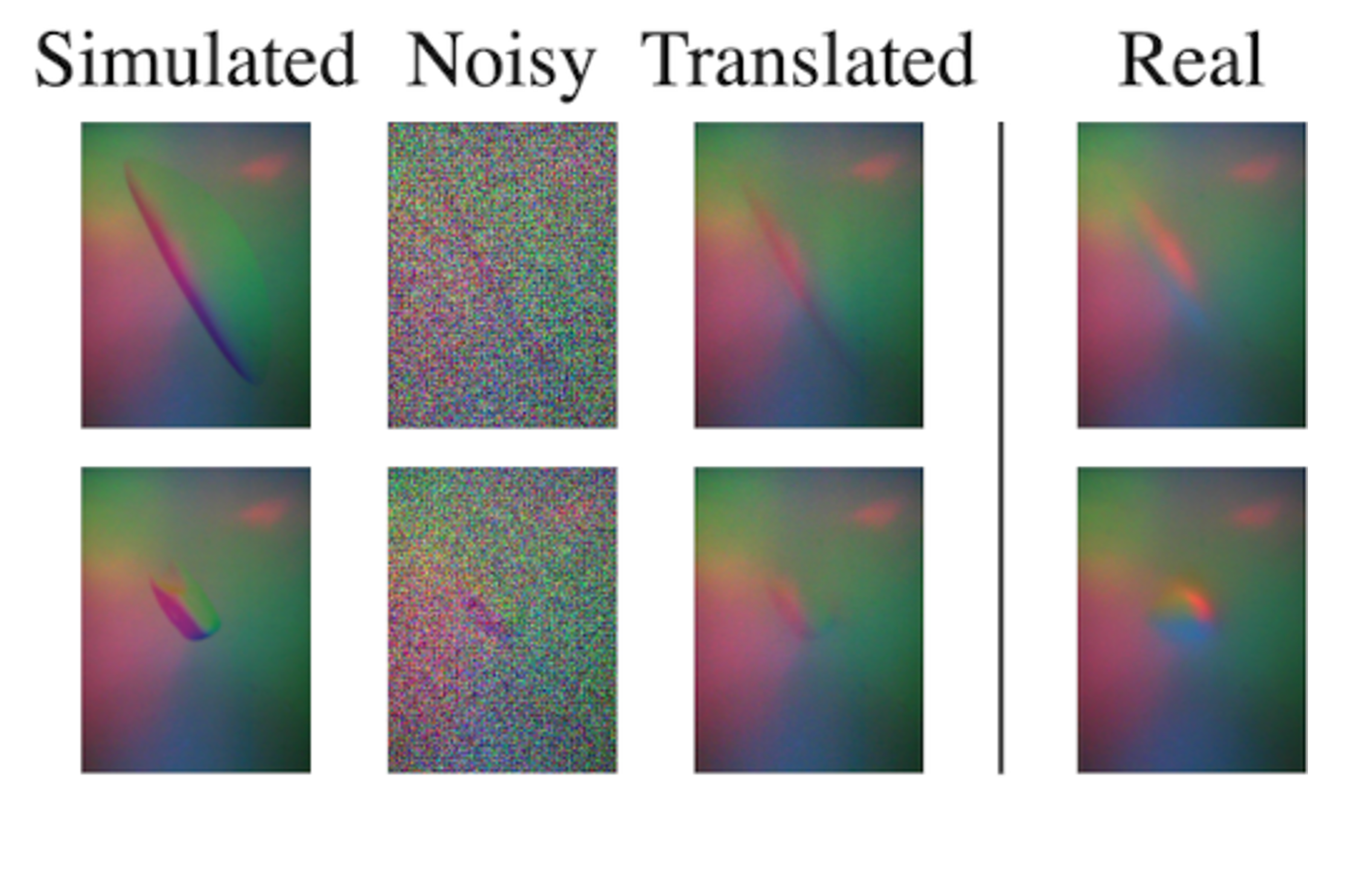

In this paper, we address the Sim2Real gap in the field of vision-based tactile sensors for classifying object surfaces. We train a Diffusion Model to bridge this gap using a relatively small dataset of real-world images randomly collected from unlabeled everyday objects via the DIGIT sensor. Subsequently, we employ a simulator to generate images by uniformly sampling the surface of objects from the YCB Model Set. These simulated images are then translated into the real domain using the Diffusion Model and automatically labeled to train a classifier. During this training, we further align features of the two domains using an adversarial procedure. Our evaluation is conducted on a dataset of tactile images obtained from a set of ten 3D printed YCB objects. The results reveal a total accuracy of 81.9%, a significant improvement compared to the 34.7% achieved by the classifier trained solely on simulated images. This demonstrates the effectiveness of our approach. We further validate our approach using the classifier on a 6D object pose estimation task from tactile data.

PDF Abstract