Shot2Story20K: A New Benchmark for Comprehensive Understanding of Multi-shot Videos

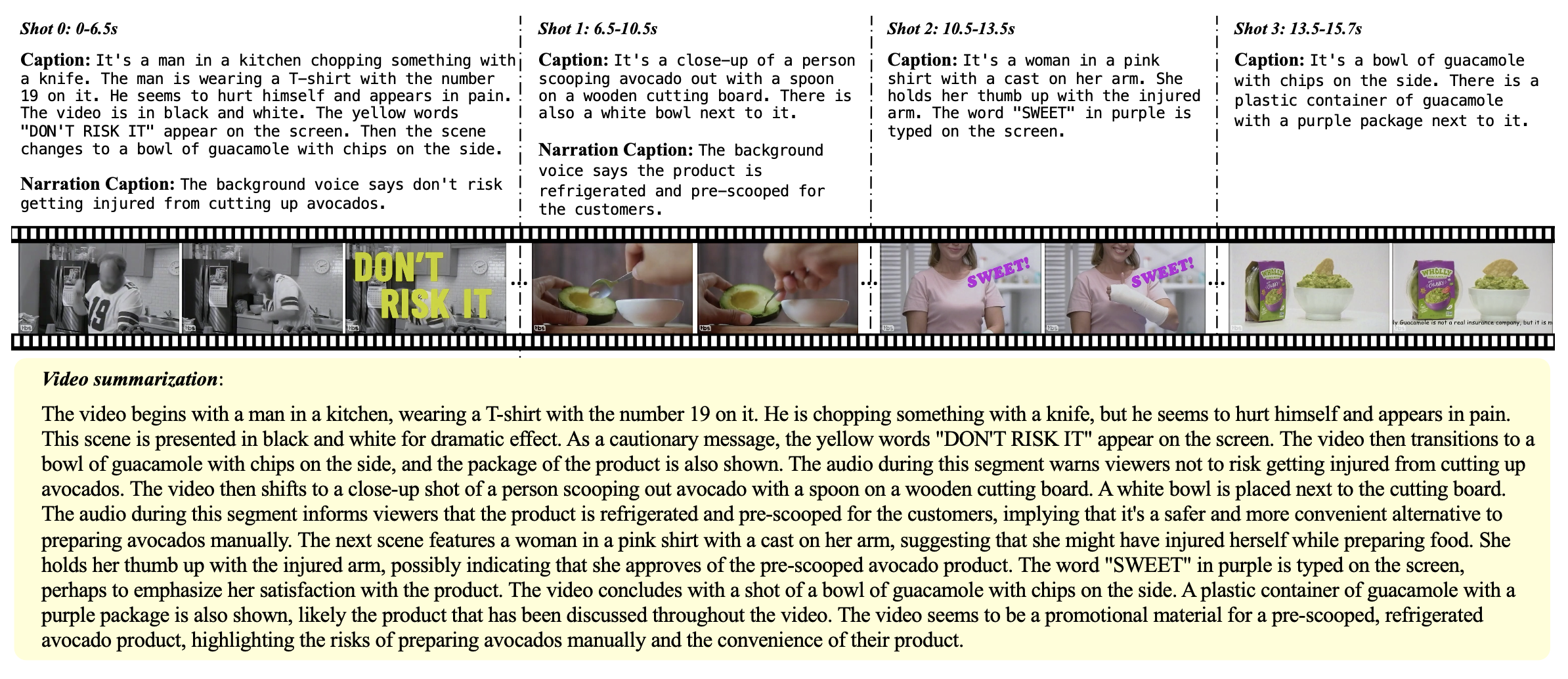

A short clip of video may contain progression of multiple events and an interesting story line. A human need to capture both the event in every shot and associate them together to understand the story behind it. In this work, we present a new multi-shot video understanding benchmark Shot2Story20K with detailed shot-level captions and comprehensive video summaries. To facilitate better semantic understanding of videos, we provide captions for both visual signals and human narrations. We design several distinct tasks including single-shot video and narration captioning, multi-shot video summarization, and video retrieval with shot descriptions. Preliminary experiments show some challenges to generate a long and comprehensive video summary. Nevertheless, the generated imperfect summaries can already significantly boost the performance of existing video understanding tasks such as video question-answering, promoting an under-explored setting of video understanding with detailed summaries.

PDF AbstractCode

Datasets

| Task | Dataset | Model | Metric Name | Metric Value | Global Rank | Benchmark |

|---|---|---|---|---|---|---|

| Zero-Shot Video Question Answer | MSRVTT-QA | SUM-shot+Vicuna | Accuracy | 56.8 | # 10 | |

| video narration captioning | Shot2Story20K | Ours | METEOR | 24.8 | # 1 | |

| ROUGE | 39 | # 1 | ||||

| BLEU-4 | 18.8 | # 1 | ||||

| CIDEr | 168.7 | # 1 | ||||

| Video Captioning | Shot2Story20K | Ours | CIDEr | 37.4 | # 1 | |

| METEOR | 16.2 | # 1 | ||||

| ROUGE | 29.6 | # 1 | ||||

| BLEU-4 | 10.7 | # 1 | ||||

| Video Summarization | Shot2Story20K | SUM-shot | CIDEr | 8.6 | # 1 | |

| BLEU-4 | 11.7 | # 1 | ||||

| METEOR | 19.7 | # 1 | ||||

| ROUGE | 26.8 | # 1 |

Shot2Story20K

Shot2Story20K

MSR-VTT

MSR-VTT

ActivityNet-QA

ActivityNet-QA