Sharpness-Aware Minimization for Efficiently Improving Generalization

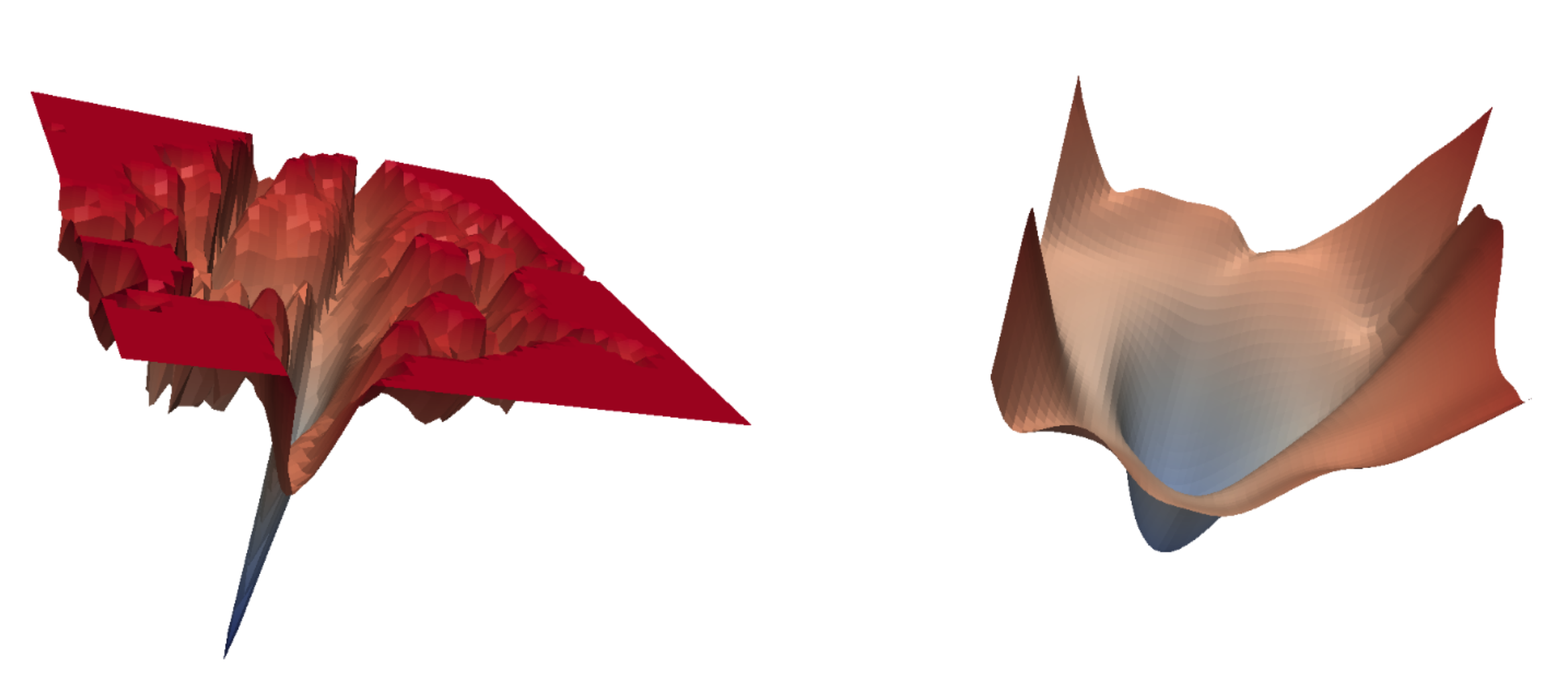

In today's heavily overparameterized models, the value of the training loss provides few guarantees on model generalization ability. Indeed, optimizing only the training loss value, as is commonly done, can easily lead to suboptimal model quality. Motivated by prior work connecting the geometry of the loss landscape and generalization, we introduce a novel, effective procedure for instead simultaneously minimizing loss value and loss sharpness. In particular, our procedure, Sharpness-Aware Minimization (SAM), seeks parameters that lie in neighborhoods having uniformly low loss; this formulation results in a min-max optimization problem on which gradient descent can be performed efficiently. We present empirical results showing that SAM improves model generalization across a variety of benchmark datasets (e.g., CIFAR-10, CIFAR-100, ImageNet, finetuning tasks) and models, yielding novel state-of-the-art performance for several. Additionally, we find that SAM natively provides robustness to label noise on par with that provided by state-of-the-art procedures that specifically target learning with noisy labels. We open source our code at https://github.com/google-research/sam.
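The min-max formulation mentioned in the abstract can be illustrated with a small sketch. It assumes the commonly described two-step form of SAM: approximate the worst-case perturbation inside an L2 ball of radius `rho` by a normalized gradient step, then descend using the gradient evaluated at that perturbed point. The function name `sam_step`, the values of `lr` and `rho`, and the toy quadratic loss are illustrative assumptions, not taken from this page or from the released code.

```python
import numpy as np


def sam_step(w, loss_grad, lr=0.05, rho=0.05, eps=1e-12):
    """One sharpness-aware update (illustrative sketch, not the reference code).

    w         : current parameters, 1-D array
    loss_grad : callable returning the training-loss gradient at a point
    lr, rho   : step size and neighborhood radius (assumed values)
    """
    g = loss_grad(w)
    # Inner maximization, first-order approximation: move a distance rho
    # along the normalized gradient direction.
    e_hat = rho * g / (np.linalg.norm(g) + eps)
    # Outer minimization: descend using the gradient at the perturbed point.
    g_sam = loss_grad(w + e_hat)
    return w - lr * g_sam


# Hypothetical toy loss L(w) = 0.5 * w^T A w with one sharp and one flat direction.
A = np.diag([10.0, 0.1])


def toy_loss(w):
    return 0.5 * w @ A @ w


def toy_grad(w):
    return A @ w


w = np.array([1.0, 1.0])
for _ in range(100):
    w = sam_step(w, toy_grad)
print("final loss:", toy_loss(w))
```

Setting `rho=0.0` reduces the update to plain gradient descent, which makes the extra cost of the sketch easy to see: one additional gradient evaluation per step.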

ICLR 2021

Results from the Paper

Ranked #1 on Image Classification on CIFAR-100 (using extra training data)

ImageNet

CIFAR-100

SVHN

Fashion-MNIST

Oxford 102 Flower

Stanford Cars

Food-101

FGVC-Aircraft

Birdsnap