Shape Driven Kernel Adaptation in Convolutional Neural Network for Robust Facial Traits Recognition

One key challenge of facial traits recognition is the large non-rigid appearance variation caused by irrelevant real-world factors, such as viewpoint and expression changes. In this paper, we explore how shape information, i.e., facial landmark positions, can be explicitly incorporated into the popular Convolutional Neural Network (CNN) architecture to disentangle such irrelevant non-rigid appearance variations. First, instead of using fixed kernels, we propose a kernel adaptation method that dynamically determines the convolutional kernels according to the distribution of facial landmarks, which helps the network learn more robust features. Second, motivated by the intuition that different local facial regions may demand different adaptation functions, we further propose a tree-structured convolutional architecture to hierarchically fuse multiple local adaptive CNN subnetworks. Comprehensive experiments on the WebFace, Morph II and MultiPIE databases validate the effectiveness of the proposed kernel adaptation method and tree-structured convolutional architecture for facial traits recognition tasks, including identity, age and gender classification. On all of these tasks, the proposed architecture consistently achieves state-of-the-art performance.
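
The kernel adaptation idea can be illustrated with a minimal NumPy sketch. The abstract does not specify the form of the adaptation function, so the linear map from landmark coordinates to kernel weights used here is an assumption made only for illustration, as are the names `adapt_kernels`, `conv2d_valid`, `W_adapt` and `b_adapt` and all of the sizes. The sketch covers a single adaptive convolution and does not attempt the tree-structured fusion of local subnetworks.

```python
import numpy as np

def adapt_kernels(landmarks, W_adapt, b_adapt, out_ch, in_ch, k):
    """Map landmark positions to a bank of convolution kernels.

    Assumption: a linear adaptation function; the paper's actual
    function is not given in the abstract.
    """
    shape_vec = landmarks.reshape(-1)        # (2 * n_landmarks,)
    flat = W_adapt @ shape_vec + b_adapt     # (out_ch * in_ch * k * k,)
    return flat.reshape(out_ch, in_ch, k, k)

def conv2d_valid(x, kernels):
    """Plain 'valid' cross-correlation.

    x: (in_ch, height, width); kernels: (out_ch, in_ch, k, k).
    """
    out_ch, _, k, _ = kernels.shape
    height, width = x.shape[1:]
    out = np.zeros((out_ch, height - k + 1, width - k + 1))
    for i in range(height - k + 1):
        for j in range(width - k + 1):
            patch = x[:, i:i + k, j:j + k]
            # Contract over (in_ch, k, k) to get one value per output channel.
            out[:, i, j] = np.tensordot(kernels, patch, axes=([1, 2, 3], [0, 1, 2]))
    return out

# Hypothetical sizes and random parameters, for illustration only.
rng = np.random.default_rng(0)
n_landmarks, in_ch, out_ch, k = 5, 1, 4, 3
n_weights = out_ch * in_ch * k * k
W_adapt = rng.normal(scale=0.1, size=(n_weights, 2 * n_landmarks))
b_adapt = rng.normal(scale=0.1, size=n_weights)

image = rng.normal(size=(in_ch, 8, 8))
shape_a = rng.uniform(size=(n_landmarks, 2))                    # one landmark configuration
shape_b = shape_a + 0.1 * rng.normal(size=(n_landmarks, 2))     # a perturbed configuration

feat_a = conv2d_valid(image, adapt_kernels(shape_a, W_adapt, b_adapt, out_ch, in_ch, k))
feat_b = conv2d_valid(image, adapt_kernels(shape_b, W_adapt, b_adapt, out_ch, in_ch, k))
```

In this sketch the same image is filtered with different kernels when the landmark configuration changes, which is the sense in which the kernels are determined dynamically rather than fixed. In a trained network the parameters of the adaptation function would be learned jointly with the rest of the model.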

Multi-PIE

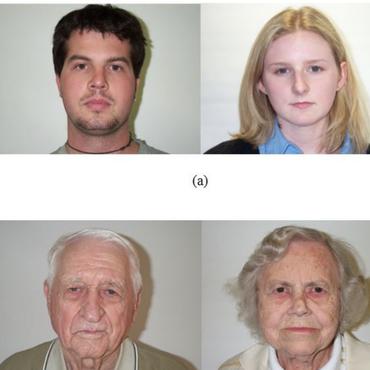

MORPH
