Self-Supervised Learning for Fine-Grained Visual Categorization

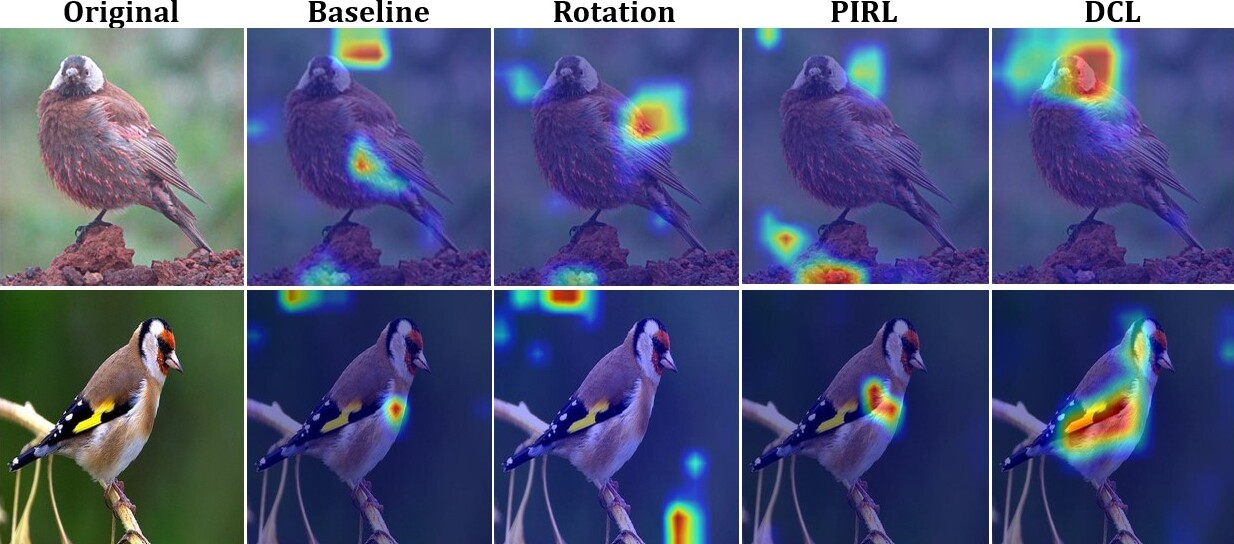

Recent research in self-supervised learning (SSL) has shown that it can learn useful semantic representations from images for classification tasks. In this work, we study the usefulness of SSL for Fine-Grained Visual Categorization (FGVC). FGVC aims to distinguish objects of visually similar subcategories within a general category. The small inter-class but large intra-class variations within the dataset make it a challenging task. The limited availability of annotated labels for such fine-grained data motivates the use of SSL, where additional supervision can boost learning without the cost of extra annotations. Our baseline achieves $86.36\%$ top-1 classification accuracy on the CUB-200-2011 dataset by using random crop augmentation during training and center crop augmentation during testing. We explore the usefulness of various pretext tasks, specifically rotation, pretext invariant representation learning (PIRL), and deconstruction and construction learning (DCL), for FGVC. Rotation as an auxiliary task encourages the model to learn global features and diverts it from focusing on subtle details. PIRL, which uses jigsaw patches, attempts to focus on discriminative local regions but struggles to localize them accurately. DCL helps in learning local discriminative features and outperforms the baseline, achieving $87.41\%$ top-1 accuracy. Deconstruction learning forces the model to focus on local object parts, while reconstruction learning helps it learn the correlation between the parts. We perform extensive experiments to support our findings. Our code is available at https://github.com/mmaaz60/ssl_for_fgvc.
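To make the rotation pretext task concrete, the following is a minimal sketch of how rotation labels can be generated for an auxiliary classification head. It assumes the common four-way formulation (rotations of 0, 90, 180, and 270 degrees), which the abstract does not specify, and it uses NumPy rather than the framework in the authors' repository. The function name `make_rotation_batch` is hypothetical and is not taken from that code.

```python
import numpy as np


def make_rotation_batch(images):
    """Build a rotation pretext batch (illustrative helper, not the authors' code).

    images: array of shape (N, H, W, C) with H == W.
    Returns (rotated, labels), where rotated has shape (4N, H, W, C) and
    labels[i] in {0, 1, 2, 3} is the number of 90-degree turns applied.
    """
    if images.shape[1] != images.shape[2]:
        raise ValueError("square images are assumed so all rotations share a shape")
    # Rotate the whole batch in the spatial plane (axes 1 and 2) for k = 0..3.
    rotated = [np.rot90(images, k=k, axes=(1, 2)) for k in range(4)]
    # Copies are stacked block by block, so labels repeat N times per rotation.
    labels = np.repeat(np.arange(4), len(images))
    return np.concatenate(rotated, axis=0), labels


# Example: two random 8x8 RGB "images" yield eight rotated samples.
batch = np.random.rand(2, 8, 8, 3).astype(np.float32)
rotated_batch, rotation_labels = make_rotation_batch(batch)
```

In a setup like the one described, the rotation labels would supervise an auxiliary head alongside the fine-grained class labels. Because predicting the rotation mostly depends on the overall object layout, this is consistent with the observation that the task pushes the model toward global rather than subtle local features.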


CUB-200-2011
