Self-Challenging Improves Cross-Domain Generalization

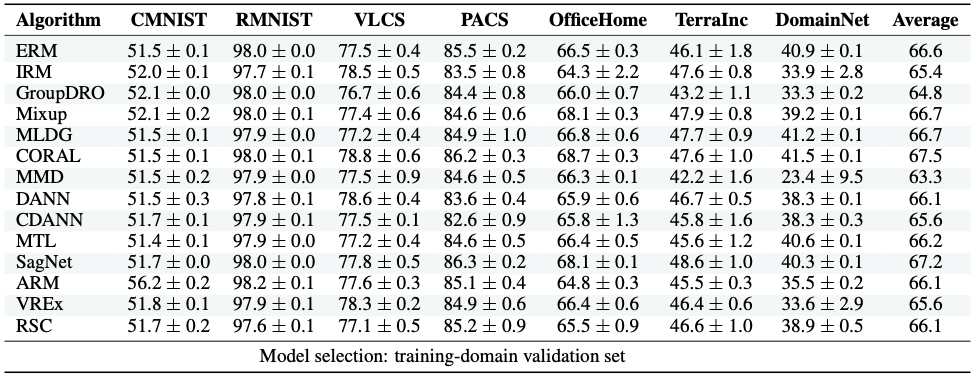

Convolutional Neural Networks (CNN) conduct image classification by activating dominant features that correlated with labels. When the training and testing data are under similar distributions, their dominant features are similar, which usually facilitates decent performance on the testing data. The performance is nonetheless unmet when tested on samples from different distributions, leading to the challenges in cross-domain image classification. We introduce a simple training heuristic, Representation Self-Challenging (RSC), that significantly improves the generalization of CNN to the out-of-domain data. RSC iteratively challenges (discards) the dominant features activated on the training data, and forces the network to activate remaining features that correlates with labels. This process appears to activate feature representations applicable to out-of-domain data without prior knowledge of new domain and without learning extra network parameters. We present theoretical properties and conditions of RSC for improving cross-domain generalization. The experiments endorse the simple, effective and architecture-agnostic nature of our RSC method.

PDF Abstract ECCV 2020 PDF ECCV 2020 Abstract

Office-Home

Office-Home

PACS

PACS

ImageNet-Sketch

ImageNet-Sketch