SegViT: Semantic Segmentation with Plain Vision Transformers

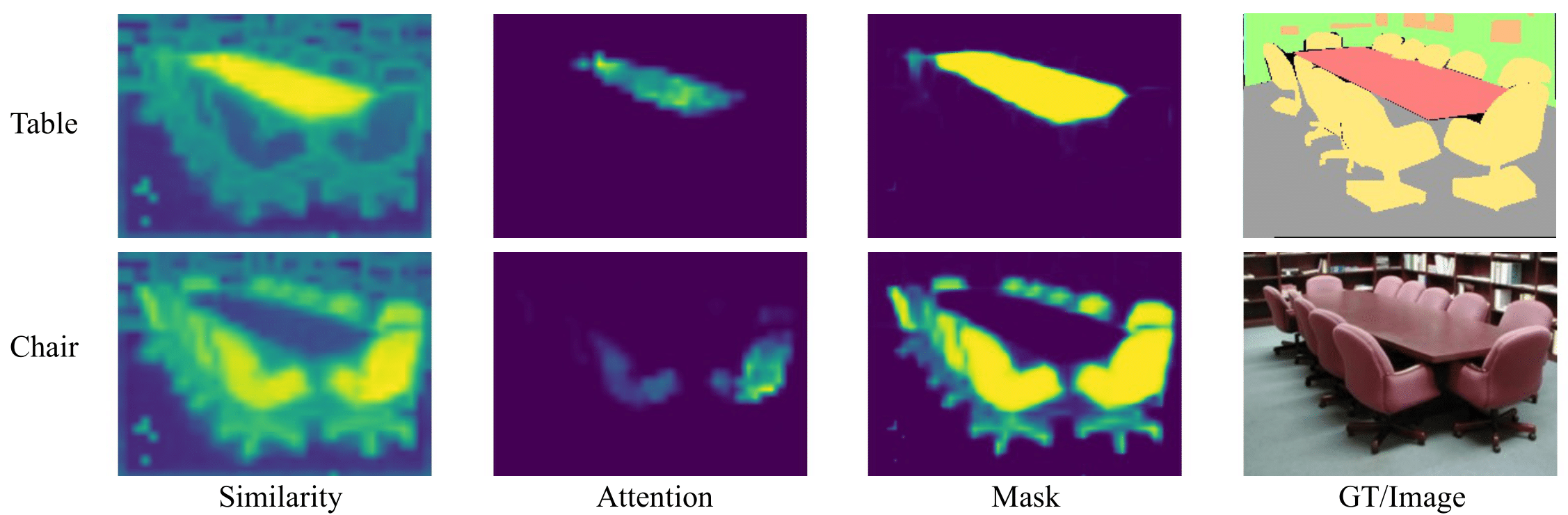

We explore the capability of plain Vision Transformers (ViTs) for semantic segmentation and propose the SegVit. Previous ViT-based segmentation networks usually learn a pixel-level representation from the output of the ViT. Differently, we make use of the fundamental component -- attention mechanism, to generate masks for semantic segmentation. Specifically, we propose the Attention-to-Mask (ATM) module, in which the similarity maps between a set of learnable class tokens and the spatial feature maps are transferred to the segmentation masks. Experiments show that our proposed SegVit using the ATM module outperforms its counterparts using the plain ViT backbone on the ADE20K dataset and achieves new state-of-the-art performance on COCO-Stuff-10K and PASCAL-Context datasets. Furthermore, to reduce the computational cost of the ViT backbone, we propose query-based down-sampling (QD) and query-based up-sampling (QU) to build a Shrunk structure. With the proposed Shrunk structure, the model can save up to $40\%$ computations while maintaining competitive performance.

PDF Abstract

ADE20K

ADE20K

PASCAL Context

PASCAL Context

COCO-Stuff

COCO-Stuff