Seeing Under the Cover: A Physics Guided Learning Approach for In-Bed Pose Estimation

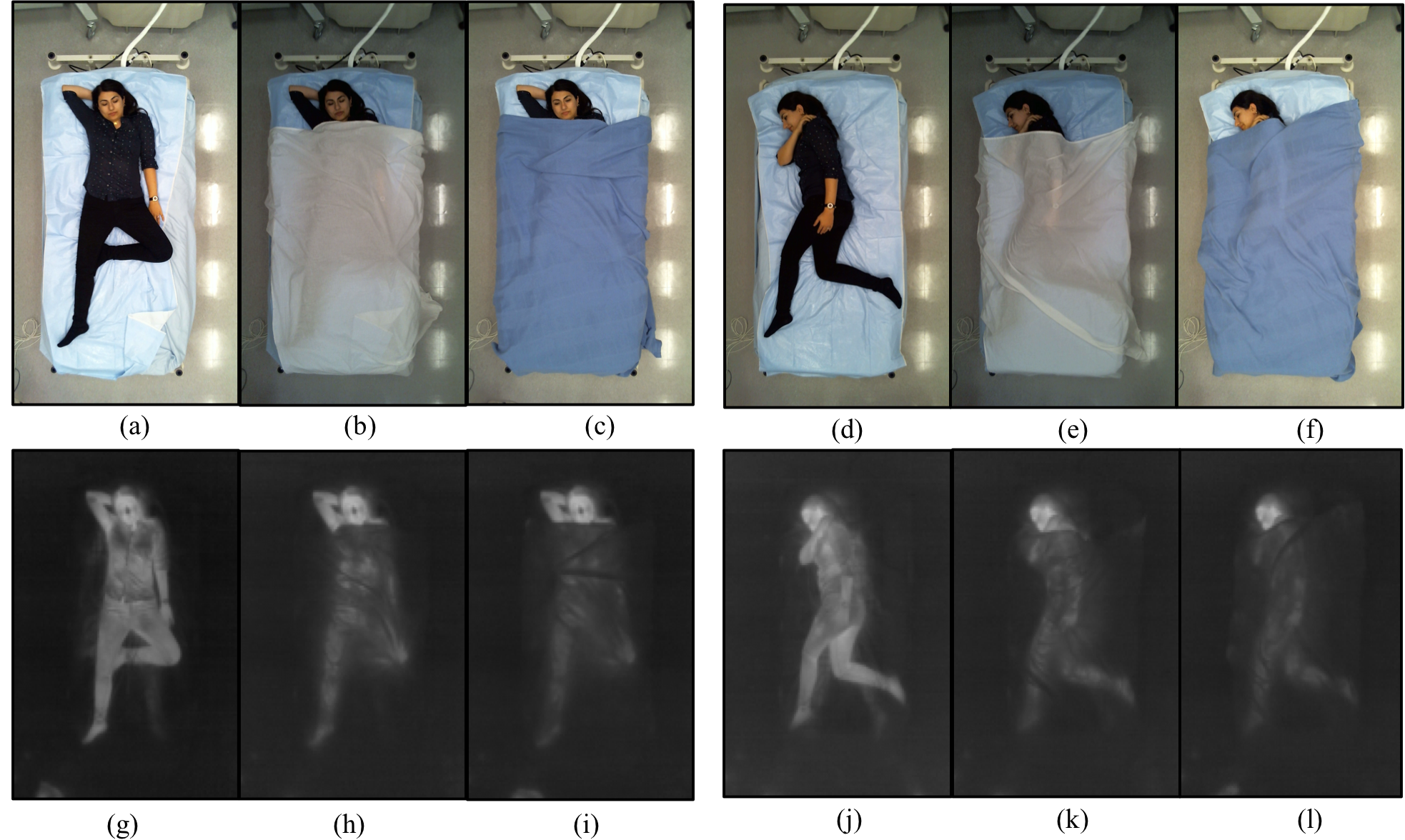

Human in-bed pose estimation has great practical value in medical and healthcare applications, yet it still relies mainly on expensive pressure mapping (PM) solutions. In this paper, we introduce a novel physics-inspired, vision-based approach that addresses the challenging issues associated with in-bed pose estimation, including monitoring a fully covered person in complete darkness. We reformulate the problem using our proposed Under the Cover Imaging via Thermal Diffusion (UCITD) method, which uses a long-wavelength IR technique to capture high-resolution pose information of the body even when it is fully covered. We propose a physical hyperparameter concept through which we obtain high-quality ground-truth pose labels in different modalities. We also release a fully annotated in-bed pose dataset, Simultaneously-collected multimodal Lying Pose (SLP), whose size is of the same order of magnitude as most existing large-scale human pose datasets, to support the training and evaluation of complex models. A network trained from scratch on SLP and tested in two diverse settings, one in a living room and the other in a hospital room, achieved pose estimation performance of 99.5% and 95.7%, respectively, under the PCK0.2 standard. Moreover, in a multi-factor comparison with a state-of-the-art PM-based in-bed pose monitoring solution, our solution showed significant superiority in all practical aspects: it is 60 times cheaper and 300 times smaller, while offering higher pose recognition granularity and accuracy.
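For readers unfamiliar with the PCK0.2 figure quoted above, the following is a minimal sketch of how a "percentage of correct keypoints" score is commonly computed: a predicted joint counts as correct when its distance to the ground-truth joint is at most 0.2 times a per-pose reference length. The function name `pck`, the `ref_lengths` parameter, and the toy coordinates are illustrative assumptions; the abstract does not state which reference length the authors normalize by, so the caller supplies it here.

```python
import math

def pck(pred, gt, ref_lengths, alpha=0.2):
    """Fraction of joints whose error is within alpha * reference length.

    pred, gt: lists of poses; each pose is a list of (x, y) joints.
    ref_lengths: one normalization length per pose (an assumption here;
    the source does not specify the normalization used).
    """
    correct = 0
    total = 0
    for p_pose, g_pose, ref in zip(pred, gt, ref_lengths):
        for (px, py), (gx, gy) in zip(p_pose, g_pose):
            dist = math.hypot(px - gx, py - gy)
            correct += dist <= alpha * ref  # True counts as 1
            total += 1
    return correct / total if total else 0.0

# Toy data (hypothetical): one pose with four joints, reference length 10,
# so the acceptance threshold is 0.2 * 10 = 2.0 pixels.
gt = [[(0.0, 0.0), (10.0, 0.0), (10.0, 10.0), (0.0, 10.0)]]
pred = [[(1.0, 0.0), (10.0, 1.5), (13.0, 10.0), (0.0, 10.0)]]
score = pck(pred, gt, ref_lengths=[10.0])
print(score)
```

In this toy case three of the four joints fall within the 2.0-pixel threshold, so the score is 0.75. Reported numbers such as 99.5% would be the same ratio taken over every joint in a test set.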

