Scaling Equilibrium Propagation to Deep ConvNets by Drastically Reducing its Gradient Estimator Bias

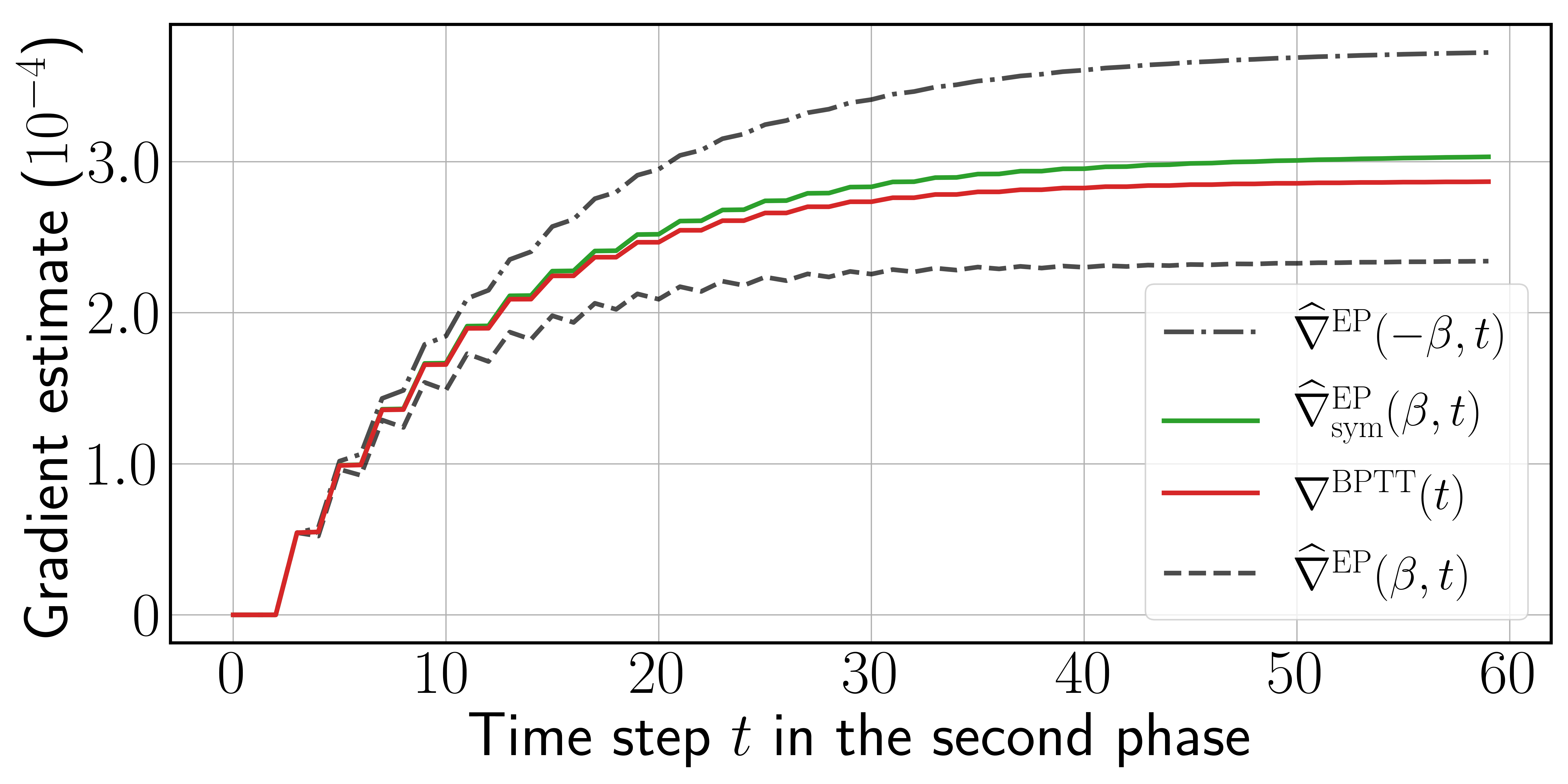

Equilibrium Propagation (EP) is a biologically-inspired algorithm for convergent RNNs with a local learning rule that comes with strong theoretical guarantees. The parameter updates of the neural network during the credit assignment phase have been shown mathematically to approach the gradients provided by Backpropagation Through Time (BPTT) when the network is infinitesimally nudged toward its target. In practice, however, training a network with the gradient estimates provided by EP does not scale to visual tasks harder than MNIST. In this work, we show that a bias in the gradient estimate of EP, inherent in the use of finite nudging, is responsible for this phenomenon, and that cancelling it allows deep ConvNets to be trained by EP. We show that this bias can be greatly reduced by using symmetric nudging (one positive nudging phase and one negative). We also generalize previous EP equations to the case of cross-entropy loss (as opposed to squared error). As a result of these advances, we achieve a test error of 11.7% on CIFAR-10 by EP, which approaches that achieved by BPTT and is a major improvement over the standard EP approach with same-sign nudging, which gives 86% test error. We also apply these techniques to train an architecture with asymmetric forward and backward connections, yielding a 13.2% test error. These results highlight EP as a compelling biologically-plausible approach to computing error gradients in deep neural networks.
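To make the role of finite nudging concrete, the following is a minimal sketch on a toy scalar model, not the paper's ConvNet setup. Everything in it is an assumption chosen for illustration: the energy `E(s) = 0.5*s**2 - theta*x*s`, the squared-error loss, the energy-based sign convention for the EP estimate, and all function names. In this toy case the equilibrium state has a closed form, so the one-sided estimate (free phase plus one nudged phase) and the symmetric estimate (nudged phases at `+beta` and `-beta`) can be compared directly against the exact gradient of the loss.

```python
# Toy illustration (assumed model, not the paper's architecture):
#   energy  E(s)   = 0.5*s**2 - theta*x*s      -> free equilibrium s = theta*x
#   loss    l(s)   = 0.5*(s - y)**2
#   nudged  F(s)   = E(s) + beta*l(s)
# Requires |beta| < 1 so the nudged equilibrium is well defined.

def equilibrium(theta, x, y, beta):
    """Stationary point of F, solving s - theta*x + beta*(s - y) = 0."""
    return (theta * x + beta * y) / (1.0 + beta)

def dE_dtheta(s, x):
    """Partial derivative of the energy with respect to theta at state s."""
    return -x * s

def ep_one_sided(theta, x, y, beta):
    """EP estimate from a free phase (beta = 0) and one nudged phase (+beta)."""
    s_free = equilibrium(theta, x, y, 0.0)
    s_nudged = equilibrium(theta, x, y, beta)
    return (dE_dtheta(s_nudged, x) - dE_dtheta(s_free, x)) / beta

def ep_symmetric(theta, x, y, beta):
    """EP estimate from two nudged phases of opposite sign (+beta and -beta)."""
    s_pos = equilibrium(theta, x, y, beta)
    s_neg = equilibrium(theta, x, y, -beta)
    return (dE_dtheta(s_pos, x) - dE_dtheta(s_neg, x)) / (2.0 * beta)

def true_gradient(theta, x, y):
    """Exact d l(s_free) / d theta, with s_free = theta*x."""
    return (theta * x - y) * x

if __name__ == "__main__":
    theta, x, y = 0.5, 2.0, 3.0
    exact = true_gradient(theta, x, y)
    for beta in (0.5, 0.1, 0.01):
        one = ep_one_sided(theta, x, y, beta)
        sym = ep_symmetric(theta, x, y, beta)
        print(f"beta={beta}: one-sided error={abs(one - exact):.5f}, "
              f"symmetric error={abs(sym - exact):.5f}")
```

In this toy model the one-sided estimate equals the exact gradient scaled by `1/(1 + beta)`, so its error shrinks only linearly in `beta`, while the symmetric estimate is scaled by `1/(1 - beta**2)` and its error shrinks quadratically. This mirrors the difference between a forward and a central finite difference, and is only meant to illustrate why symmetric nudging can reduce the bias.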

CIFAR-10
