Sampling is Matter: Point-guided 3D Human Mesh Reconstruction

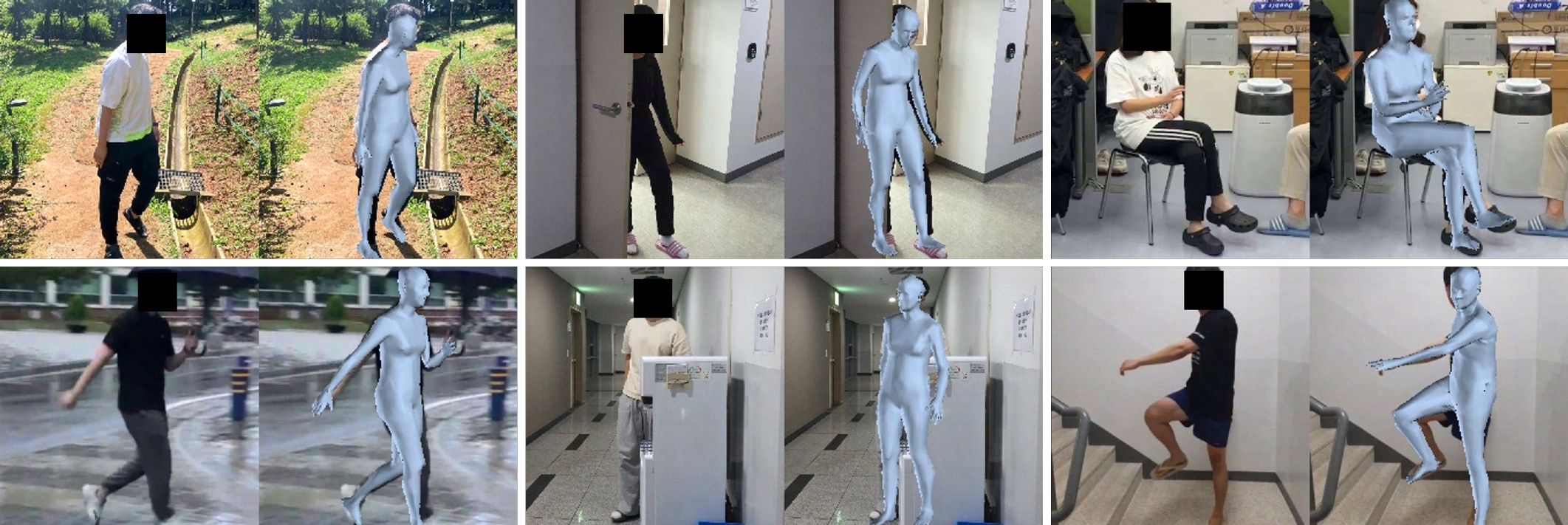

This paper presents a simple yet powerful method for 3D human mesh reconstruction from a single RGB image. Recently, transformers have been used to effectively estimate non-local interactions among all mesh vertices, and graph models have begun to capture relationships between body parts. Although these approaches have made remarkable progress in 3D human mesh reconstruction, it remains difficult to directly infer the relationship between the features encoded from the 2D input image and the 3D coordinates of each vertex. To resolve this problem, we propose a simple feature sampling scheme. The key idea is to sample features in the embedded space guided by points that are estimated to match the 2D projections of the ground-truth 3D mesh vertices. This helps the model concentrate on vertex-relevant features in 2D space, leading to the reconstruction of natural human poses. Furthermore, we apply progressive attention masking to precisely estimate local interactions between vertices even under severe occlusions. Experimental results on benchmark datasets show that the proposed method efficiently improves the performance of 3D human mesh reconstruction. The code and model are publicly available at: https://github.com/DCVL-3D/PointHMR_release.
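The point-guided sampling step can be illustrated with a minimal sketch. It assumes a weak-perspective camera for projecting vertices to 2D and bilinear interpolation for reading features at non-integer point locations. The function names `weak_perspective_project` and `sample_point_features` and the `fmap[y][x][channel]` layout are hypothetical and not taken from the PointHMR code. In the method described above the points are predicted by the network (with projected ground-truth vertices as targets); here they are simply passed in. Progressive attention masking is not covered.

```python
from typing import List, Sequence, Tuple

# Hypothetical layout for this sketch: fmap[y][x][channel].
FeatureMap = Sequence[Sequence[Sequence[float]]]
Point2D = Tuple[float, float]


def weak_perspective_project(
    vertices: Sequence[Tuple[float, float, float]],
    scale: float, tx: float, ty: float,
) -> List[Point2D]:
    """Assumed weak-perspective camera: drop z, then scale and translate x, y."""
    return [(scale * x + tx, scale * y + ty) for x, y, _z in vertices]


def sample_point_features(fmap: FeatureMap, points: Sequence[Point2D]) -> List[List[float]]:
    """Bilinearly sample one C-dim feature vector per 2D point.

    Points are (x, y) in feature-map pixel coordinates; points outside the
    map are clamped to the nearest border pixel.
    """
    h, w, c = len(fmap), len(fmap[0]), len(fmap[0][0])
    sampled = []
    for x, y in points:
        # Clamp to the valid range so the four neighbours always exist.
        x = min(max(float(x), 0.0), w - 1.0)
        y = min(max(float(y), 0.0), h - 1.0)
        x0, y0 = int(x), int(y)  # floor, since x and y are non-negative here
        x1, y1 = min(x0 + 1, w - 1), min(y0 + 1, h - 1)
        ax, ay = x - x0, y - y0  # fractional offsets used as interpolation weights
        feat = []
        for ch in range(c):
            top = (1 - ax) * fmap[y0][x0][ch] + ax * fmap[y0][x1][ch]
            bottom = (1 - ax) * fmap[y1][x0][ch] + ax * fmap[y1][x1][ch]
            feat.append((1 - ay) * top + ay * bottom)
        sampled.append(feat)
    return sampled


if __name__ == "__main__":
    # Toy 3x3 single-channel map whose value at (x, y) is 10*y + x.
    fmap = [[[10.0 * y + x] for x in range(3)] for y in range(3)]
    pts = weak_perspective_project([(0.0, 0.0, 1.0), (1.0, -1.0, 2.0)], 2.0, 1.0, 1.0)
    print(pts)                               # [(1.0, 1.0), (3.0, -1.0)]
    print(sample_point_features(fmap, pts))  # [[11.0], [2.0]]
```

Because the toy map is linear in x and y, bilinear sampling at (1.5, 0.5) returns exactly 6.5, and the second projected point (3.0, -1.0) falls outside the map and is clamped to pixel (2, 0). A framework implementation would more likely batch this step with PyTorch's `torch.nn.functional.grid_sample`, which expects coordinates normalized to [-1, 1].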

CVPR 2023

Results from the Paper

Ranked #19 on Monocular 3D Human Pose Estimation on Human3.6M (using extra training data)

Human3.6M

3DPW

FreiHAND