S3Net: A Single Stream Structure for Depth Guided Image Relighting

Depth guided any-to-any image relighting aims to generate a relit image from the original image and corresponding depth maps to match the illumination setting of the given guided image and its depth map. To the best of our knowledge, this task is a new challenge that has not been addressed in the previous literature. To address this issue, we propose a deep learning-based neural Single Stream Structure network called S3Net for depth guided image relighting. This network is an encoder-decoder model. We concatenate all images and corresponding depth maps as the input and feed them into the model. The decoder part contains the attention module and the enhanced module to focus on the relighting-related regions in the guided images. Experiments performed on challenging benchmark show that the proposed model achieves the 3 rd highest SSIM in the NTIRE 2021 Depth Guided Any-to-any Relighting Challenge.

PDF Abstract

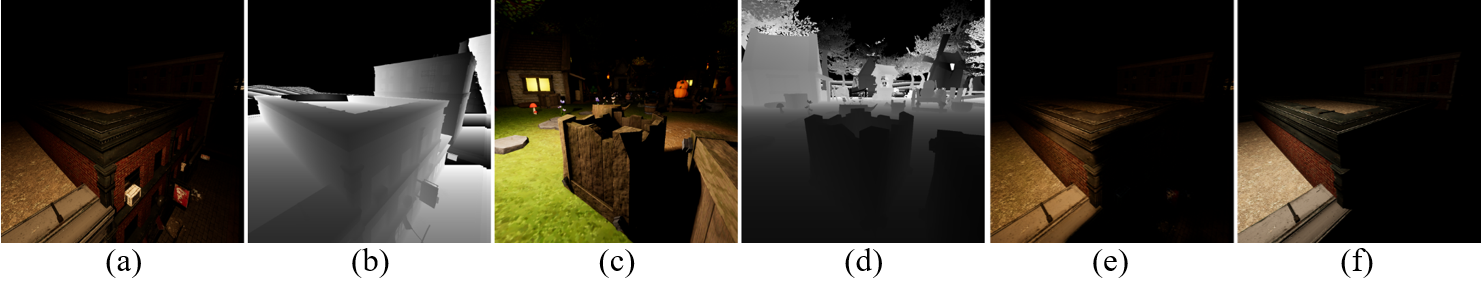

VIDIT

VIDIT