Enhancing Robust Representation in Adversarial Training: Alignment and Exclusion Criteria

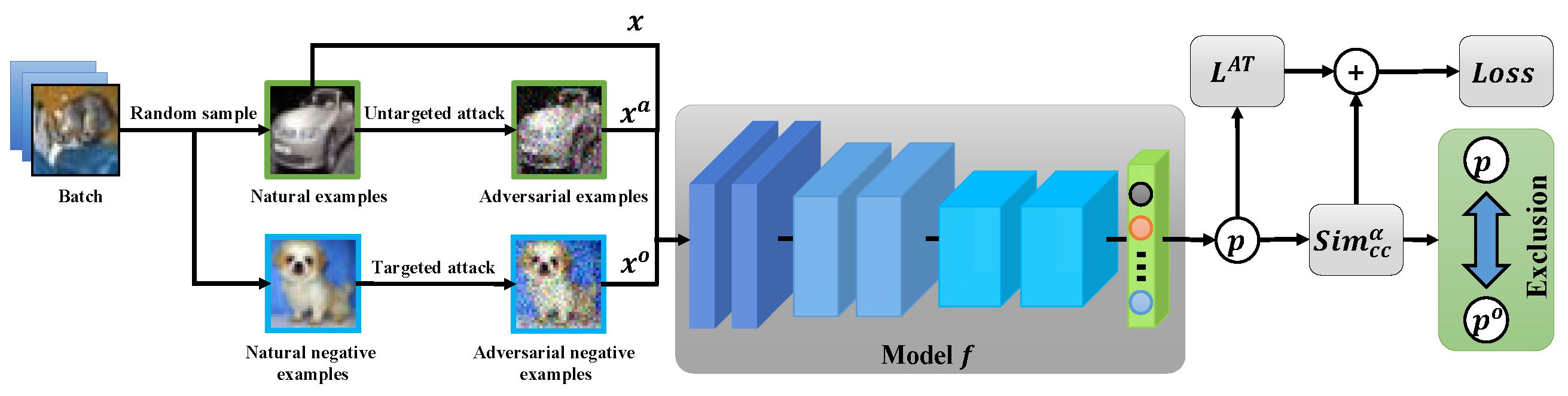

Deep neural networks are vulnerable to adversarial noise. Adversarial Training (AT) has been demonstrated to be the most effective defense strategy to protect neural networks from being fooled. However, we find that AT fails to learn robust features, resulting in poor adversarial robustness. To address this issue, we highlight two criteria of robust representation: (1) Exclusion: *the features of examples stay away from those of other classes*; (2) Alignment: *the features of natural examples and their corresponding adversarial examples are close to each other*. These criteria motivate us to propose a generic AT framework that gains robust representation through asymmetric negative contrast and reverse attention. Specifically, we design an asymmetric negative contrast based on predicted probabilities to push apart examples of different classes in the feature space. Moreover, we propose weighting features by the parameters of the linear classifier as reverse attention, to obtain class-aware features and pull together features of the same class. Empirical evaluations on three benchmark datasets show that our methods greatly advance the robustness of AT and achieve state-of-the-art performance.
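To make the reverse-attention idea concrete, here is a minimal sketch in plain Python of one way to weight a feature vector by a linear classifier's parameters. It is an illustration under stated assumptions, not the paper's implementation: the function names `reverse_attention` and `logits`, the elementwise form of the weighting, the bias-free classifier, and the toy values are all hypothetical, and the abstract does not say which class's parameters are used (ground-truth label or predicted class), so the class index is left as an argument.

```python
# Hypothetical sketch of "weighting features by classifier parameters".
# Assumptions (not from the abstract): elementwise weighting, no bias term,
# and a caller-supplied class index.

def reverse_attention(feature, classifier_weights, class_index):
    """Scale each feature dimension by the chosen class's classifier weight."""
    class_row = classifier_weights[class_index]
    if len(class_row) != len(feature):
        raise ValueError("feature and classifier row must have the same length")
    return [f * w for f, w in zip(feature, class_row)]


def logits(feature, classifier_weights):
    """Bias-free linear classifier: one dot product per class."""
    return [sum(f * w for f, w in zip(feature, row)) for row in classifier_weights]


# Toy setup: 3-dimensional features, 2 classes (all values are made up).
W = [[1.0, 0.0, -1.0],
     [0.0, 2.0, 0.5]]
z = [0.5, 1.0, 2.0]

z_class0 = reverse_attention(z, W, 0)  # class-aware feature for class 0
z_class1 = reverse_attention(z, W, 1)  # class-aware feature for class 1
scores = logits(z, W)
```

Under these assumptions, the entries of each class-aware feature sum to that class's logit, so the weighted feature can be read as a per-dimension breakdown of how much the classifier relies on each feature dimension for that class.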

Results from the Paper

Ranked #1 on Adversarial Attack on CIFAR-10 (Attack: AutoAttack metric)

CIFAR-10

ImageNet

CIFAR-100
