Richly Activated Graph Convolutional Network for Robust Skeleton-based Action Recognition

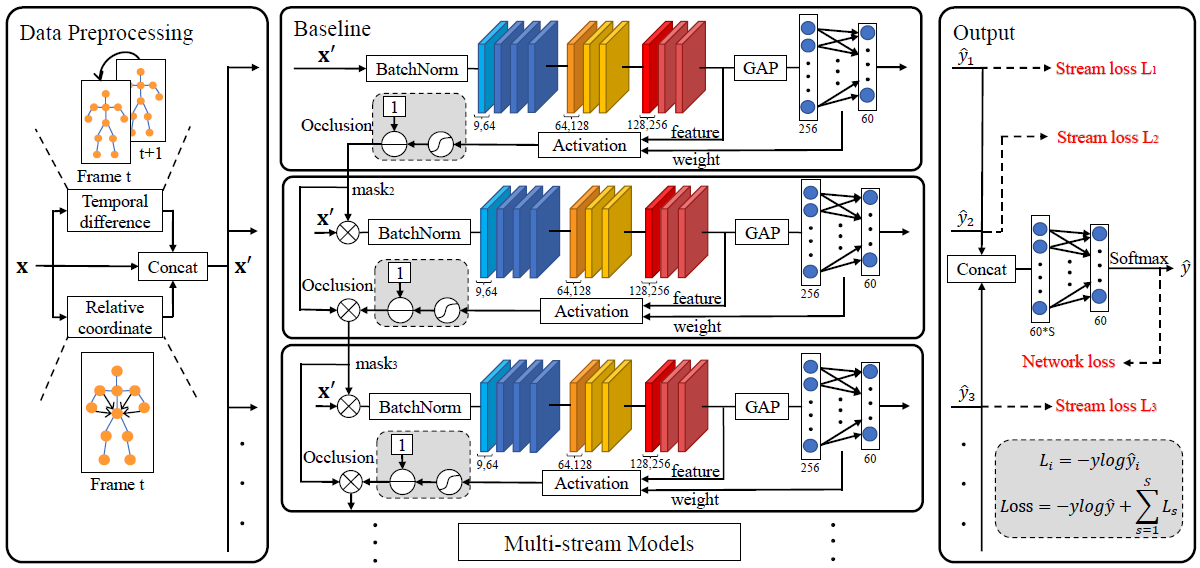

Current methods for skeleton-based human action recognition usually work with complete skeletons. In real scenarios, however, incomplete or noisy skeletons are inevitably captured, and the performance of current methods can deteriorate significantly when informative joints are occluded or disturbed. To improve the robustness of action recognition models, a multi-stream graph convolutional network (GCN) is proposed to explore sufficient discriminative features spread over all skeleton joints, so that the distributed, redundant representation reduces the sensitivity of the action models to non-standard skeletons. Concretely, the backbone GCN is extended by a series of ordered streams, each of which is responsible for learning discriminative features from the joints less activated by the preceding streams. The activation degree of each skeleton joint in each GCN stream is measured by class activation maps (CAM), and only the information from the unactivated joints is passed to the next stream, so that rich features over all active joints are obtained. The proposed method is therefore termed the richly activated GCN (RA-GCN). Compared with state-of-the-art (SOTA) methods, the RA-GCN achieves comparable performance on the standard NTU RGB+D 60 and 120 datasets. More crucially, on the synthetic occlusion and jittering datasets, the RA-GCN significantly alleviates the performance deterioration caused by occluded and disturbed joints.
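The stream-to-stream masking described in the abstract can be illustrated with a small sketch. This is a toy illustration rather than the authors' implementation: the per-joint CAM scores are supplied by hand instead of being computed by a GCN, and the min-max normalization, the `threshold` parameter, and the function names `activated_joints` and `stream_masks` are assumptions introduced here for clarity.

```python
import numpy as np


def activated_joints(cam, threshold=0.5):
    """Mark joints whose min-max normalized CAM score exceeds `threshold`.

    The normalization and threshold are illustrative assumptions,
    not taken from the paper.
    """
    cam = np.asarray(cam, dtype=float)
    span = cam.max() - cam.min()
    if span == 0:
        # A flat map activates no joint in this toy rule.
        return np.zeros(cam.shape, dtype=bool)
    return (cam - cam.min()) / span > threshold


def stream_masks(cams, threshold=0.5):
    """Return the joint mask seen by each stream (1 = visible, 0 = hidden).

    `cams` holds one 1-D array of per-joint scores per stream, in stream
    order. A joint activated by any earlier stream is hidden from all
    later streams, so each stream must rely on the remaining joints.
    """
    mask = np.ones(len(cams[0]))
    masks = []
    for cam in cams:
        masks.append(mask.copy())
        # Hide the joints this stream activated from the following streams.
        mask = mask * (~activated_joints(cam, threshold))
    return masks


# Hand-written scores for 4 joints and 3 streams (hypothetical values).
cams = [
    [0.9, 0.1, 0.2, 0.8],
    [0.0, 0.7, 0.1, 0.0],
    [0.0, 0.0, 0.6, 0.0],
]
masks = stream_masks(cams)
for index, m in enumerate(masks, start=1):
    print(f"stream {index} sees joints: {m.astype(int).tolist()}")
```

In this toy run the first stream sees every joint, the second sees only the joints the first left unactivated, and the third sees what remains after both. In a real model each stream's CAM would be computed from that stream's own masked input rather than fixed in advance.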

NTU RGB+D

NTU RGB+D 120
