Relational Sentence Embedding for Flexible Semantic Matching

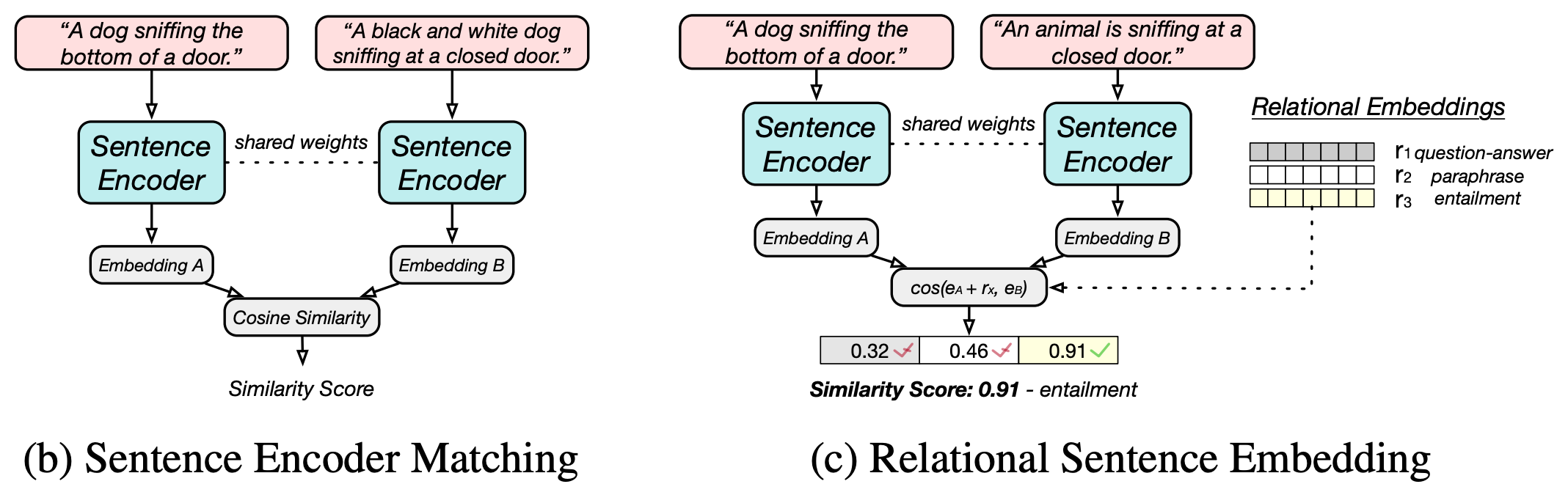

We present Relational Sentence Embedding (RSE), a new paradigm for further exploring the potential of sentence embeddings. Prior work mainly models the similarity between sentences based on their embedding distance. Because of the complex semantic meanings they convey, sentence pairs can hold various relation types, including but not limited to entailment, paraphrasing, and question-answer. Capturing such relational information is challenging for existing embedding methods. We address this problem by learning associated relational embeddings. Specifically, a relation-wise translation operation is applied to the source sentence to infer the corresponding target sentence with a pre-trained Siamese-based encoder. Fine-grained relational similarity scores can then be computed from the learned embeddings. We benchmark our method on 19 datasets covering a wide range of tasks, including semantic textual similarity, transfer, and domain-specific tasks. Experimental results show that our method is effective and flexible in modeling sentence relations and outperforms a series of state-of-the-art sentence embedding methods. Code is available at https://github.com/BinWang28/RSE
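The relation-wise translation idea can be illustrated with a small toy sketch. It is not taken from the RSE repository. It assumes the translation is a vector addition of a relation embedding to the source embedding and that the relational score is the cosine similarity to the target embedding. The function names (`translate`, `relational_score`), the relation labels, and the random vectors standing in for encoder outputs are all hypothetical.

```python
import math
import random


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def translate(source_emb, relation_emb):
    """Assumed translation operation: shift the source by the relation vector."""
    return [s + r for s, r in zip(source_emb, relation_emb)]


def relational_score(source_emb, relation_emb, target_emb):
    """Score how well (source, relation) predicts the target embedding."""
    return cosine(translate(source_emb, relation_emb), target_emb)


# Placeholder vectors; a real system would use sentence-encoder outputs
# and relation embeddings learned during training.
rng = random.Random(0)
dim = 8
relations = {
    name: [rng.gauss(0.0, 1.0) for _ in range(dim)]
    for name in ("entailment", "paraphrase", "question_answer")
}
source = [rng.gauss(0.0, 1.0) for _ in range(dim)]

# Build a target that is, by construction, the entailment translation of the source.
target = translate(source, relations["entailment"])

# One score per relation type; the highest-scoring relation is the prediction.
scores = {
    name: relational_score(source, vec, target)
    for name, vec in relations.items()
}
best = max(scores, key=scores.get)
print(best, scores)
```

Because the target is constructed as the source plus the entailment vector, entailment receives the highest score in this toy setup. With learned embeddings, the same per-relation scoring would yield one similarity value for each relation type of a sentence pair.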

GLUE

SST

MultiNLI

SNLI

QNLI

MRPC

SICK

MPQA Opinion Corpus

SciDocs
