RefSAM: Efficiently Adapting Segmenting Anything Model for Referring Video Object Segmentation

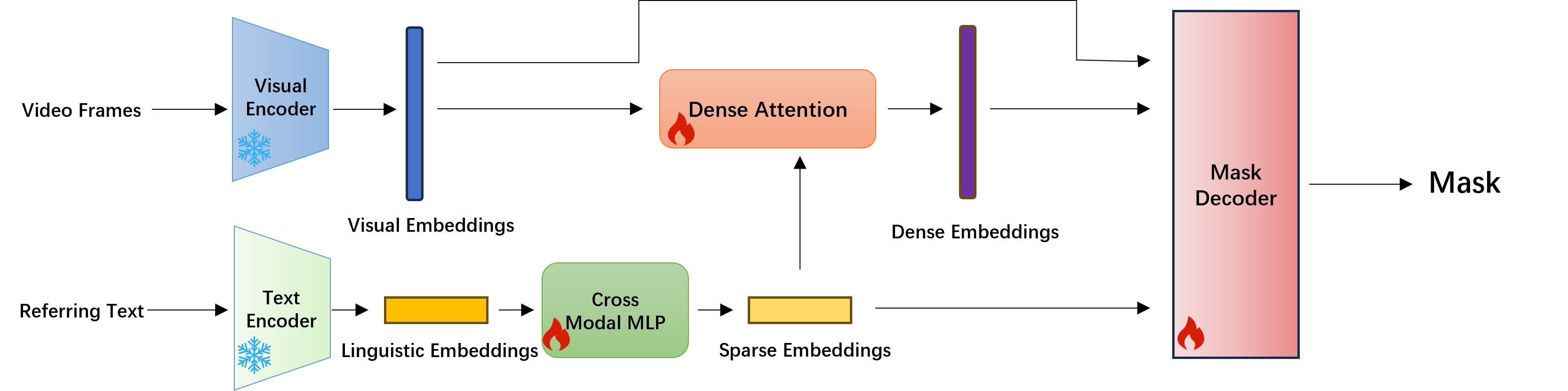

The Segment Anything Model (SAM) has gained significant attention for its impressive performance in image segmentation. However, it lacks proficiency in referring video object segmentation (RVOS) due to the need for precise user-interactive prompts and a limited understanding of different modalities, such as language and vision. This paper presents the RefSAM model, which explores the potential of SAM for RVOS by incorporating multi-view information from diverse modalities and successive frames at different timestamps in an online manner. Our proposed approach adapts the original SAM model to enhance cross-modality learning by employing a lightweight Cross-Modal MLP that projects the text embedding of the referring expression into sparse and dense embeddings, serving as user-interactive prompts. Additionally, we have introduced the hierarchical dense attention module to fuse hierarchical visual semantic information with sparse embeddings in order to obtain fine-grained dense embeddings, and an implicit tracking module to generate a track token and provide historical information for the mask decoder. Furthermore, we employ a parameter-efficient tuning strategy to effectively align and fuse the language and vision features. Through comprehensive ablation studies, we demonstrate the practical and effective design choices of our model. Extensive experiments conducted on Ref-Youtu-VOS, Ref-DAVIS17, and three referring image segmentation datasets validate the superiority and effectiveness of our RefSAM model over existing methods. The code and models will be made publicly at \href{https://github.com/LancasterLi/RefSAM}{github.com/LancasterLi/RefSAM}.

PDF Abstract