Recurrent Space-time Graph Neural Networks

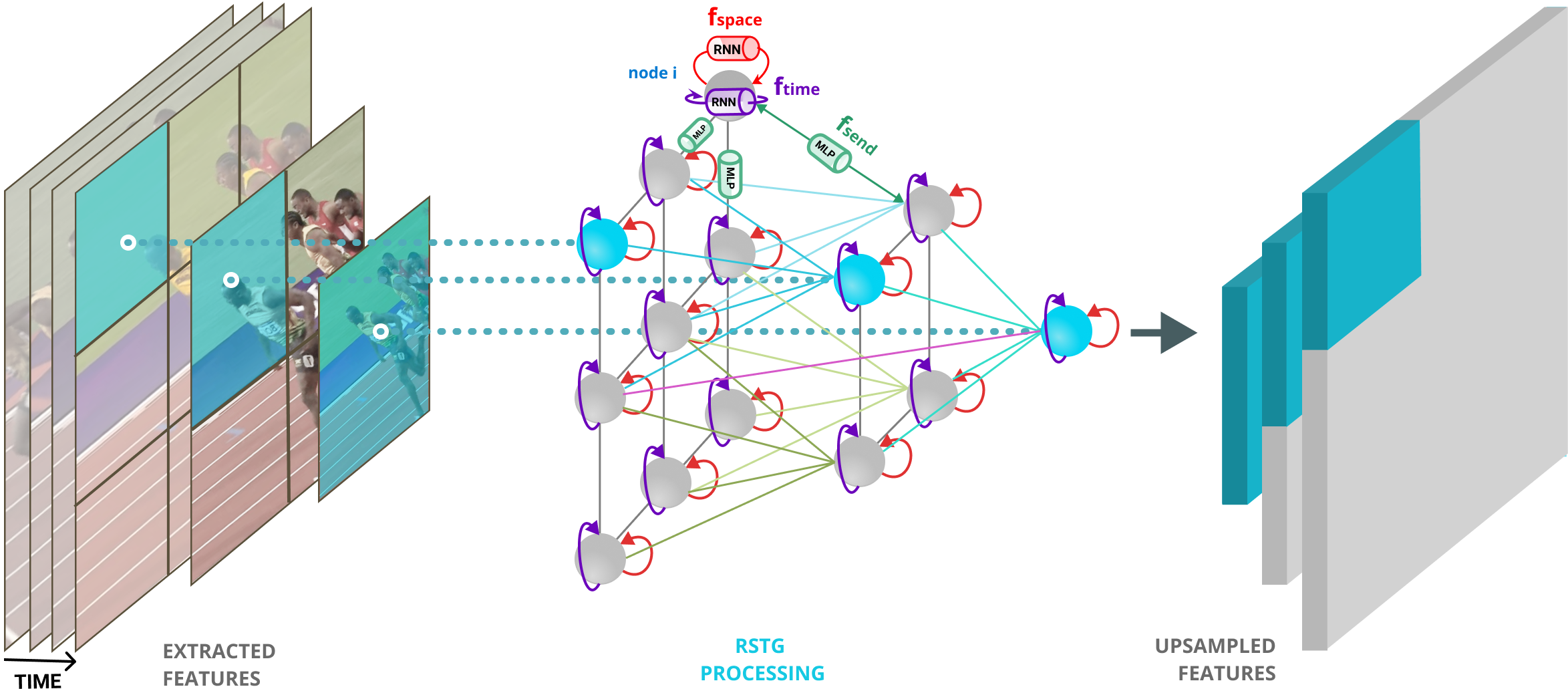

Learning in the space-time domain remains a very challenging problem in machine learning and computer vision. Current computational models for understanding spatio-temporal visual data are heavily rooted in the classical single-image-based paradigm. It is not yet well understood how to integrate information in space and time into a single, general model. We propose a neural graph model, recurrent in space and time, suitable for capturing both the local appearance and the complex higher-level interactions of different entities and objects within the changing world scene. Nodes and edges in our graph have dedicated neural networks for processing information. Nodes operate over features extracted from local parts in space and time and over previous memory states. Edges process messages between connected nodes at different locations and spatial scales, or between past and present time. Messages are passed iteratively in order to transmit information globally and establish long-range interactions. Our model is general and could learn to recognize a variety of high-level spatio-temporal concepts and be applied to different learning tasks. We demonstrate, through extensive experiments and ablation studies, that our model outperforms strong baselines and top published methods on recognizing complex activities in video. Moreover, we obtain state-of-the-art performance on the challenging Something-Something human-object interaction dataset.
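The node, edge, and memory roles described in the abstract can be illustrated with a small toy sketch. This is not the authors' implementation: the function names, the random weights, the `tanh` nonlinearity, and the mean aggregation of incoming messages are all assumptions chosen to keep the example short. It only shows the general pattern of iterating message passing within a frame while carrying node memory from one frame to the next.

```python
import math
import random

def matvec(W, v):
    """Multiply matrix W (list of rows) by vector v."""
    return [sum(w * x for w, x in zip(row, v)) for row in W]

def rand_matrix(rows, cols, rng, scale=0.5):
    """Hypothetical stand-in for learned weights: small random values."""
    return [[rng.uniform(-scale, scale) for _ in range(cols)] for _ in range(rows)]

def message_passing_step(states, edges, W_msg, W_self):
    """One round of messages along directed edges (src, dst)."""
    n, d = len(states), len(states[0])
    inbox = [[0.0] * d for _ in range(n)]
    counts = [0] * n
    for src, dst in edges:
        # Edge function (assumed): a nonlinear transform of the sender state.
        msg = [math.tanh(m) for m in matvec(W_msg, states[src])]
        inbox[dst] = [a + b for a, b in zip(inbox[dst], msg)]
        counts[dst] += 1
    new_states = []
    for i in range(n):
        # Node function (assumed): combine own state with the mean message.
        agg = [a / counts[i] for a in inbox[i]] if counts[i] else inbox[i]
        own = matvec(W_self, states[i])
        new_states.append([math.tanh(o + a) for o, a in zip(own, agg)])
    return new_states

def run_space_time(features, edges, d, iters=2, seed=0):
    """features[t][i] is the d-dimensional local feature of node i at frame t."""
    rng = random.Random(seed)
    W_in, W_mem = rand_matrix(d, d, rng), rand_matrix(d, d, rng)
    W_msg, W_self = rand_matrix(d, d, rng), rand_matrix(d, d, rng)
    memory = [[0.0] * d for _ in range(len(features[0]))]
    for frame in features:
        # Time recurrence: mix the current local feature with the previous memory.
        states = [
            [math.tanh(a + b) for a, b in zip(matvec(W_in, x), matvec(W_mem, m))]
            for x, m in zip(frame, memory)
        ]
        # Space recurrence: repeated rounds spread information across the graph.
        for _ in range(iters):
            states = message_passing_step(states, edges, W_msg, W_self)
        memory = states
    return memory

# Three nodes in a chain 0 - 1 - 2, observed over two frames.
edges = [(0, 1), (1, 0), (1, 2), (2, 1)]
features = [
    [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]],
    [[0.0, 1.0], [1.0, 0.0], [0.5, 0.5]],
]
final = run_space_time(features, edges, d=2)
```

In this toy chain, node 2 is not directly connected to node 0, so a single round of message passing on one frame leaves node 2 unaffected by node 0's feature. A second round lets that information arrive through node 1, which is the sense in which iterating messages establishes longer-range interactions.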

NeurIPS 2019

Datasets

Results from the Paper

Ranked #58 on Action Recognition on Something-Something V1 (using extra training data)

Something-Something V1
