Rad-ReStruct: A Novel VQA Benchmark and Method for Structured Radiology Reporting

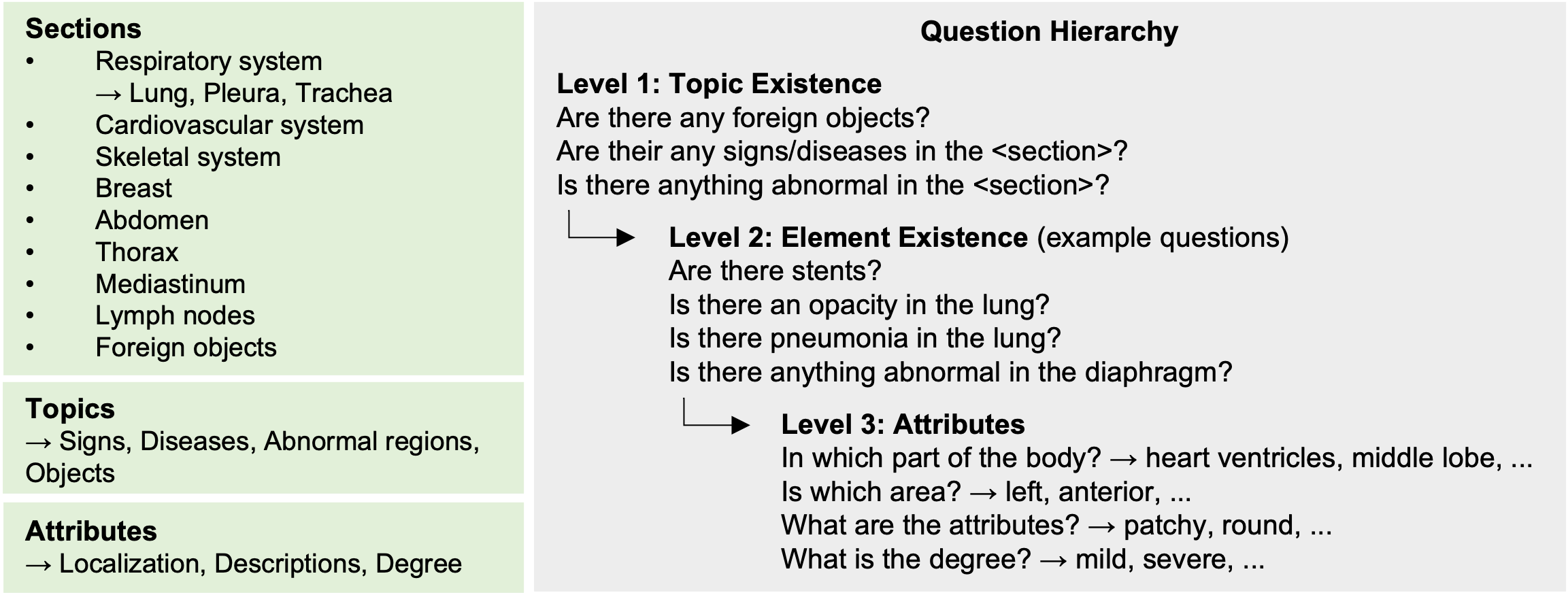

Radiology reporting is a crucial part of the communication between radiologists and other medical professionals, but it can be time-consuming and error-prone. One approach to alleviate this is structured reporting, which saves time and enables a more accurate evaluation than free-text reports. However, there is limited research on automating structured reporting, and no public benchmark is available for evaluating and comparing different methods. To close this gap, we introduce Rad-ReStruct, a new benchmark dataset that provides fine-grained, hierarchically ordered annotations in the form of structured reports for X-Ray images. We model the structured reporting task as hierarchical visual question answering (VQA) and propose hi-VQA, a novel method that considers prior context in the form of previously asked questions and answers for populating a structured radiology report. Our experiments show that hi-VQA achieves competitive performance to the state-of-the-art on the medical VQA benchmark VQARad while performing best among methods without domain-specific vision-language pretraining and provides a strong baseline on Rad-ReStruct. Our work represents a significant step towards the automated population of structured radiology reports and provides a valuable first benchmark for future research in this area. Our dataset and code is available at https://github.com/ChantalMP/Rad-ReStruct.

PDF Abstract

Rad-ReStruct

Rad-ReStruct

VQA-RAD

VQA-RAD