QuaRL: Quantization for Fast and Environmentally Sustainable Reinforcement Learning

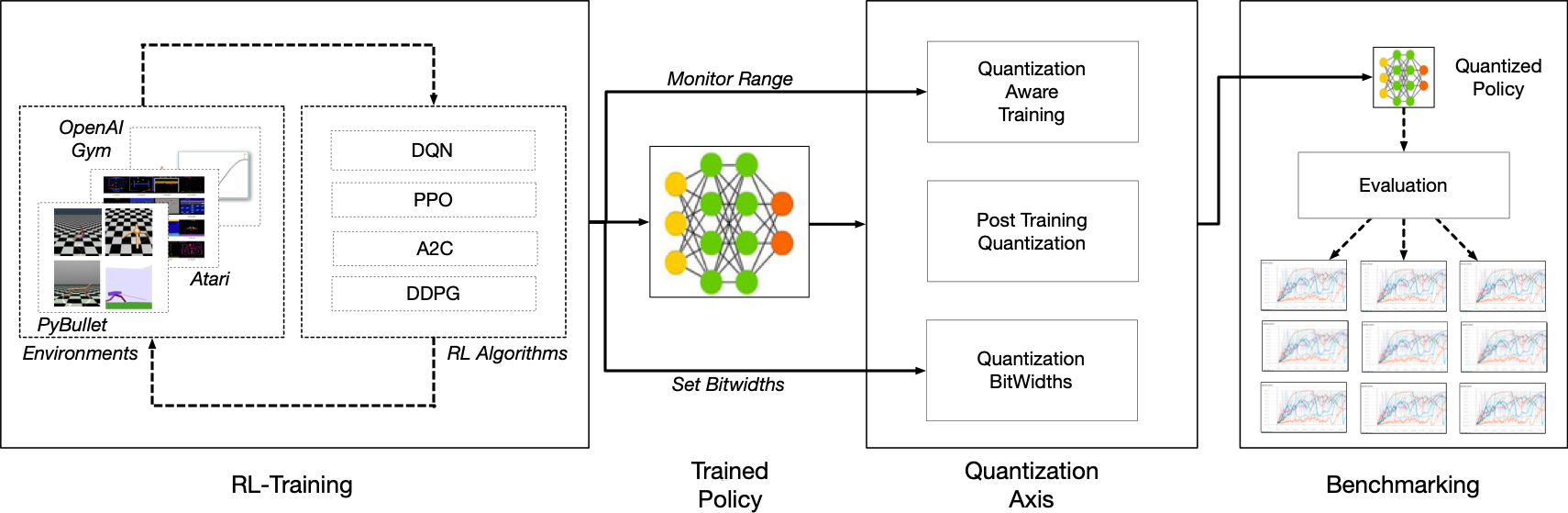

Deep reinforcement learning continues to show tremendous potential in achieving task-level autonomy; however, its computational and energy demands remain prohibitively high. In this paper, we tackle this problem by applying quantization to reinforcement learning. To that end, we introduce a novel Reinforcement Learning (RL) training paradigm, ActorQ, to speed up actor-learner distributed RL training. ActorQ leverages 8-bit quantized actors to speed up data collection without affecting learning convergence. Our quantized distributed RL training system, ActorQ, demonstrates end-to-end speedups between 1.5× and 5.41× and faster convergence over full-precision training on a range of tasks (DeepMind Control Suite) and different RL algorithms (D4PG, DQN). Furthermore, we compare the carbon emissions (kg of CO2) of ActorQ versus standard reinforcement learning algorithms on various tasks. Across various settings, we show that ActorQ enables more environmentally friendly reinforcement learning by achieving carbon emission improvements between 1.9× and 3.76× compared to training RL agents in full precision. We believe that this is the first of many future works on enabling computationally and energy-efficient, sustainable reinforcement learning. The source code is publicly available at https://github.com/harvard-edge/QuaRL.
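To make the idea of an 8-bit quantized actor concrete, the following is a minimal sketch of uniform affine quantization applied to an array of policy weights. The abstract does not specify which quantization scheme ActorQ uses, so the scheme and the helper names `quantize_uint8` and `dequantize_uint8` are illustrative assumptions, not the authors' implementation.

```python
import numpy as np


def quantize_uint8(w):
    """Map a float array to uint8 with a shared scale and zero point.

    Hypothetical helper: uniform affine quantization is assumed here,
    it is not taken from the ActorQ source code.
    """
    w = np.asarray(w, dtype=np.float32)
    # Extend the range to include 0.0 so that zero is exactly representable.
    lo = min(float(w.min()), 0.0)
    hi = max(float(w.max()), 0.0)
    # Width of one quantization step; fall back to 1.0 for an all-zero array.
    scale = (hi - lo) / 255.0 if hi > lo else 1.0
    # Integer code that represents the real value 0.0.
    zero_point = int(round(-lo / scale))
    q = np.clip(np.round(w / scale) + zero_point, 0, 255).astype(np.uint8)
    return q, scale, zero_point


def dequantize_uint8(q, scale, zero_point):
    """Recover approximate float values from the 8-bit codes."""
    return (q.astype(np.float32) - zero_point) * scale


# Example: quantize a stand-in for actor weights and measure the error.
weights = np.linspace(-1.0, 2.0, 101, dtype=np.float32)
q, scale, zero_point = quantize_uint8(weights)
restored = dequantize_uint8(q, scale, zero_point)
max_error = float(np.abs(restored - weights).max())
```

In this sketch the reconstruction error of each weight stays within roughly half a quantization step (`scale / 2`), while each weight is stored in one byte instead of four.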

OpenAI Gym

DeepMind Control Suite