PyHessian: Neural Networks Through the Lens of the Hessian

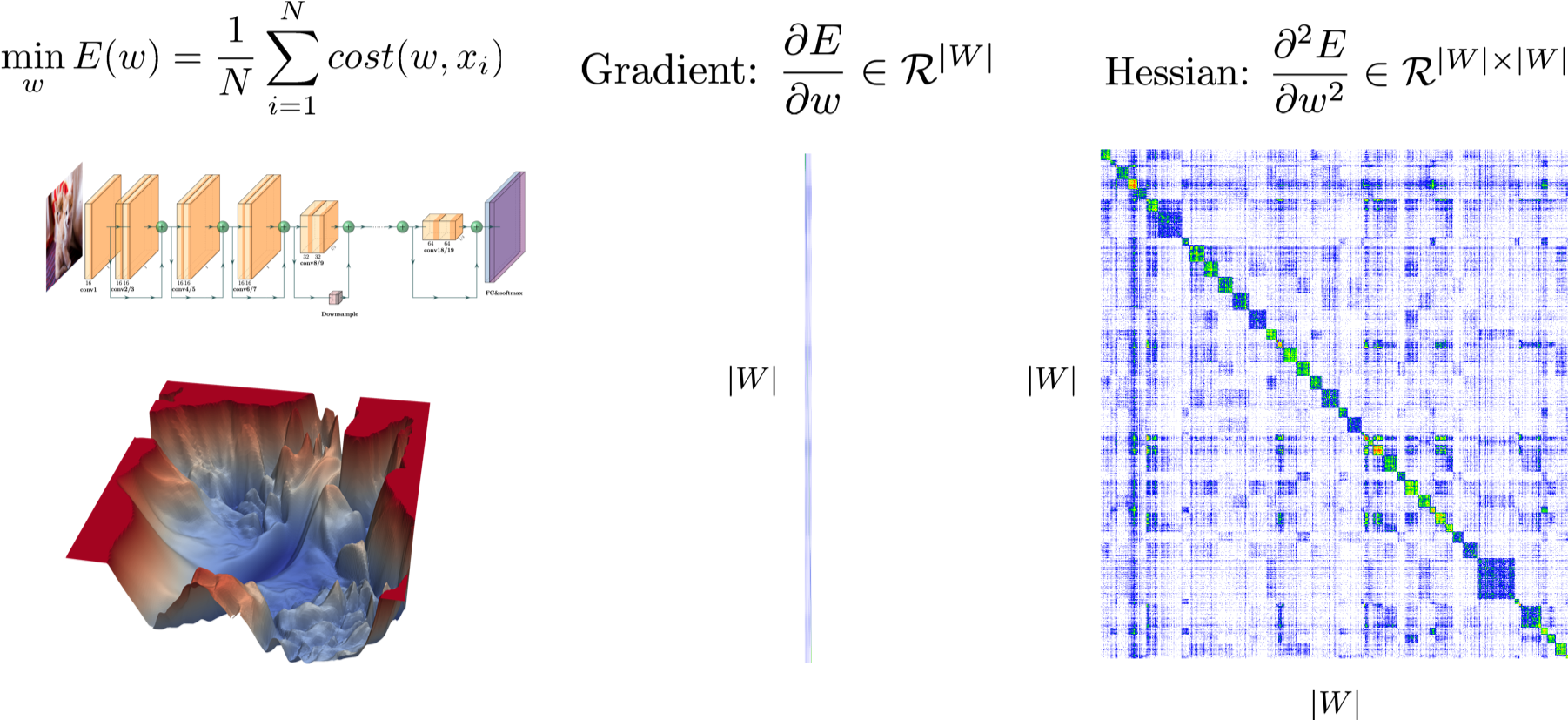

We present PYHESSIAN, a new scalable framework that enables fast computation of Hessian (i.e., second-order derivative) information for deep neural networks. PYHESSIAN enables fast computations of the top Hessian eigenvalues, the Hessian trace, and the full Hessian eigenvalue/spectral density, and it supports distributed-memory execution on cloud/supercomputer systems and is available as open source. This general framework can be used to analyze neural network models, including the topology of the loss landscape (i.e., curvature information) to gain insight into the behavior of different models/optimizers. To illustrate this, we analyze the effect of residual connections and Batch Normalization layers on the trainability of neural networks. One recent claim, based on simpler first-order analysis, is that residual connections and Batch Normalization make the loss landscape smoother, thus making it easier for Stochastic Gradient Descent to converge to a good solution. Our extensive analysis shows new finer-scale insights, demonstrating that, while conventional wisdom is sometimes validated, in other cases it is simply incorrect. In particular, we find that Batch Normalization does not necessarily make the loss landscape smoother, especially for shallower networks.

PDF Abstract