Pushing the Limits of Unsupervised Unit Discovery for SSL Speech Representation

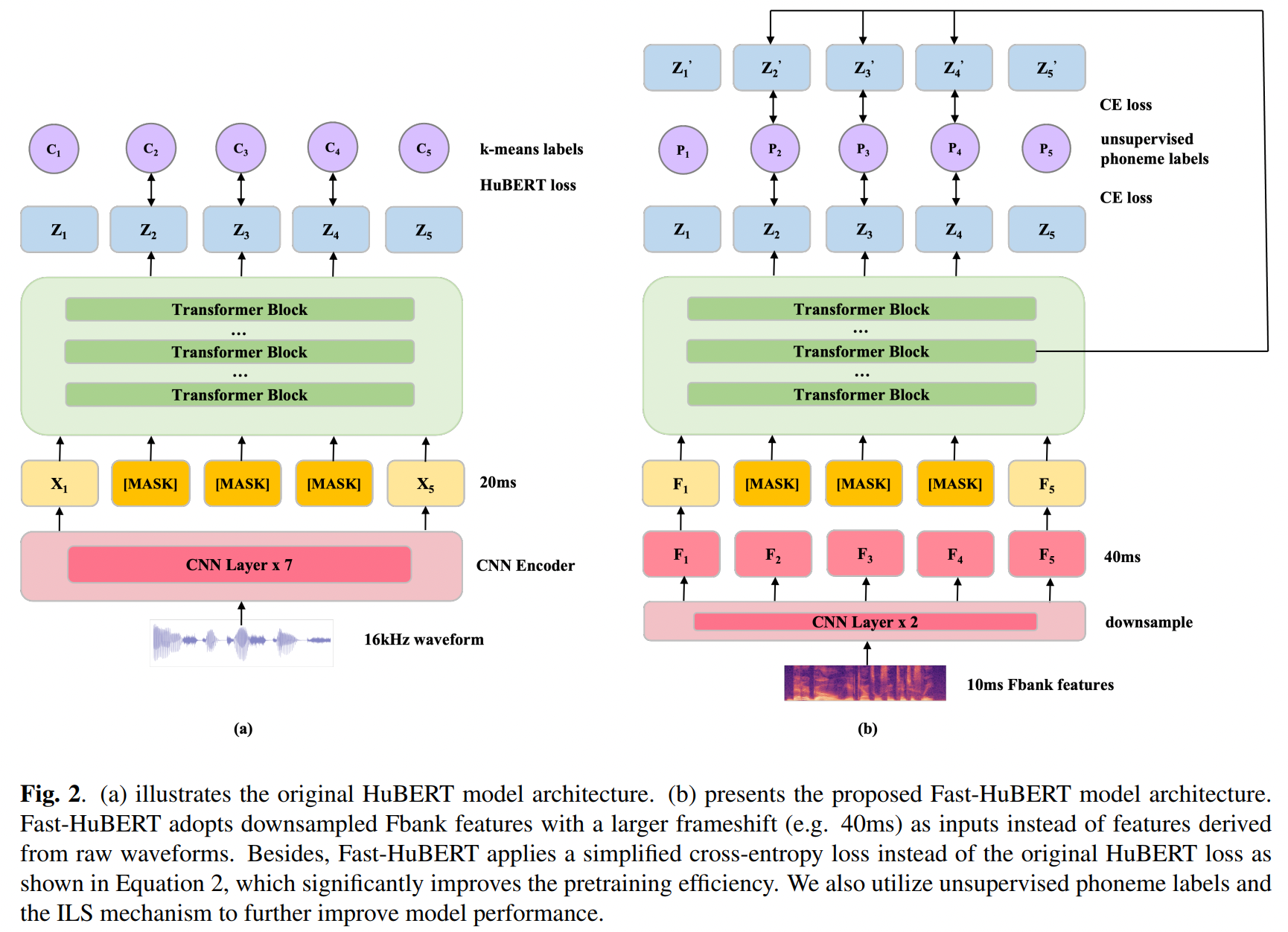

The excellent generalization ability of self-supervised learning (SSL) for speech foundation models has garnered significant attention. HuBERT is a successful example that uses offline clustering to convert speech features into discrete units for a masked language modeling pretext task. However, simply using k-means clusters of features as targets does not fully exploit the model's potential. In this work, we present an unsupervised method to improve SSL targets. We propose two models, MonoBERT and PolyBERT, which leverage context-independent and context-dependent phoneme-based units, respectively, for pre-training. Our models significantly outperform other SSL models on the LibriSpeech benchmark without the need for iterative re-clustering and re-training. Furthermore, our models equipped with context-dependent units even outperform target-improvement models that use labeled data during pre-training. We demonstrate through experiments how we progressively improve the unit discovery process.
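To make the baseline mentioned in the abstract concrete, the following is a minimal sketch of HuBERT-style target generation: frame-level features are clustered offline with k-means, and the cluster IDs at masked frames become the prediction targets. It is not the MonoBERT or PolyBERT unit discovery procedure. The function names (`kmeans_units`, `mask_spans`), the random features standing in for real speech features, and all hyperparameters are assumptions chosen for illustration.

```python
import numpy as np


def assign_units(features, centroids):
    """Return the index of the nearest centroid for every frame."""
    # Squared Euclidean distance between each frame and each centroid: (T, K).
    dists = ((features[:, None, :] - centroids[None, :, :]) ** 2).sum(axis=-1)
    return dists.argmin(axis=1)


def kmeans_units(features, num_units, num_iters=20, seed=0):
    """Cluster frame-level features of shape (T, D) into discrete unit IDs."""
    rng = np.random.default_rng(seed)
    # Initialise centroids from randomly chosen, distinct frames.
    init_idx = rng.choice(len(features), size=num_units, replace=False)
    centroids = features[init_idx].copy()
    for _ in range(num_iters):
        units = assign_units(features, centroids)
        for k in range(num_units):
            members = features[units == k]
            if len(members) > 0:  # keep the old centroid if a cluster is empty
                centroids[k] = members.mean(axis=0)
    return assign_units(features, centroids), centroids


def mask_spans(num_frames, span_len, num_spans, seed=0):
    """Boolean mask marking randomly placed (possibly overlapping) spans."""
    rng = np.random.default_rng(seed)
    mask = np.zeros(num_frames, dtype=bool)
    starts = rng.integers(0, num_frames - span_len + 1, size=num_spans)
    for start in starts:
        mask[start:start + span_len] = True
    return mask


# Toy stand-in for acoustic features: 200 frames, 13 dimensions (assumed sizes).
features = np.random.default_rng(0).normal(size=(200, 13))
units, centroids = kmeans_units(features, num_units=8)
mask = mask_spans(len(features), span_len=10, num_spans=4)

# In masked prediction, the model sees masked inputs and predicts these IDs.
targets = units[mask]
```

In this sketch the quality of `targets` depends entirely on how well the clusters align with phonetic content, which is the aspect of target design that the abstract says the proposed method improves.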


Datasets

Results from the Paper

| Task | Dataset | Model | Metric Name | Metric Value | Global Rank | Benchmark |
|---|---|---|---|---|---|---|
| Automatic Speech Recognition | LibriSpeech test-clean | MonoBERT | Word Error Rate (WER) | 3.2 | # 1 | |
| Automatic Speech Recognition | LibriSpeech test-clean | PolyBERT | Word Error Rate (WER) | 3.1 | # 2 | |
| Automatic Speech Recognition | LibriSpeech test-other | MonoBERT | Word Error Rate (WER) | 7.6 | # 1 | |
| Automatic Speech Recognition | LibriSpeech test-other | PolyBERT | Word Error Rate (WER) | 7.3 | # 2 | |
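For readers unfamiliar with the metric in the table, the sketch below shows one common way to compute word error rate: the word-level edit distance (substitutions, deletions, insertions) between a hypothesis and its reference, divided by the number of reference words and expressed as a percentage. The function name `word_error_rate` and the example sentences are illustrative assumptions, not the paper's evaluation code.

```python
def word_error_rate(reference, hypothesis):
    """Word-level edit distance divided by reference length, in percent."""
    ref = reference.split()
    hyp = hypothesis.split()
    if not ref:
        raise ValueError("reference must contain at least one word")
    # prev[j] holds the edit distance between the reference prefix processed
    # so far and the first j hypothesis words.
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i] + [0] * len(hyp)
        for j, hyp_word in enumerate(hyp, start=1):
            substitution = prev[j - 1] + (0 if ref_word == hyp_word else 1)
            deletion = prev[j] + 1       # reference word missing from hypothesis
            insertion = curr[j - 1] + 1  # extra word in hypothesis
            curr[j] = min(substitution, deletion, insertion)
        prev = curr
    return 100.0 * prev[-1] / len(ref)


# One substitution ("sat" -> "sit") and one deletion ("the") over 6 words.
example_wer = word_error_rate("the cat sat on the mat", "the cat sit on mat")
```

Benchmark figures are normally computed over a whole test set by summing the edit distances and reference lengths across utterances before dividing, rather than averaging per-utterance rates.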

LibriSpeech
