Provably Robust Boosted Decision Stumps and Trees against Adversarial Attacks

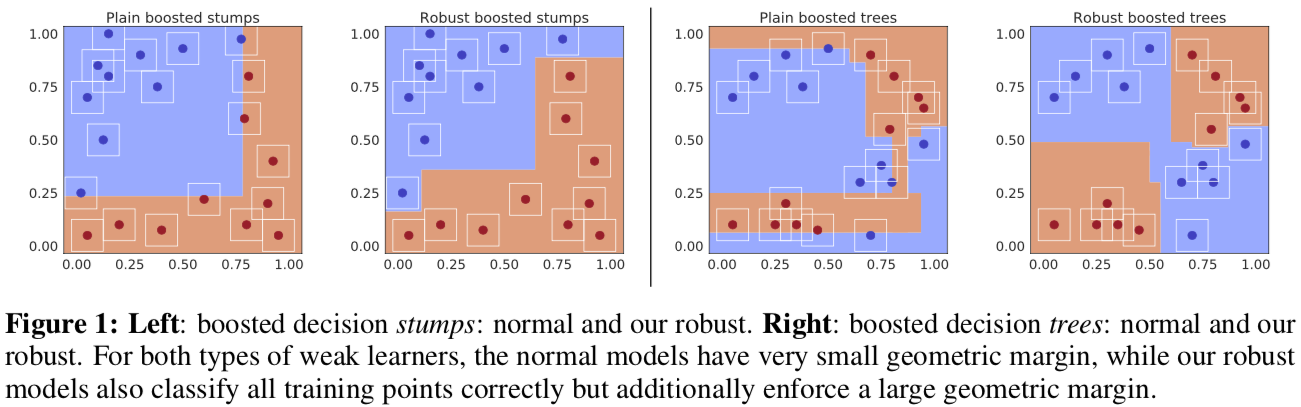

Adversarial robustness has been studied extensively for neural networks. For boosted decision trees and decision stumps, however, there are almost no results, even though they are widely used in practice (e.g. XGBoost) due to their accuracy, interpretability, and efficiency. We show in this paper that for boosted decision stumps the *exact* min-max robust loss and test error for an $l_\infty$-attack can be computed in $O(T\log T)$ time per input, where $T$ is the number of decision stumps, and that the optimal update step of the ensemble can be done in $O(n^2\,T\log T)$ time, where $n$ is the number of data points. For boosted trees we show how to efficiently calculate and optimize an upper bound on the robust loss, which leads to state-of-the-art robust test error for boosted trees on MNIST (12.5% for $\epsilon_\infty=0.3$), FMNIST (23.2% for $\epsilon_\infty=0.1$), and CIFAR-10 (74.7% for $\epsilon_\infty=8/255$). Moreover, the robust test error rates we achieve are competitive with those of provably robust convolutional networks. The code for all our experiments is available at http://github.com/max-andr/provably-robust-boosting
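To make the per-input computation concrete, here is a minimal sketch of how an exact worst-case margin could be computed for a stump ensemble. It is not taken from the authors' repository. It assumes binary labels $y \in \{-1, +1\}$ and stumps of the form $f_t(x) = w_{l,t} + w_{r,t}\,\mathbb{1}[x_{c_t} \ge b_t]$; the function name `robust_margin_stumps` and all parameter names are hypothetical. The idea is that an $l_\infty$ perturbation acts on each coordinate independently, so the minimization splits into one-dimensional problems over sorted thresholds. The sort is the dominant cost per coordinate; the grouping loop is kept simple and is not tuned to match the stated complexity exactly.

```python
import numpy as np

def robust_margin_stumps(x, y, coords, thresholds, w_left, w_right, eps):
    """Exact min over ||delta||_inf <= eps of y * F(x + delta), where
    F(x) = sum_t (w_left[t] + w_right[t] * 1[x[coords[t]] >= thresholds[t]]).
    Assumed parametrization; names are illustrative, not the authors' API."""
    coords = np.asarray(coords)
    thresholds = np.asarray(thresholds, dtype=float)
    w_left = np.asarray(w_left, dtype=float)
    w_right = np.asarray(w_right, dtype=float)

    total = 0.0
    # Coordinates are perturbed independently, so minimize each one separately.
    for j in np.unique(coords):
        idx = np.flatnonzero(coords == j)
        b, wl, wr = thresholds[idx], w_left[idx], w_right[idx]
        lo, hi = x[j] - eps, x[j] + eps

        # Margin contribution at the left end of the interval [lo, hi].
        base = y * (wl.sum() + wr[b <= lo].sum())

        # Thresholds that can be crossed while moving from lo to hi.
        inside = (b > lo) & (b <= hi)
        order = np.argsort(b[inside])
        b_in = b[inside][order]
        prefix = base + np.cumsum(y * wr[inside][order])

        # Stumps sharing a threshold switch together, so only the prefix
        # value at the last stump of each tie group is attainable.
        attainable = np.ones(b_in.size, dtype=bool)
        attainable[:-1] = b_in[1:] != b_in[:-1]

        total += np.concatenate(([base], prefix[attainable])).min()
    return total
```

With `eps=0` the function reduces to the ordinary margin $y\,F(x)$. Under this convention, a negative return value means that some perturbation within the $\epsilon$-ball flips the sign of the ensemble output for that input.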

NeurIPS 2019

CIFAR-10
