Point-supervised Single-cell Segmentation via Collaborative Knowledge Sharing

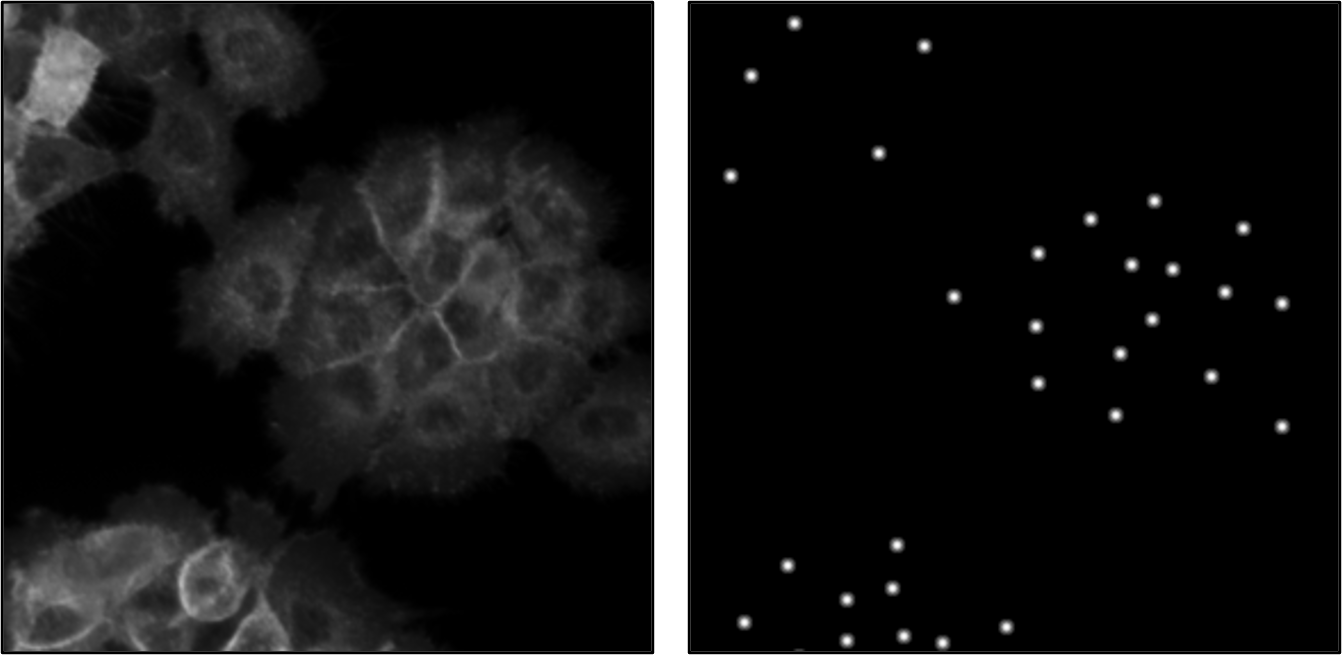

Despite their superior performance, deep-learning methods often require large-scale, well-annotated training data. In response, recent literature has seen a proliferation of efforts aimed at reducing the annotation burden. This paper focuses on a weakly-supervised training setting for single-cell segmentation models, where the only available training labels are the rough locations of individual cells. This problem is of practical interest because nuclei counter-stain data are widely available in the biomedical literature, and cell locations can be derived from them programmatically. Of more general interest is a proposed self-learning method called collaborative knowledge sharing, which is related to, but distinct from, the better-known consistency learning methods. This strategy achieves self-learning by sharing knowledge between a principal model and a very lightweight collaborator model. Importantly, the two models differ entirely in their architectures, capacities, and outputs: in our case, the principal model approaches the segmentation problem from an object-detection perspective, whereas the collaborator model approaches it from a semantic-segmentation perspective. We assessed the effectiveness of this strategy by conducting experiments on LIVECell, a large single-cell segmentation dataset of bright-field images, and on the A431 dataset, a fluorescence image dataset in which the location labels are generated automatically from nuclei counter-stain data. The code is available at https://github.com/jiyuuchc/lacss
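The abstract notes that cell locations can be derived programmatically from a nuclei counter-stain, but it does not describe how the A431 labels were actually produced. The snippet below is only a minimal illustrative sketch under stated assumptions: a single global intensity threshold, 4-connected bright regions treated as nuclei, and each region's centroid taken as the point label. The function name `nuclei_point_labels` and its parameters are hypothetical and are not part of the lacss code.

```python
from collections import deque
from typing import List, Sequence, Tuple


def nuclei_point_labels(
    image: Sequence[Sequence[float]],
    threshold: float,
    min_area: int = 1,
) -> List[Tuple[float, float]]:
    """Hypothetical helper: return (row, col) centroids of bright nuclei.

    Assumes pixels strictly above `threshold` belong to nuclei and that each
    4-connected foreground region is one nucleus. Regions with fewer than
    `min_area` pixels are discarded as noise.
    """
    h = len(image)
    w = len(image[0]) if h else 0
    visited = [[False] * w for _ in range(h)]
    points: List[Tuple[float, float]] = []

    for r in range(h):
        for c in range(w):
            if visited[r][c] or image[r][c] <= threshold:
                continue
            # Breadth-first flood fill collects one connected region.
            queue = deque([(r, c)])
            visited[r][c] = True
            pixels = []
            while queue:
                y, x = queue.popleft()
                pixels.append((y, x))
                for dy, dx in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                    ny, nx = y + dy, x + dx
                    if (
                        0 <= ny < h
                        and 0 <= nx < w
                        and not visited[ny][nx]
                        and image[ny][nx] > threshold
                    ):
                        visited[ny][nx] = True
                        queue.append((ny, nx))
            if len(pixels) >= min_area:
                # The centroid of the region serves as the point label.
                cy = sum(p[0] for p in pixels) / len(pixels)
                cx = sum(p[1] for p in pixels) / len(pixels)
                points.append((cy, cx))
    return points


if __name__ == "__main__":
    stain = [
        [0, 0, 0, 0, 0],
        [0, 9, 9, 0, 0],
        [0, 9, 9, 0, 0],
        [0, 0, 0, 0, 8],
        [0, 0, 0, 0, 8],
    ]
    print(nuclei_point_labels(stain, threshold=5))  # [(1.5, 1.5), (3.5, 4.0)]
```

Regions are reported in row-major scan order of their first pixel. A realistic pipeline would probably add smoothing, an adaptive threshold such as Otsu's method, and a step like watershed to split touching nuclei. Because the training labels here are only rough locations, some centroid error is tolerable.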


LIVECell
