Pinpointing Why Object Recognition Performance Degrades Across Income Levels and Geographies

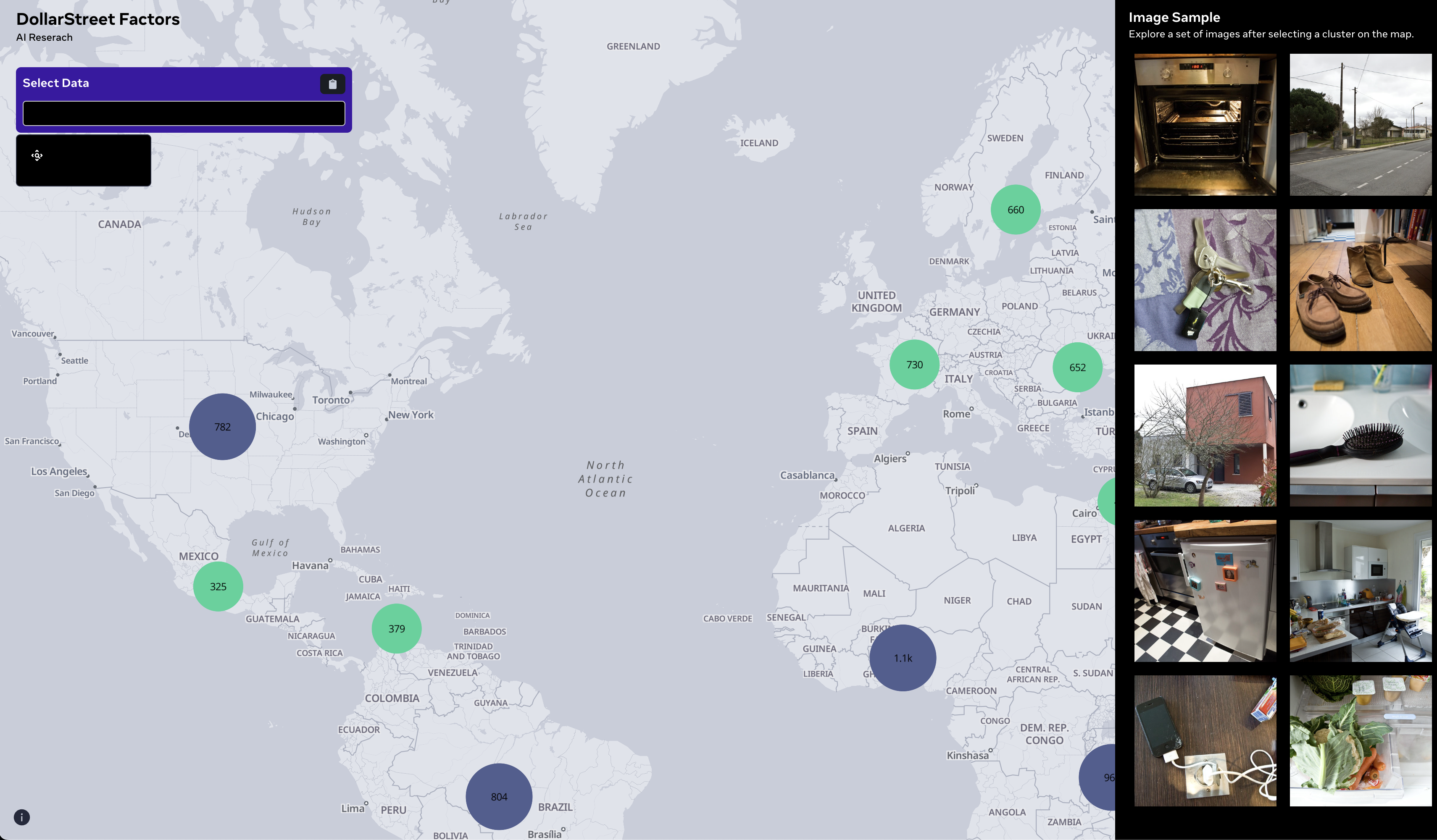

Despite impressive advances in object recognition, deep learning systems' performance degrades significantly across geographies and at lower income levels, raising pressing concerns of inequity. Addressing such performance gaps remains a challenge, as little is understood about why performance degrades across incomes or geographies. We take a step in this direction by annotating images from Dollar Street, a popular benchmark of geographically and economically diverse images, labeling each image with factors such as color, shape, and background. These annotations unlock a new granular view into how objects differ across incomes and regions. We then use these object differences to pinpoint model vulnerabilities across incomes and regions. We study a range of modern vision models, finding that performance disparities are most associated with differences in texture, occlusion, and darker lighting. We illustrate how insights from our factor labels can surface mitigations to reduce models' performance disparities. As an example, we show that mitigating a model's vulnerability to texture can improve performance on the lower income level. We release all the factor annotations along with an interactive dashboard to facilitate research into more equitable vision systems.
