PepLand: a large-scale pre-trained peptide representation model for a comprehensive landscape of both canonical and non-canonical amino acids

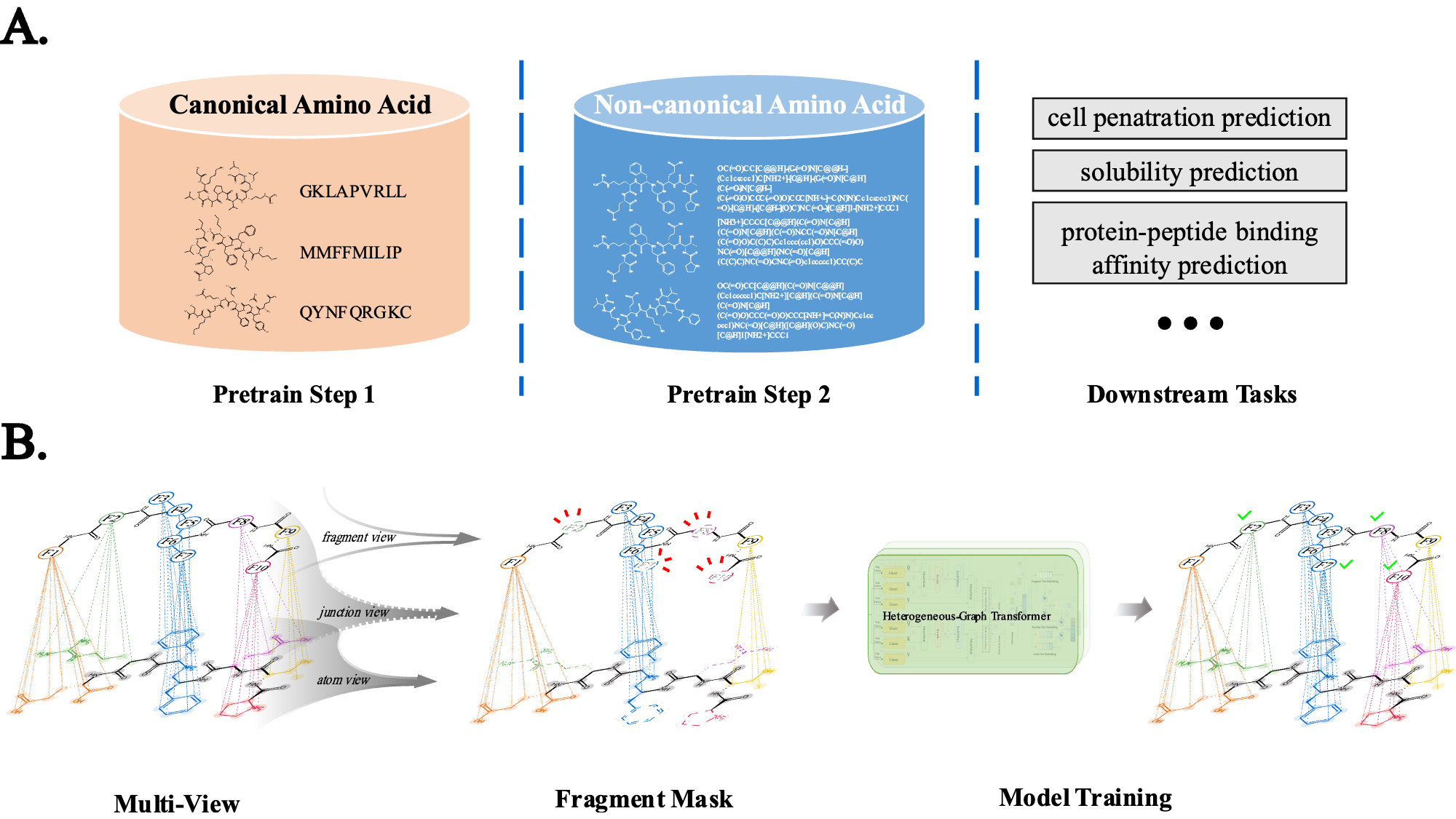

In recent years, the scientific community has become increasingly interested in peptides containing non-canonical amino acids because of their superior stability and resistance to proteolytic degradation. Incorporating these residues offers promising ways to modify the biological, pharmacological, and physicochemical attributes of both endogenous and engineered peptides. Despite these advantages, the field still lacks an effective pre-trained model for extracting feature representations from such complex peptide sequences. We propose PepLand, a novel pre-training architecture for the representation and property analysis of peptides spanning both canonical and non-canonical amino acids. PepLand uses a multi-view heterogeneous graph neural network tailored to capture the subtle structural representations of peptides. Empirical validation demonstrates PepLand's effectiveness across a range of peptide property prediction tasks, including protein-protein interactions, permeability, solubility, and synthesizability. The evaluation confirms PepLand's ability to capture salient features of synthetic peptides, laying a foundation for further advances in peptide-centric research. All source code used in this study is publicly available on GitHub (https://github.com/zhangruochi/pepland).
