Part-aware Prototype Network for Few-shot Semantic Segmentation

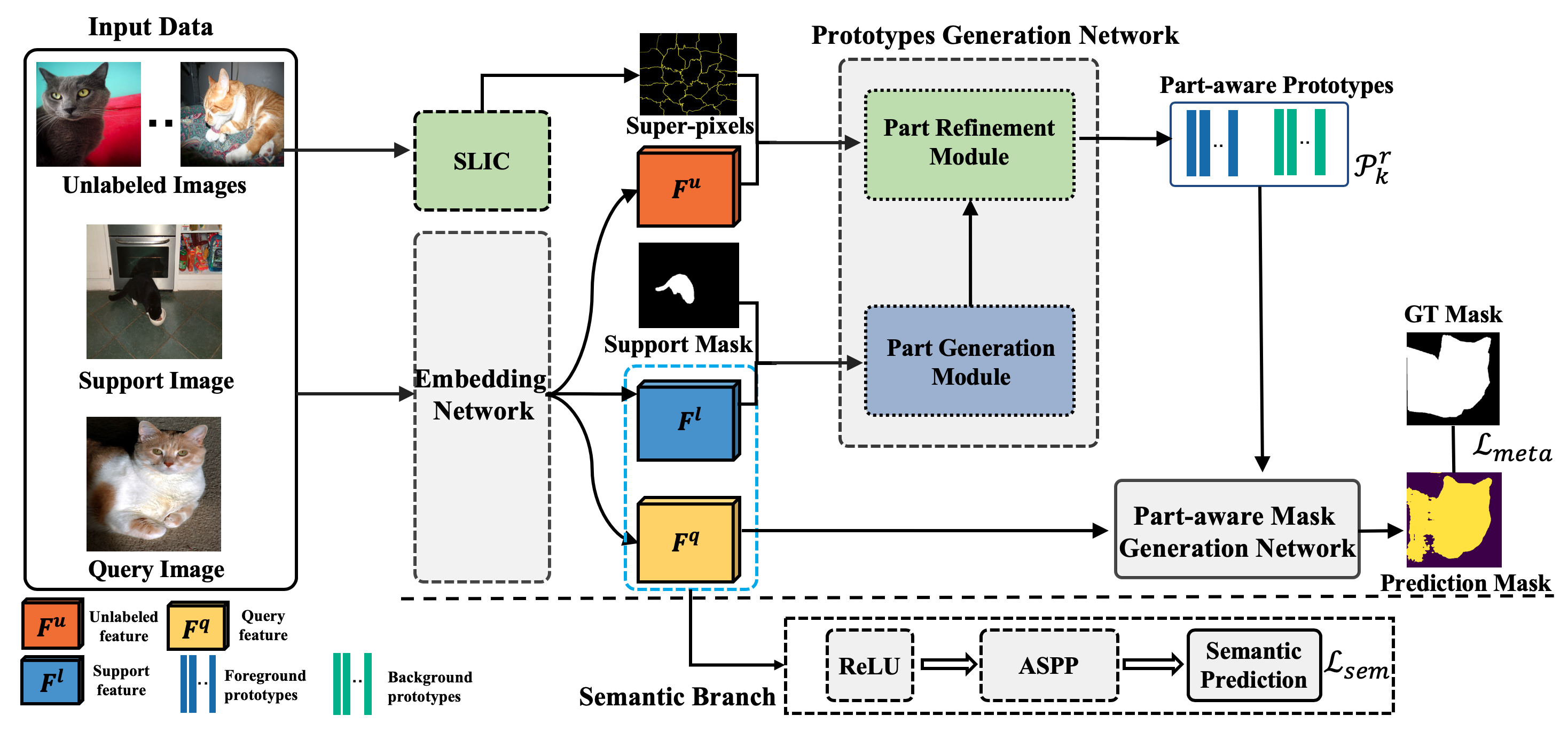

Few-shot semantic segmentation aims to learn to segment new object classes with only a few annotated examples, which has a wide range of real-world applications. Most existing methods either focus on the restrictive setting of one-way few-shot segmentation or suffer from incomplete coverage of object regions. In this paper, we propose a novel few-shot semantic segmentation framework based on the prototype representation. Our key idea is to decompose the holistic class representation into a set of part-aware prototypes, capable of capturing diverse and fine-grained object features. In addition, we propose to leverage unlabeled data to enrich our part-aware prototypes, resulting in better modeling of intra-class variations of semantic objects. We develop a novel graph neural network model to generate and enhance the proposed part-aware prototypes based on labeled and unlabeled images. Extensive experimental evaluations on two benchmarks show that our method outperforms the prior art with a sizable margin.

PDF Abstract ECCV 2020 PDF ECCV 2020 Abstract

MS COCO

MS COCO

PASCAL-5i

PASCAL-5i