OpenTag: Open Attribute Value Extraction from Product Profiles [Deep Learning, Active Learning, Named Entity Recognition]

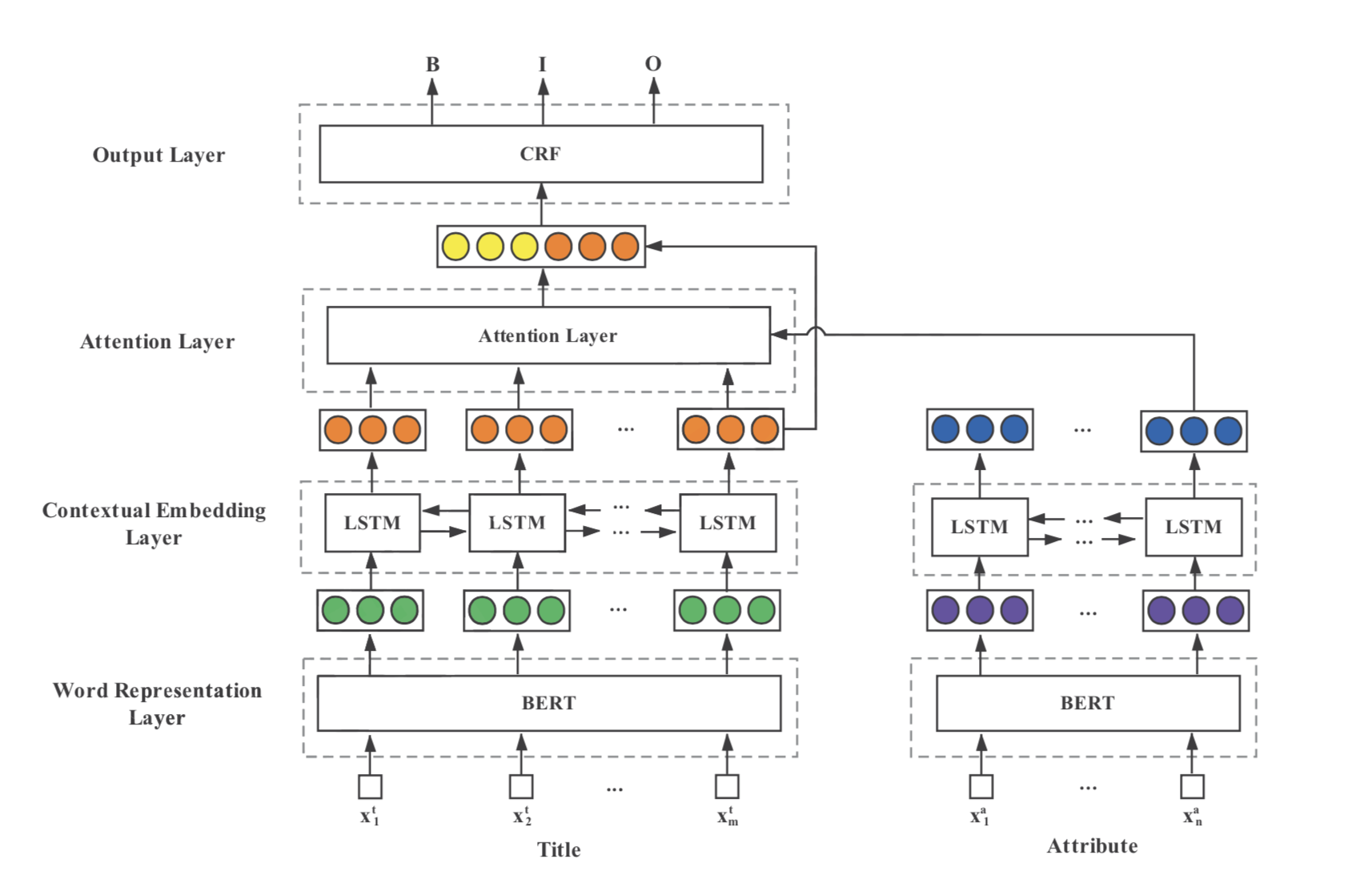

Extraction of missing attribute values is to find values describing an attribute of interest from a free text input. Most past related work on extraction of missing attribute values work with a closed world assumption with the possible set of values known beforehand, or use dictionaries of values and hand-crafted features. How can we discover new attribute values that we have never seen before? Can we do this with limited human annotation or supervision? We study this problem in the context of product catalogs that often have missing values for many attributes of interest. In this work, we leverage product profile information such as titles and descriptions to discover missing values of product attributes. We develop a novel deep tagging model OpenTag for this extraction problem with the following contributions: (1) we formalize the problem as a sequence tagging task, and propose a joint model exploiting recurrent neural networks (specifically, bidirectional LSTM) to capture context and semantics, and Conditional Random Fields (CRF) to enforce tagging consistency, (2) we develop a novel attention mechanism to provide interpretable explanation for our model's decisions, (3) we propose a novel sampling strategy exploring active learning to reduce the burden of human annotation. OpenTag does not use any dictionary or hand-crafted features as in prior works. Extensive experiments in real-life datasets in different domains show that OpenTag with our active learning strategy discovers new attribute values from as few as 150 annotated samples (reduction in 3.3x amount of annotation effort) with a high F-score of 83%, outperforming state-of-the-art models.

PDF Abstract