On Generation of Adversarial Examples using Convex Programming

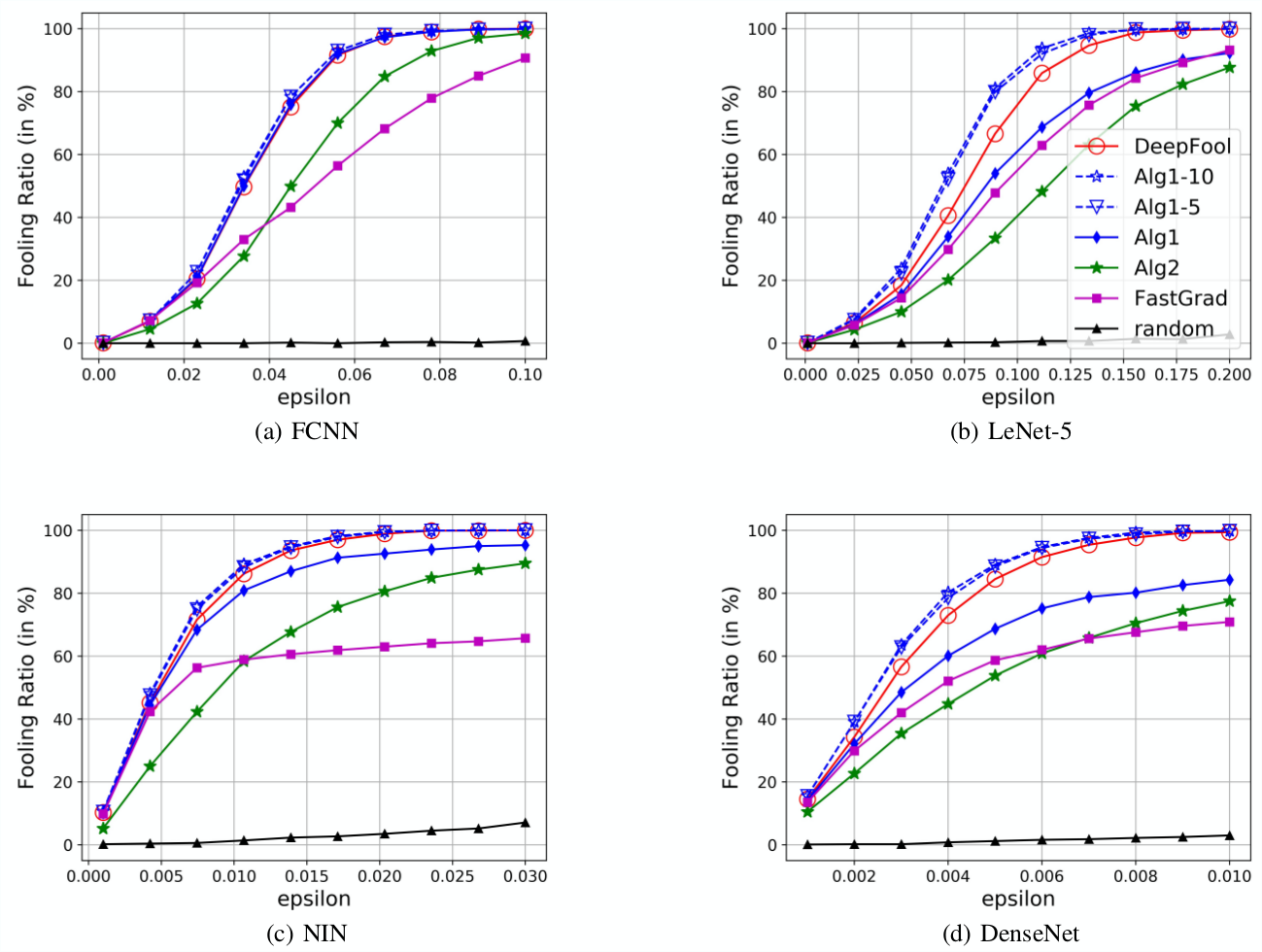

Deep learning architectures have been observed to make erroneous decisions with high confidence on specially designed adversarial instances. In this work, we show that perturbation analysis of these architectures provides a framework for generating adversarial instances by convex programming; for classification tasks, this framework recovers variants of existing non-adaptive adversarial methods. The framework can be used to design adversarial noise under various desirable constraints and for different types of networks. It also explains several existing adversarial methods and can be used to derive new ones, and we use these results to obtain novel algorithms. Experiments show that the obtained solutions are competitive, in terms of fooling ratio, with well-known adversarial methods.
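As an illustration of the general idea, and not the paper's own algorithm, the sketch below linearizes a classifier's decision margin around an input and minimizes that linear approximation under a norm constraint on the perturbation. This is a convex program whose solution has a closed form through the dual norm; with an ℓ∞ constraint it reduces to a sign-of-gradient step, similar in spirit to fast-gradient-sign attacks. The toy linear model (`W`, `b`), the input `x`, and the function name `linearized_perturbation` are assumptions made for this example only.

```python
import numpy as np

def linearized_perturbation(grad, eps, norm="inf"):
    """Closed-form minimizer of grad @ eta subject to ||eta||_norm <= eps.

    Hypothetical helper for illustration; `grad` is the gradient of the
    decision margin with respect to the input.
    """
    grad = np.asarray(grad, dtype=float)
    if norm == "inf":
        # The dual of the l-infinity norm is l1: push every coordinate to -eps * sign.
        return -eps * np.sign(grad)
    if norm == "2":
        # The l2 norm is self-dual: step against the normalized gradient.
        n = np.linalg.norm(grad)
        return np.zeros_like(grad) if n == 0 else -eps * grad / n
    raise ValueError("norm must be 'inf' or '2'")

# Toy two-class linear classifier (assumed): scores = W @ x + b.
W = np.array([[1.0, -2.0, 0.5],
              [-0.5, 1.0, 1.5]])
b = np.array([0.1, -0.1])
x = np.array([1.0, 0.2, -0.3])

scores = W @ x + b
label = int(np.argmax(scores))
other = 1 - label

# Margin f_label(x) - f_other(x); for a linear model its gradient is exact.
margin_grad = W[label] - W[other]

eta_inf = linearized_perturbation(margin_grad, eps=0.5, norm="inf")
eta_l2 = linearized_perturbation(margin_grad, eps=0.5, norm="2")

scores_adv = W @ (x + eta_inf) + b
adv_label = int(np.argmax(scores_adv))
```

For a deep network the margin is no longer linear in the input, so `margin_grad` would be a first-order approximation obtained by backpropagation, and the perturbation is only guaranteed to reduce the linearized margin.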

CIFAR-10