Non-Normal Mixtures of Experts

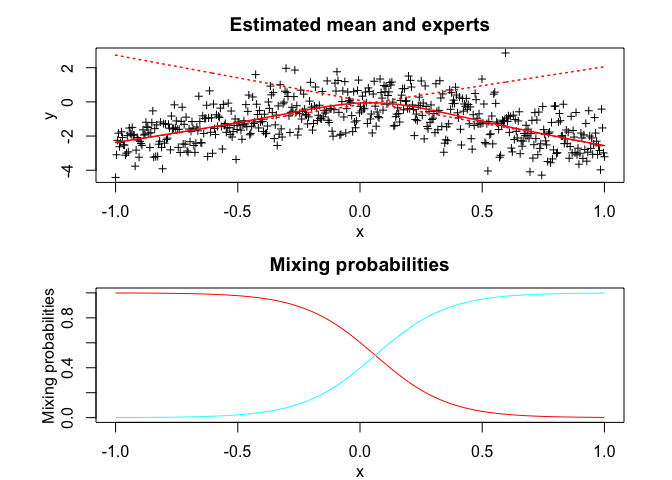

Mixture of Experts (MoE) is a popular framework for modeling heterogeneity in data for regression, classification and clustering. For continuous data which we consider here in the context of regression and cluster analysis, MoE usually use normal experts, that is, expert components following the Gaussian distribution. However, for a set of data containing a group or groups of observations with asymmetric behavior, heavy tails or atypical observations, the use of normal experts may be unsuitable and can unduly affect the fit of the MoE model. In this paper, we introduce new non-normal mixture of experts (NNMoE) which can deal with these issues regarding possibly skewed, heavy-tailed data and with outliers. The proposed models are the skew-normal MoE and the robust $t$ MoE and skew $t$ MoE, respectively named SNMoE, TMoE and STMoE. We develop dedicated expectation-maximization (EM) and expectation conditional maximization (ECM) algorithms to estimate the parameters of the proposed models by monotonically maximizing the observed data log-likelihood. We describe how the presented models can be used in prediction and in model-based clustering of regression data. Numerical experiments carried out on simulated data show the effectiveness and the robustness of the proposed models in terms modeling non-linear regression functions as well as in model-based clustering. Then, to show their usefulness for practical applications, the proposed models are applied to the real-world data of tone perception for musical data analysis, and the one of temperature anomalies for the analysis of climate change data.

PDF Abstract