NeAF: Learning Neural Angle Fields for Point Normal Estimation

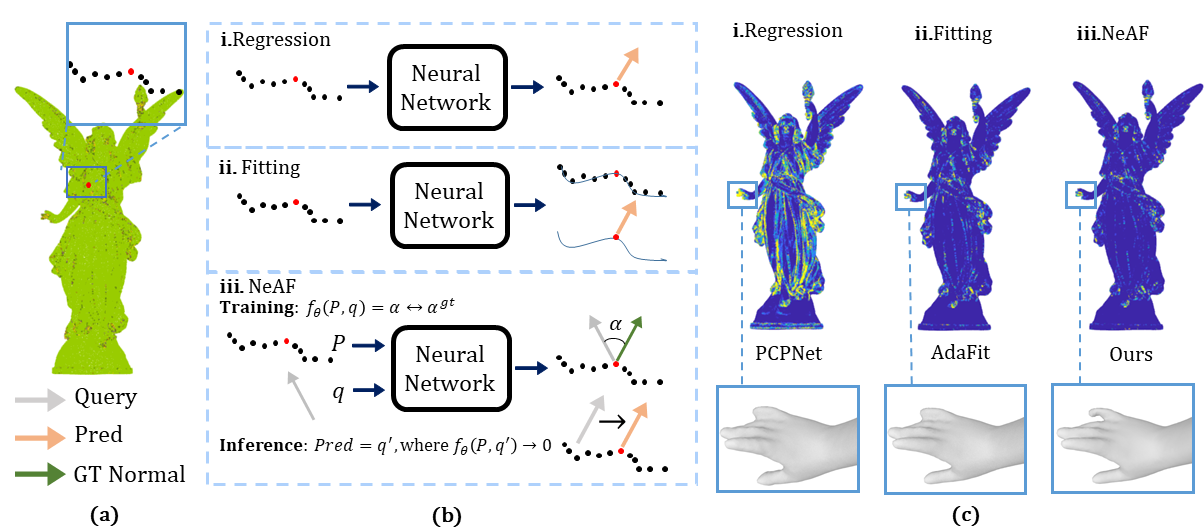

Normal estimation for unstructured point clouds is an important task in 3D computer vision. Current methods achieve encouraging results by mapping local patches to normal vectors or by learning local surface fitting with neural networks. However, these methods do not generalize well to unseen scenarios and are sensitive to parameter settings. To resolve these issues, we propose an implicit function that learns an angle field around the normal of each point in the spherical coordinate system, dubbed Neural Angle Fields (NeAF). Instead of directly predicting the normal of an input point, we predict the angle offset between the ground truth normal and a randomly sampled query normal. This strategy pushes the network to observe more diverse samples, which leads to higher prediction accuracy in a more robust manner. To predict normals from the learned angle fields at inference time, we randomly sample query vectors in a unit spherical space and take the vectors with minimal angle values as the predicted normals. To further leverage the prior learned by NeAF, we propose to refine the predicted normal vectors by minimizing the angle offsets. Experimental results on synthetic data and real scans show significant improvements over state-of-the-art methods on widely used benchmarks.
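The inference step described in the abstract (sample query vectors, keep the one with the smallest predicted angle) can be illustrated with a minimal sketch. This is not the authors' implementation: `toy_angle_field` is a hypothetical stand-in for the learned network that simply returns the exact angle to a known normal, and `sample_unit_vectors`, `predict_normal`, and the choice of 2000 queries are assumptions made for illustration. The sketch also keeps only the single best query, whereas the abstract speaks of taking the vectors with minimal angle values.

```python
import numpy as np

def sample_unit_vectors(n, rng):
    """Draw n directions uniformly on the unit sphere (normalized Gaussians)."""
    v = rng.normal(size=(n, 3))
    return v / np.linalg.norm(v, axis=1, keepdims=True)

def toy_angle_field(queries, true_normal):
    """Hypothetical stand-in for the learned angle field.

    Returns the exact angle (radians) between each query vector and a
    known normal. A trained network would predict this offset from the
    local point patch instead of having access to the true normal.
    """
    cos = np.clip(queries @ true_normal, -1.0, 1.0)
    return np.arccos(cos)

def predict_normal(angle_field, n_queries=2000, seed=0):
    """Sample query vectors and return the one with the minimal angle value."""
    rng = np.random.default_rng(seed)
    queries = sample_unit_vectors(n_queries, rng)
    angles = angle_field(queries)
    best = np.argmin(angles)
    return queries[best], angles[best]

# Assumed ground-truth normal for the toy example.
true_normal = np.array([0.0, 0.0, 1.0])
pred, pred_angle = predict_normal(lambda q: toy_angle_field(q, true_normal))
print("predicted normal:", pred)
print("angle offset (rad):", pred_angle)
```

With random sampling alone, the accuracy is limited by how densely the queries cover the sphere, which is consistent with the abstract's motivation for a further refinement step that minimizes the angle offset.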


Semantic3D

SceneNN
