Multimodal Compact Bilinear Pooling for Visual Question Answering and Visual Grounding

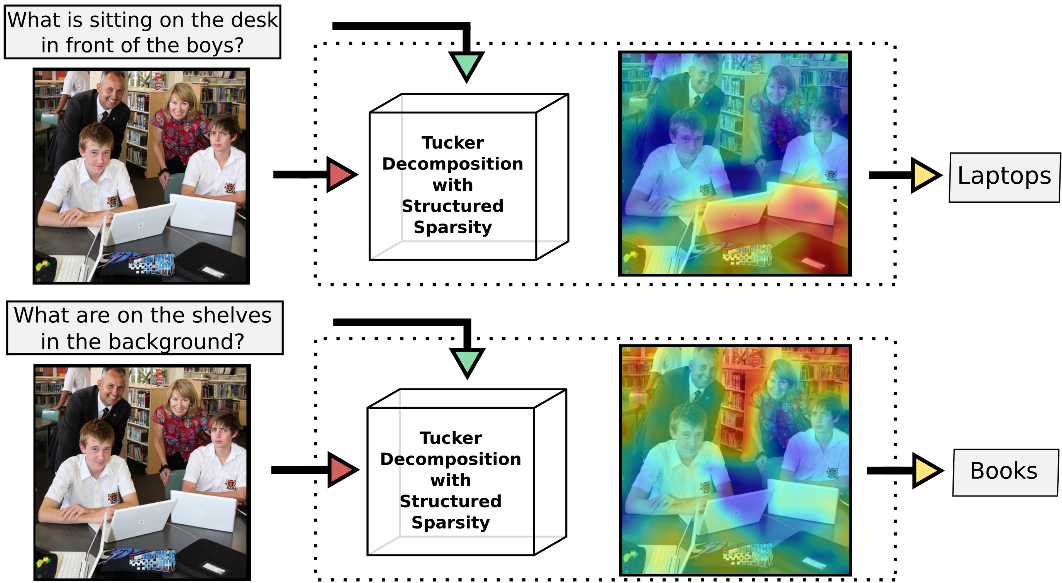

Modeling textual or visual information with vector representations trained from large language or visual datasets has been successfully explored in recent years. However, tasks such as visual question answering require combining these vector representations with each other. Approaches to multimodal pooling include element-wise product or sum, as well as concatenation of the visual and textual representations. We hypothesize that these methods are not as expressive as an outer product of the visual and textual vectors. As the outer product is typically infeasible due to its high dimensionality, we instead propose using Multimodal Compact Bilinear pooling (MCB) to efficiently and expressively combine multimodal features. We extensively evaluate MCB on the visual question answering and grounding tasks, and consistently show the benefit of MCB over ablations without MCB. For visual question answering, we present an architecture that uses MCB twice: once to predict attention over spatial features, and again to combine the attended representation with the question representation. This model outperforms the state of the art on the Visual7W dataset and the VQA challenge.
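To see why the full outer product is usually infeasible, take a 2048-d visual vector and a 2048-d question vector: their outer product has 2048 × 2048 = 4,194,304 dimensions. Compact bilinear pooling approximates that outer product in a much smaller space without ever forming it. The following is a minimal NumPy sketch, assuming the Count Sketch + FFT construction (Tensor Sketch) that compact bilinear pooling is built on. The function names (`make_count_sketch_params`, `count_sketch`, `compact_bilinear`) and the 2048/16,000 sizes are illustrative assumptions, not details stated in this abstract.

```python
import numpy as np

# Hypothetical helper: draw a random hash h: [in_dim] -> [out_dim]
# and a random sign s: [in_dim] -> {-1, +1}. In this sketch they are
# sampled once and then kept fixed (not learned).
def make_count_sketch_params(in_dim, out_dim, rng):
    h = rng.integers(0, out_dim, size=in_dim)
    s = rng.choice(np.array([-1.0, 1.0]), size=in_dim)
    return h, s

# Count Sketch projection: y[h[i]] += s[i] * v[i].
# np.add.at accumulates correctly when several i share the same bucket.
def count_sketch(v, h, s, out_dim):
    y = np.zeros(out_dim)
    np.add.at(y, h, s * v)
    return y

# Hypothetical compact bilinear pooling of two vectors x and q:
# sketch each input, then circularly convolve the two sketches.
# By the convolution theorem this is an element-wise product in the
# frequency domain, so the outer product is never materialized.
def compact_bilinear(x, q, params_x, params_q, out_dim):
    sx = count_sketch(x, *params_x, out_dim)
    sq = count_sketch(q, *params_q, out_dim)
    return np.fft.irfft(np.fft.rfft(sx) * np.fft.rfft(sq), n=out_dim)

if __name__ == "__main__":
    rng = np.random.default_rng(0)
    visual_dim, text_dim, out_dim = 2048, 2048, 16000  # illustrative sizes
    px = make_count_sketch_params(visual_dim, out_dim, rng)
    pq = make_count_sketch_params(text_dim, out_dim, rng)
    v = rng.standard_normal(visual_dim)  # stand-in for a visual feature
    t = rng.standard_normal(text_dim)    # stand-in for a question feature
    z = compact_bilinear(v, t, px, pq, out_dim)
    print(z.shape)                # (16000,)
    print(visual_dim * text_dim)  # 4194304, size of the full outer product
```

The design choice rests on an identity: circularly convolving the two Count Sketches gives exactly the Count Sketch of the flattened outer product under the combined hash `(h_x[i] + h_q[j]) mod out_dim` and sign `s_x[i] * s_q[j]`. The FFT route therefore reproduces that sketch at roughly O(out_dim log out_dim) cost instead of touching all 4,194,304 outer-product entries.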

EMNLP 2016

MS COCO

Visual Question Answering

Visual Genome

Flickr30k

Visual Question Answering v2.0

Flickr30K Entities

Visual7W