Multimodal Chain-of-Thought Reasoning in Language Models

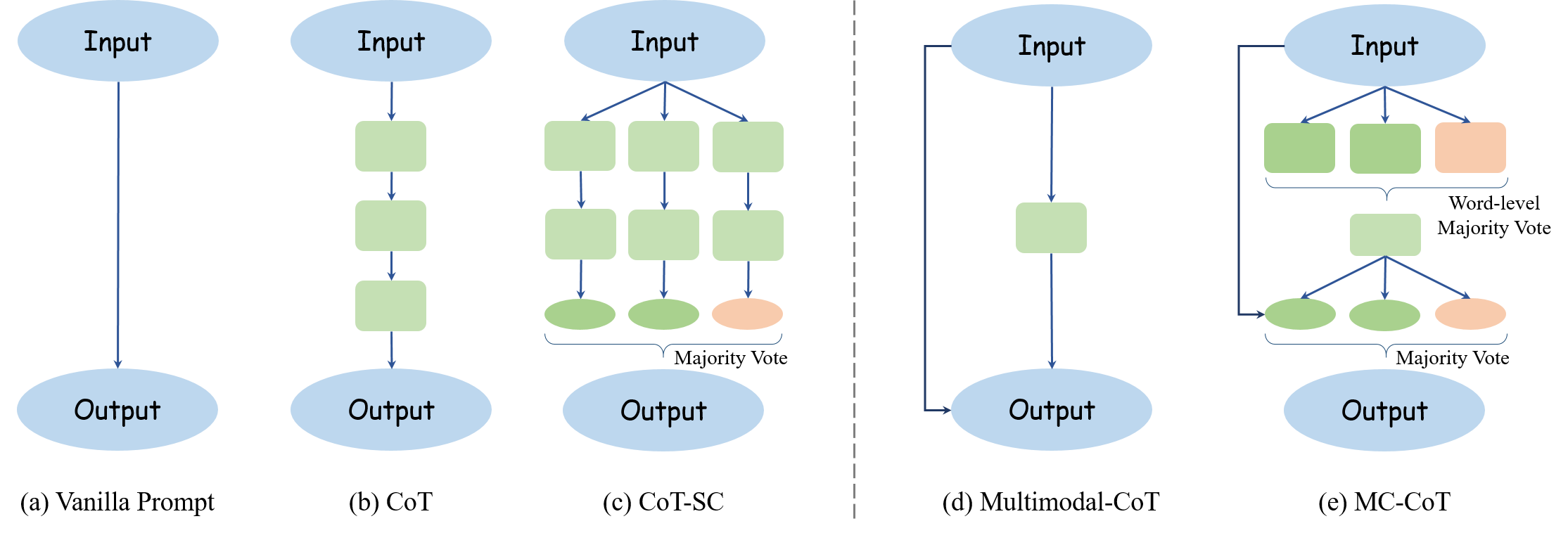

Large language models (LLMs) have shown impressive performance on complex reasoning by leveraging chain-of-thought (CoT) prompting to generate intermediate reasoning chains as the rationale for inferring the answer. However, existing CoT studies have focused on the language modality. We propose Multimodal-CoT, which incorporates language (text) and vision (images) modalities into a two-stage framework that separates rationale generation and answer inference. In this way, answer inference can leverage better rationales generated from multimodal information. With Multimodal-CoT, our model with under 1 billion parameters outperforms the previous state-of-the-art LLM (GPT-3.5) by 16.51 percentage points (from 75.17% to 91.68% accuracy) on the ScienceQA benchmark and even surpasses human performance. Code is publicly available at https://github.com/amazon-science/mm-cot.
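The two-stage design can be sketched as a small pipeline. This is a minimal, framework-agnostic sketch, not the authors' implementation: the `Example` type, the prompt format (`Question:` / `Context:` / `Options:` / `Solution:`), the flat list of floats standing in for image features, and the rule-based stub models are all hypothetical. It assumes that stage 2 receives the stage-1 rationale appended to its text input, together with the same vision features; in practice each stage would be a trained vision-language model rather than a plain callable.

```python
from dataclasses import dataclass, field
from typing import Callable, Optional, Sequence, Tuple

# Hypothetical stand-in for visual input (real systems use vision-encoder features).
Features = Sequence[float]

# A stage "model" is any callable mapping (text input, image features) -> generated text.
StageModel = Callable[[str, Features], str]


@dataclass
class Example:
    question: str
    context: str
    options: Sequence[str]
    image_features: Features = field(default_factory=list)


def build_text_input(ex: Example, rationale: Optional[str] = None) -> str:
    """Format the language input; append a rationale when one is given (stage 2)."""
    opts = " ".join(f"({chr(65 + i)}) {o}" for i, o in enumerate(ex.options))
    text = f"Question: {ex.question}\nContext: {ex.context}\nOptions: {opts}"
    if rationale is not None:
        text += f"\nSolution: {rationale}"
    return text


def multimodal_cot(
    ex: Example, rationale_model: StageModel, answer_model: StageModel
) -> Tuple[str, str]:
    # Stage 1: rationale generation from language + vision inputs.
    rationale = rationale_model(build_text_input(ex), ex.image_features)
    # Stage 2: answer inference; the generated rationale is appended to the
    # language input, and the same vision features are passed in again.
    answer = answer_model(build_text_input(ex, rationale), ex.image_features)
    return rationale, answer


# Toy rule-based stubs (hypothetical, for illustration only; not real models).
def toy_rationale_model(text: str, feats: Features) -> str:
    return "Magnets with like poles facing each other repel."


def toy_answer_model(text: str, feats: Features) -> str:
    # "like poles" only appears in the input if the stage-1 rationale was appended.
    return "(A) repel" if "like poles" in text else "(B) attract"


ex = Example(
    question="Will these magnets attract or repel each other?",
    context="Two magnets are placed with their north poles facing.",
    options=["repel", "attract"],
    image_features=[0.1, 0.2],
)
rationale, answer = multimodal_cot(ex, toy_rationale_model, toy_answer_model)
print(answer)  # (A) repel
```

Keeping the two stages as separate callables reflects the separation the abstract describes: the rationale and answer models can be trained and evaluated independently, and the only thing passed between them is the generated rationale text.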


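Datasets

ScienceQA

Results on ScienceQA (accuracy, %):

| Task | Dataset | Model | Metric | Accuracy (%) | Global Rank |
|---|---|---|---|---|---|
| Science Question Answering | ScienceQA | Multimodal CoT | Natural Science | 95.91 | #1 |
| Science Question Answering | ScienceQA | Multimodal CoT | Social Science | 82.00 | #3 |
| Science Question Answering | ScienceQA | Multimodal CoT | Language Science | 90.82 | #2 |
| Science Question Answering | ScienceQA | Multimodal CoT | Text Context | 95.26 | #1 |
| Science Question Answering | ScienceQA | Multimodal CoT | Image Context | 88.80 | #2 |
| Science Question Answering | ScienceQA | Multimodal CoT | No Context | 92.89 | #2 |
| Science Question Answering | ScienceQA | Multimodal CoT | Grades 1-6 | 92.44 | #2 |
| Science Question Answering | ScienceQA | Multimodal CoT | Grades 7-12 | 90.31 | #3 |
| Science Question Answering | ScienceQA | Multimodal CoT | Avg. Accuracy | 91.68 | #3 |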