Multi-view to Novel view: Synthesizing Novel Views with Self-Learned Confidence

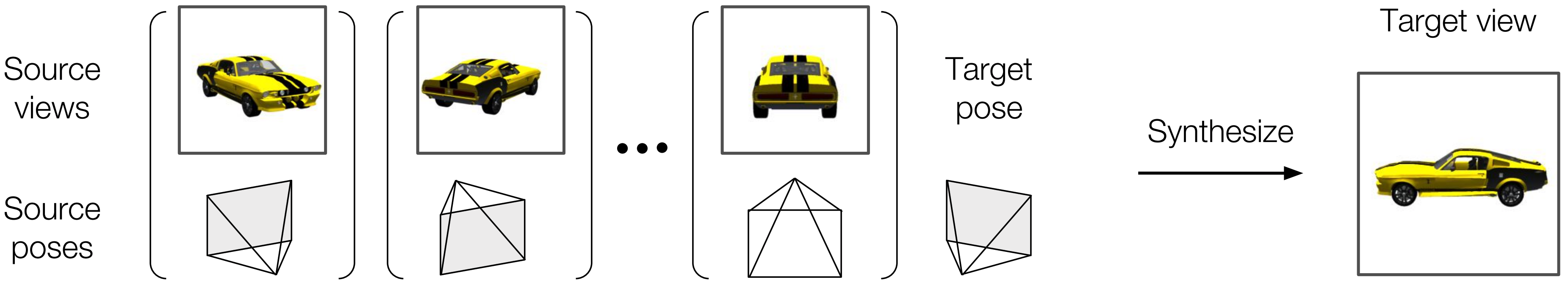

We address the task of multi-view novel view synthesis, where we are interested in synthesizing a target image with an arbitrary camera pose from given source images. We propose an end-to-end trainable framework that learns to exploit multiple viewpoints to synthesize a novel view without any 3D supervision. Specifically, our model consists of a flow prediction module and a pixel generation module to directly leverage information presented in source views as well as hallucinate missing pixels from statistical priors. To merge the predictions produced by the two modules given multi-view source images, we introduce a self-learned confidence aggregation mechanism. We evaluate our model on images rendered from 3D object models as well as real and synthesized scenes. We demonstrate that our model is able to achieve state-of-the-art results as well as progressively improve its predictions when more source images are available.
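As a rough illustration of what confidence-based aggregation could look like, the sketch below fuses several candidate predictions of the same target image using per-pixel confidence scores. The abstract does not specify how confidences are normalized or combined, so the softmax weighting, the function name `aggregate`, and the flat-list image representation are all assumptions made for illustration, not the authors' implementation.

```python
import math

def aggregate(predictions, confidences):
    """Fuse N candidate images into one using per-pixel confidence weights.

    predictions: list of N images, each a flat list of pixel intensities.
    confidences: list of N confidence maps with the same layout.
    Assumption: confidences are unnormalized scores that are turned into
    weights with a per-pixel softmax across the N candidates.
    """
    n_pixels = len(predictions[0])
    fused = []
    for p in range(n_pixels):
        scores = [conf[p] for conf in confidences]
        top = max(scores)  # subtract the max for numerical stability
        weights = [math.exp(s - top) for s in scores]
        total = sum(weights)
        value = sum(w * pred[p] for w, pred in zip(weights, predictions)) / total
        fused.append(value)
    return fused

# Two hypothetical candidates for a 2-pixel target image.
flow_pred = [0.0, 1.0]   # e.g. pixels warped from a source view
pixel_pred = [1.0, 1.0]  # e.g. pixels hallucinated by a generator
equal_conf = [[0.0, 0.0], [0.0, 0.0]]
fused_equal = aggregate([flow_pred, pixel_pred], equal_conf)
```

With equal confidences the result is a plain per-pixel average; raising one candidate's score at a pixel pulls the fused value toward that candidate. Because the weighting runs over however many candidates are supplied, the same routine accepts additional source views without modification.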

Proceedings of 2018

Tasks

Results from the Paper

| Task | Dataset | Model | Metric Name | Metric Value | Global Rank |
|---|---|---|---|---|---|
| Novel View Synthesis | KITTI Novel View Synthesis | Multi-view to Novel View | SSIM | 0.626 | #1 |
| Novel View Synthesis | ShapeNet Car | Multi-view to Novel View | SSIM | 0.923 | #1 |
| Novel View Synthesis | ShapeNet Chair | Multi-view to Novel View | SSIM | 0.895 | #1 |
| Novel View Synthesis | Synthia Novel View Synthesis | Multi-view to Novel View | SSIM | 0.697 | #1 |

KITTI

ShapeNet

SYNTHIA