MLIC: Multi-Reference Entropy Model for Learned Image Compression

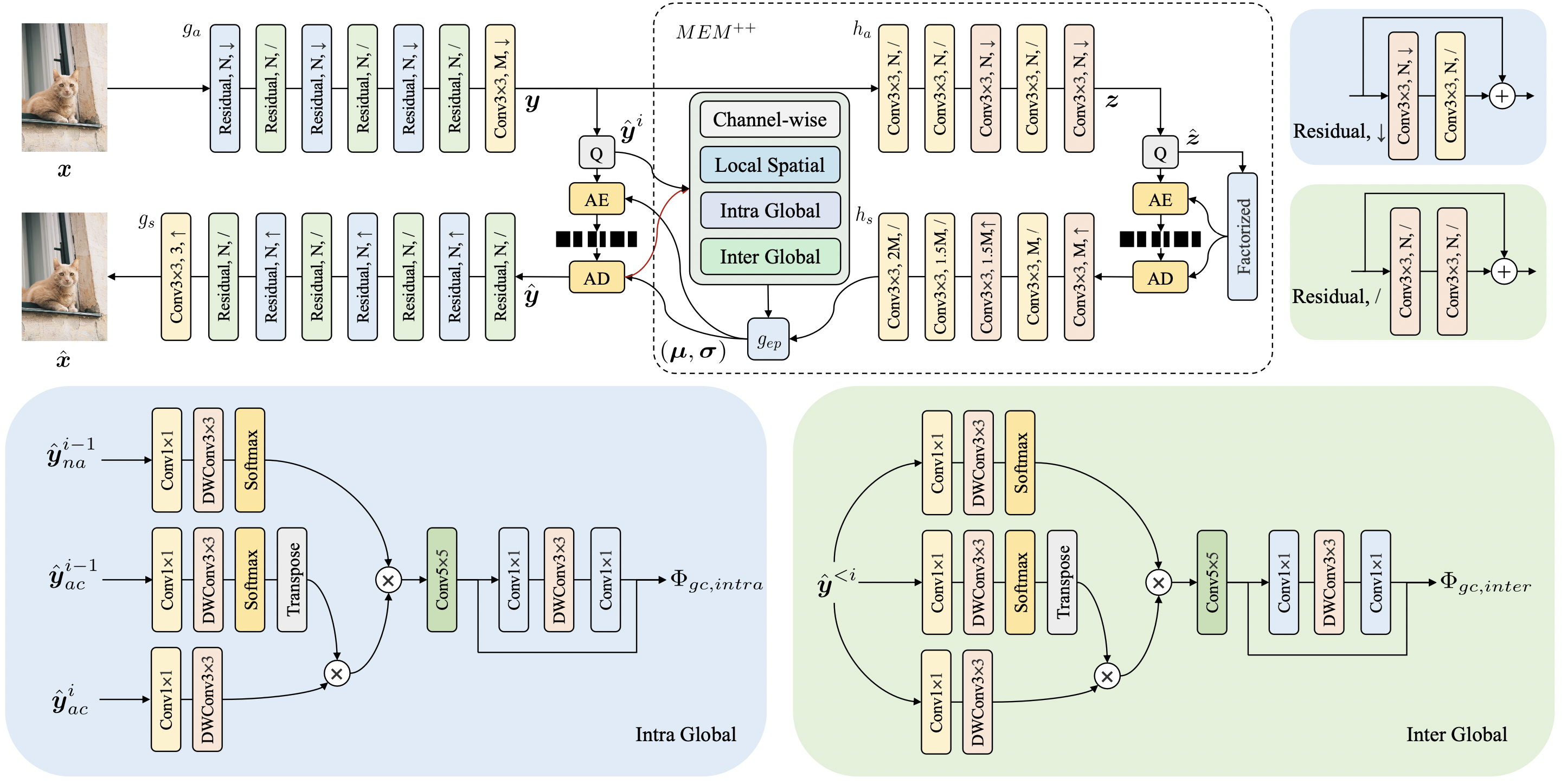

Recently, learned image compression has achieved remarkable performance. The entropy model, which estimates the distribution of the latent representation, plays a crucial role in boosting rate-distortion performance. However, most entropy models only capture correlations in one dimension, while the latent representation contain channel-wise, local spatial, and global spatial correlations. To tackle this issue, we propose the Multi-Reference Entropy Model (MEM) and the advanced version, MEM$^+$. These models capture the different types of correlations present in latent representation. Specifically, We first divide the latent representation into slices. When decoding the current slice, we use previously decoded slices as context and employ the attention map of the previously decoded slice to predict global correlations in the current slice. To capture local contexts, we introduce two enhanced checkerboard context capturing techniques that avoids performance degradation. Based on MEM and MEM$^+$, we propose image compression models MLIC and MLIC$^+$. Extensive experimental evaluations demonstrate that our MLIC and MLIC$^+$ models achieve state-of-the-art performance, reducing BD-rate by $8.05\%$ and $11.39\%$ on the Kodak dataset compared to VTM-17.0 when measured in PSNR. Our code is available at https://github.com/JiangWeibeta/MLIC.

PDF Abstract

Kodak

Kodak